ABSTRACT

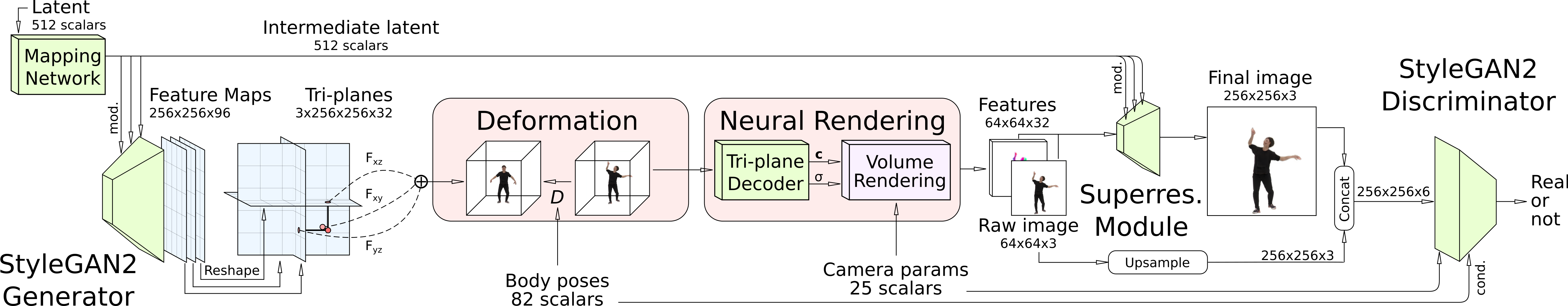

Unsupervised learning of 3D-aware generative adversarial networks (GANs) using only collections of single-view 2D photographs has recently made rapid progress. These 3D GANs, however, have not been demonstrated for human bodies, and the radiance fields generated by existing frameworks are not directly editable, which limits their applicability in downstream tasks. We address these challenges with a 3D GAN framework that learns to generate radiance fields of human bodies or faces in a canonical pose and to warp them into a desired body pose or facial expression using an explicit deformation field. Using our framework, we demonstrate the first high-quality radiance field generation results for human bodies. Moreover, we show that our deformation-aware training procedure significantly improves the quality of generated bodies or faces when editing their poses or facial expressions, compared to a 3D GAN that is not trained with explicit deformations.
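The canonical-pose-plus-deformation idea can be illustrated with backward warping: every ray sample in the posed (observation) space is mapped back into canonical space, where the generated radiance field is queried, and the resulting densities are composited along the ray. This is a minimal sketch under loudly stated assumptions: the canonical field is a hypothetical toy (a unit sphere), the deformation is a single rigid transform rather than a learned or template-driven deformation field, and all function names are illustrative. GNARF's actual generator and deformation model are not reproduced here.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def canonical_density(p: Vec3) -> float:
    """Hypothetical stand-in for a generated canonical radiance field:
    density 1 inside a unit sphere at the origin, 0 outside."""
    x, y, z = p
    return 1.0 if x * x + y * y + z * z <= 1.0 else 0.0


def forward_warp(p: Vec3, angle: float, t: Vec3) -> Vec3:
    """Canonical -> posed (assumed rigid): rotate by `angle` about z, then translate by `t`."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y + t[0], s * x + c * y + t[1], z + t[2])


def backward_warp(p: Vec3, angle: float, t: Vec3) -> Vec3:
    """Posed -> canonical: the inverse of forward_warp, x_c = R^T (x_o - t)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p[0] - t[0], p[1] - t[1], p[2] - t[2]
    return (c * x + s * y, -s * x + c * y, z)


def posed_density(p: Vec3, angle: float, t: Vec3) -> float:
    """Query the canonical field at the backward-warped location of a posed-space sample."""
    return canonical_density(backward_warp(p, angle, t))


def ray_opacity(origin: Vec3, direction: Vec3, near: float, far: float,
                n: int, angle: float, t: Vec3, sigma_scale: float = 10.0) -> float:
    """Accumulated opacity 1 - exp(-sum(sigma_i * delta)) over n midpoint samples,
    each deformed into canonical space before the field is queried."""
    delta = (far - near) / n
    tau = 0.0
    for i in range(n):
        d = near + (i + 0.5) * delta
        sample = (origin[0] + d * direction[0],
                  origin[1] + d * direction[1],
                  origin[2] + d * direction[2])
        tau += sigma_scale * posed_density(sample, angle, t) * delta
    return 1.0 - math.exp(-tau)


# Pose the toy sphere at x = 3 (the 45-degree rotation leaves a sphere unchanged).
angle, t = math.pi / 4, (3.0, 0.0, 0.0)
print(ray_opacity((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), 0.0, 10.0, 100, angle, t))  # close to 1
print(ray_opacity((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), 0.0, 10.0, 100, angle, t))  # 0.0
```

Backward warping is used here because volume rendering evaluates the field at samples along camera rays in posed space; mapping those samples into canonical space lets a single canonical field be rendered in any pose.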

CITATION

A.W. Bergman, P. Kellnhofer, W. Yifan, E.R. Chan, D.B. Lindell, G. Wetzstein, Generative Neural Articulated Radiance Fields, NeurIPS 2022.

```bibtex
@inproceedings{bergman2022gnarf,
  author = {Bergman, Alexander W. and Kellnhofer, Petr and Yifan, Wang and Chan, Eric R. and Lindell, David B. and Wetzstein, Gordon},
  title = {Generative Neural Articulated Radiance Fields},
  booktitle = {NeurIPS},
  year = {2022},
}
```

QUANTITATIVE RESULTS

| Bodies | AIST++ FID | AIST++ PCKh@0.5 | SURREAL FID | SURREAL PCKh@0.5 |
|---|---|---|---|---|
| ENARF-GAN | – | – | 21.3 | 0.966 |
| EG3D+warping | 66.5 | 0.855 | 163.9 | 0.348 |
| GNARF | 7.9 | 0.980 | 4.7 | 0.999 |

GNARF outperforms the concurrent work ENARF-GAN and an EG3D+warping baseline, which deforms a trained EG3D model by estimating the pose of each generated body and deforming ray samples into a target pose. Results are measured with FID (lower is better), which compares the distribution of generated images to that of real images, and with PCKh@0.5 (higher is better), which measures how closely the pose of the rendered body matches the target pose. ENARF-GAN results are reported only for SURREAL.
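PCKh@0.5 counts a keypoint as correct when it lies within 0.5 times the head segment length of its target location. The sketch below assumes 2D keypoints have already been extracted from each rendered image (for example with an off-the-shelf pose estimator) and from the target pose; the function names are hypothetical, and details such as the keypoint set and head-size definition used for the table above are not specified here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Sample = Tuple[Sequence[Point], Sequence[Point], float]  # (pred, target, head_size)


def pckh(pred: Sequence[Point], target: Sequence[Point],
         head_size: float, alpha: float = 0.5) -> float:
    """Fraction of keypoints whose prediction lies within alpha * head_size of the target."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equally long")
    if head_size <= 0:
        raise ValueError("head_size must be positive")
    threshold = alpha * head_size
    correct = sum(1 for p, q in zip(pred, target) if math.dist(p, q) <= threshold)
    return correct / len(pred)


def mean_pckh(samples: Sequence[Sample], alpha: float = 0.5) -> float:
    """Average PCKh over rendered images, one (pred, target, head_size) triple per image."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(pckh(p, t, h, alpha) for p, t, h in samples) / len(samples)


pred = [(0.0, 0.0), (10.0, 0.0), (0.0, 3.0)]
target = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
# Threshold is 0.5 * 8 = 4 px; distances are 0, 10 and 3, so 2 of 3 keypoints count.
print(pckh(pred, target, head_size=8.0))
```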

| Faces | FID | AED | APD | ID-Consistency |
|---|---|---|---|---|
| EG3D+warping | 22.9 | 0.29 | 0.028 | 0.81 |
| PIRender | 64.4 | 0.28 | 0.040 | 0.70 |
| 3D GAN Inversion | 31.2 | 0.36 | 0.039 | 0.73 |
| GNARF | 17.9 | 0.23 | 0.025 | 0.80 |

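Similarly, GNARF outperforms competing methods (PIRender and 3D GAN inversion) and the EG3D+warping baseline on most metrics when animating facial expressions. Results are measured with FID; Average Expression Distance (AED) and Average Pose Distance (APD), which measure how closely the rendered face matches the target expression and head pose, respectively (lower is better); and ID-consistency, which measures how well the subject's identity is preserved (higher is better). GNARF achieves the best FID, AED, and APD, and its ID-consistency (0.80) is within 0.01 of the best result (0.81, EG3D+warping).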
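The face metrics can be sketched as follows, assuming AED and APD are mean L1 distances between coefficient vectors (expression coefficients for AED, head-pose parameters for APD, e.g. estimated by a 3D face reconstruction network) and ID-consistency is the mean cosine similarity between face-recognition embeddings of rendered and reference images. These definitions and all function names are illustrative assumptions; the exact extractors and distances behind the table above may differ.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def mean_l1(a: Vector, b: Vector) -> float:
    """Mean absolute difference between two equally long coefficient vectors."""
    if len(a) != len(b) or not a:
        raise ValueError("vectors must be non-empty and equally long")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def average_distance(rendered: Sequence[Vector], target: Sequence[Vector]) -> float:
    """AED/APD-style score (assumed definition): per-image L1 distance, averaged. Lower is better."""
    if len(rendered) != len(target) or not rendered:
        raise ValueError("need equally many, non-zero rendered and target vectors")
    return sum(mean_l1(r, t) for r, t in zip(rendered, target)) / len(rendered)


def cosine_similarity(u: Vector, v: Vector) -> float:
    """Cosine of the angle between two embedding vectors."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("embeddings must be non-zero")
    return sum(x * y for x, y in zip(u, v)) / (nu * nv)


def id_consistency(rendered_emb: Sequence[Vector], reference_emb: Sequence[Vector]) -> float:
    """Mean cosine similarity between rendered and reference identity embeddings. Higher is better."""
    if len(rendered_emb) != len(reference_emb) or not rendered_emb:
        raise ValueError("need equally many, non-zero embeddings")
    pairs = zip(rendered_emb, reference_emb)
    return sum(cosine_similarity(r, s) for r, s in pairs) / len(rendered_emb)


# Per-image distances are (0 + 2) / 2 = 1 and (0 + 4) / 2 = 2, so the average is 1.5.
print(average_distance([[1.0, 2.0], [0.0, 0.0]], [[1.0, 0.0], [0.0, 4.0]]))
# One identical pair (similarity 1) and one orthogonal pair (similarity 0) average to 0.5.
print(id_consistency([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [1.0, 0.0]]))
```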