ABSTRACT

Unsupervised generation of high-quality multi-view-consistent images and 3D shapes using only collections of single-view 2D photographs has been a long-standing challenge. Existing 3D GANs are either compute-intensive or make approximations that are not 3D-consistent; the former limits quality and resolution of the generated images and the latter adversely affects multi-view consistency and shape quality. In this work, we improve the computational efficiency and image quality of 3D GANs without overly relying on these approximations. For this purpose, we introduce an expressive hybrid explicit-implicit network architecture that, together with other design choices, synthesizes not only high-resolution multi-view-consistent images in real time but also produces high-quality 3D geometry. By decoupling feature generation and neural rendering, our framework is able to leverage state-of-the-art 2D CNN generators, such as StyleGAN2, and inherit their efficiency and expressiveness. We demonstrate state-of-the-art 3D-aware synthesis with FFHQ and AFHQ Cats, among other experiments.

FILES

CITATION

E.R. Chan*, C.Z. Lin*, M.A. Chan*, K. Nagano*, B. Pan, S. De Mello, O. Gallo, L. Guibas, J. Tremblay, S. Khamis, T. Karras, G. Wetzstein, Efficient Geometry-aware 3D Generative Adversarial Networks, CVPR 2022

@inproceedings{Chan2022,

author = {Eric R. Chan and Connor Z. Lin and Matthew A. Chan and Koki Nagano and Boxiao Pan and Shalini De Mello and Orazio Gallo and Leonidas Guibas and Jonathan Tremblay and Sameh Khamis and Tero Karras and Gordon Wetzstein},

title = {{Efficient Geometry-aware 3D Generative Adversarial Networks}},

booktitle = {CVPR},

year = {2022}

}

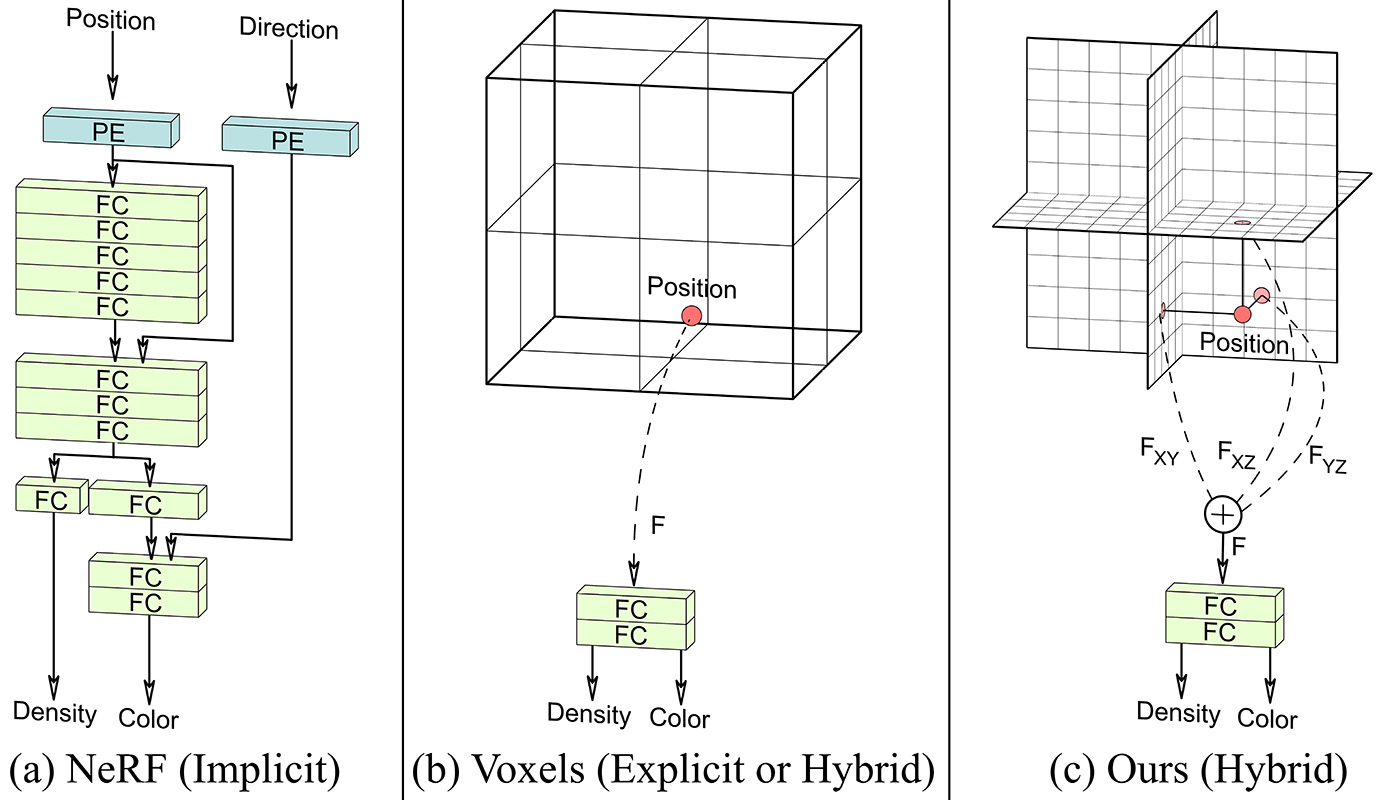

Tri-plane Representation

|

|

| Training a GAN with neural rendering is expensive, so we use a hybrid explicit-implicit 3D representation in order to make neural rendering as efficient as possible. Our representation combines an explicit backbone, which produces features aligned on three orthogonal planes, with a small implicit decoder. Compared to a typical multilayer perceptron representation, our 3D representation is more than seven times faster and uses less than one sixteenth as much memory. In using StyleGAN2 as the backbone of our representation, we inherit the qualities of the backbone, including a well-behaved latent space. |