GazeGPT | 2024

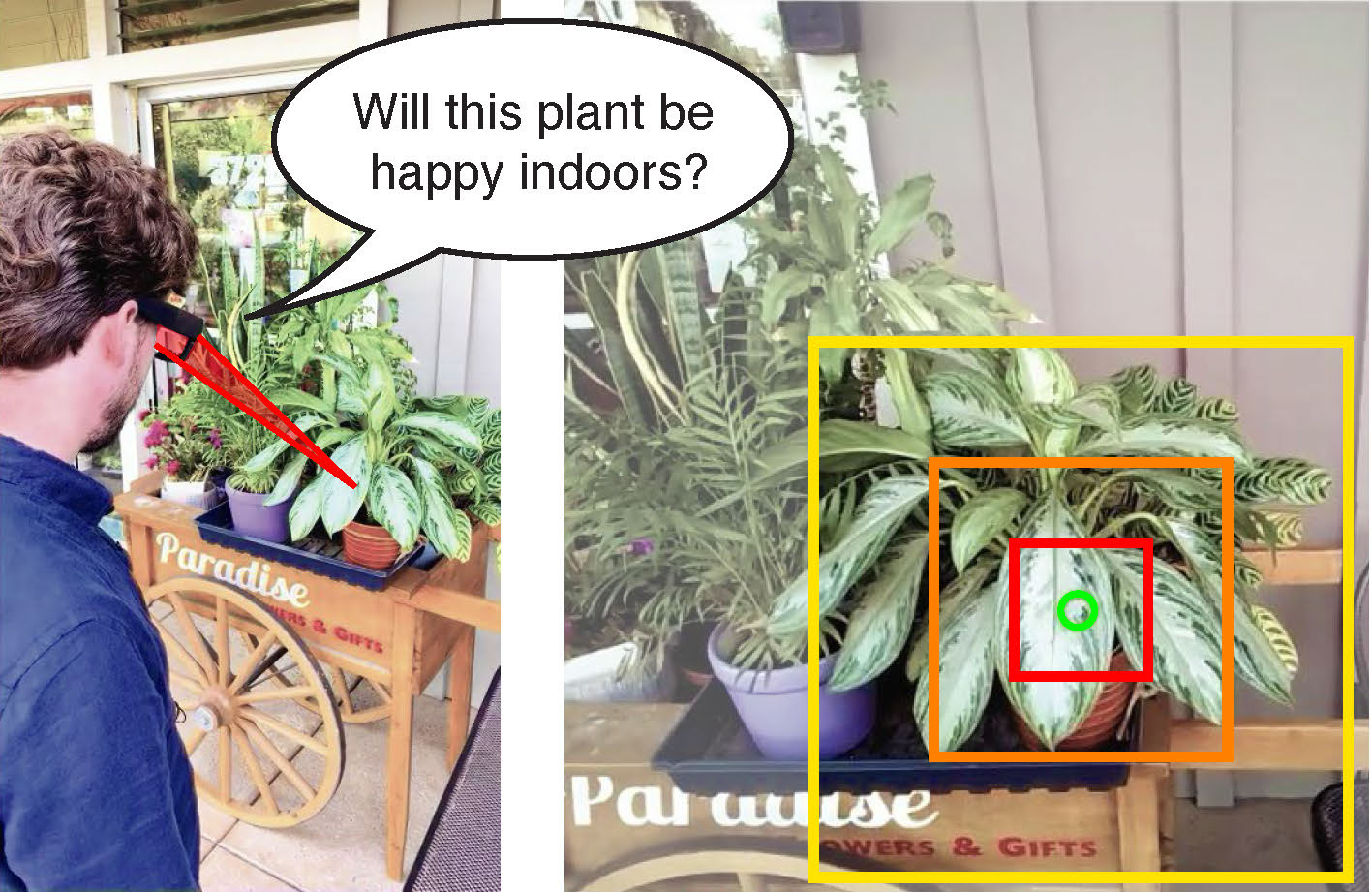

Augmenting Human Capabilities using Gaze-contingent Contextual AI for Smart Eyewear

Read More

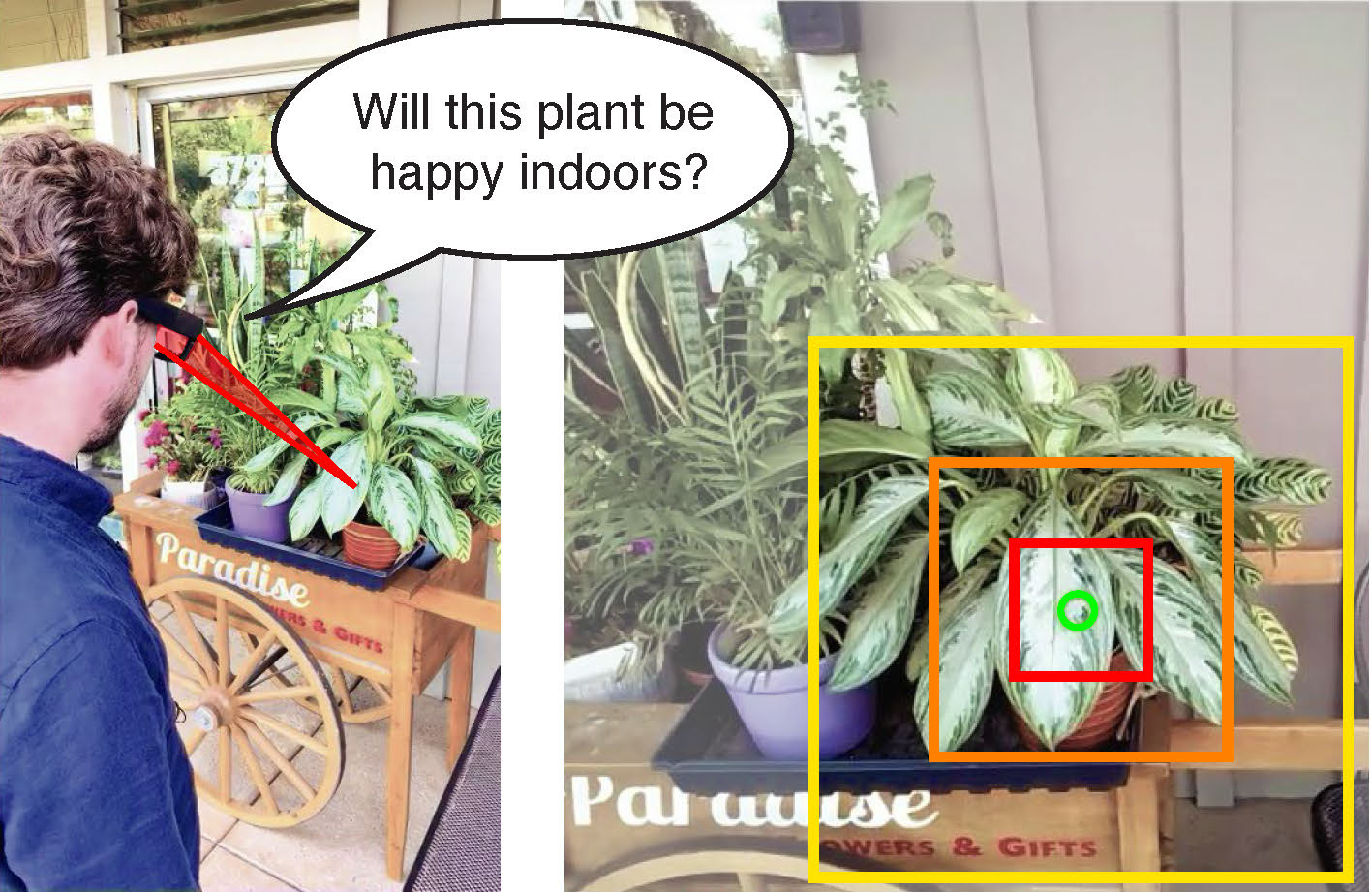

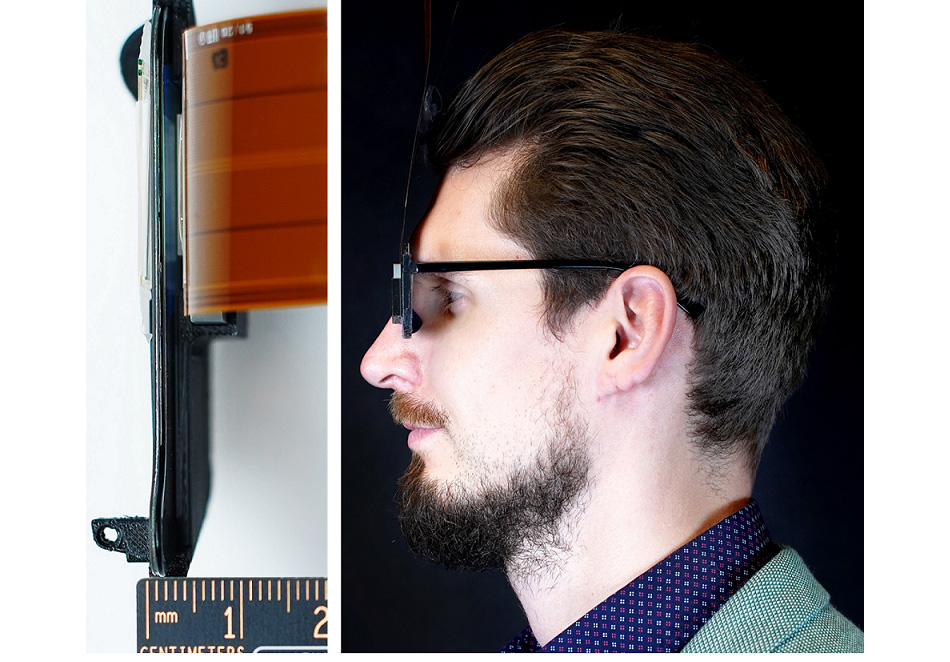

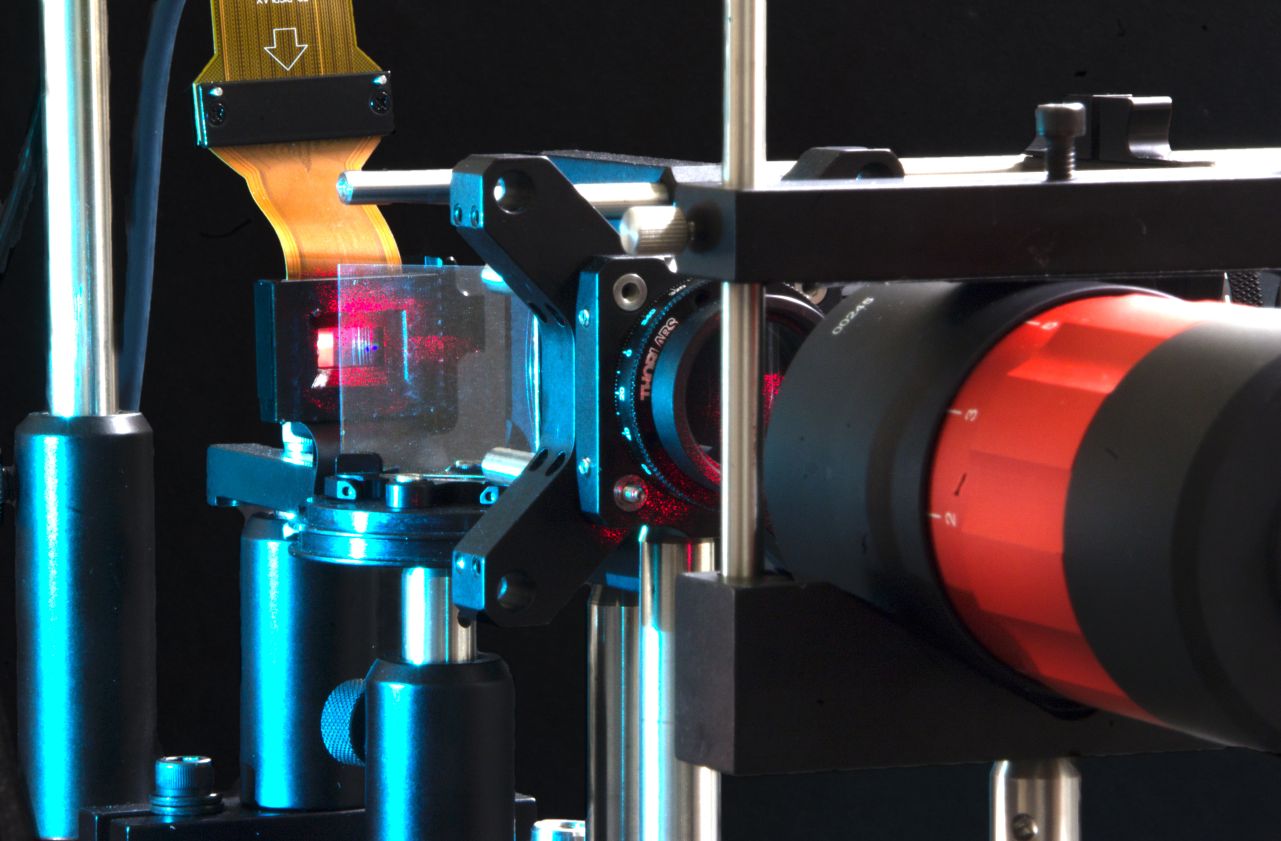

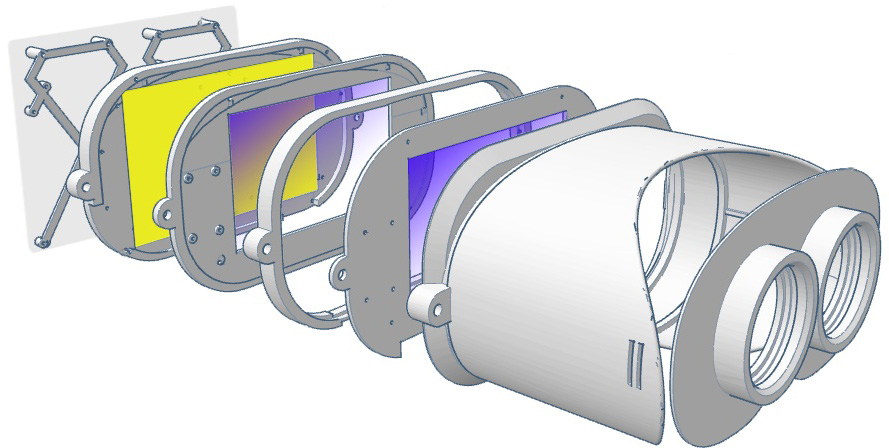

A near-eye display design that pairs inverse-designed metasurface waveguides with AI-driven holographic displays to enable full-colour 3D augmented reality from a compact glasses-like form factor. Photo by Andrew Brodhead

Read More

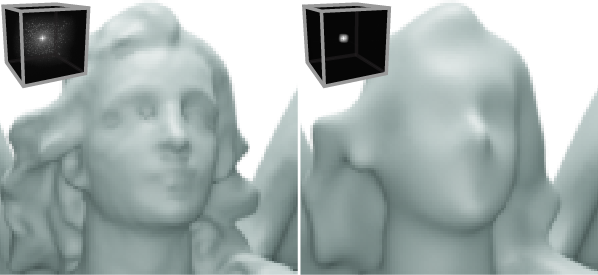

Inclusion of parallax cues in CGH rendering plays a crucial role in enhancing perceptual realism.

Read More

Tutorial and survey paper on diffusion models for 2D, 3D, and 4D generation.

Read More

Controllable text-to-3D scene generation with intuitive user inputs.

Read More

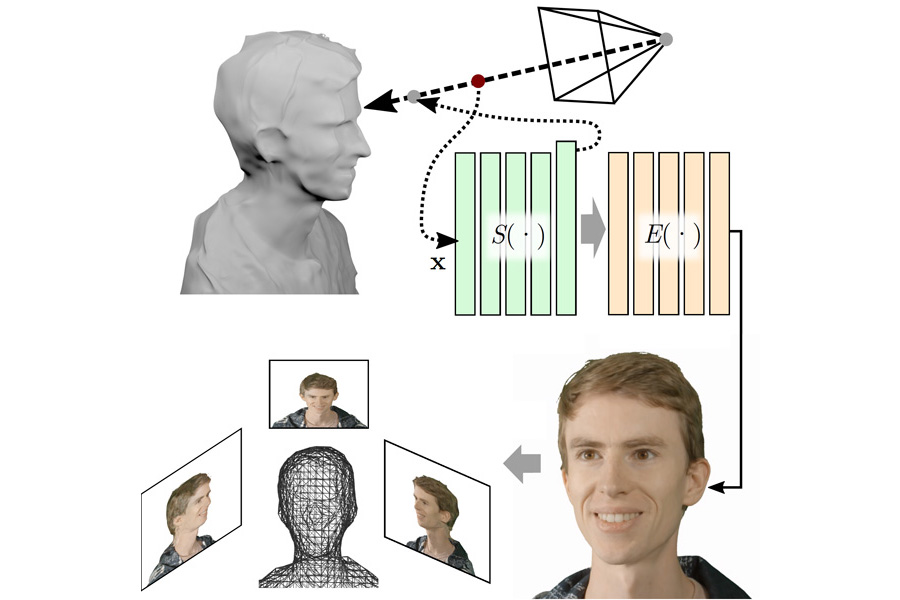

Unconditional Generation of Relightable 3D Human Faces

Read More

Efficient 3D Articulated Human Generation with Layered Surface Volumes

Read More

A diffusion model for low-light image reconstruction for text recognition.

Read More

Existing perceptual models used in foveated graphics neglect the effects of visual attention distribution. We introduce the first attention-aware model of contrast sensitivity and motivate the development of future foveation

Read More

Generative Novel View Synthesis with 3D-Aware Diffusion Models

Read More

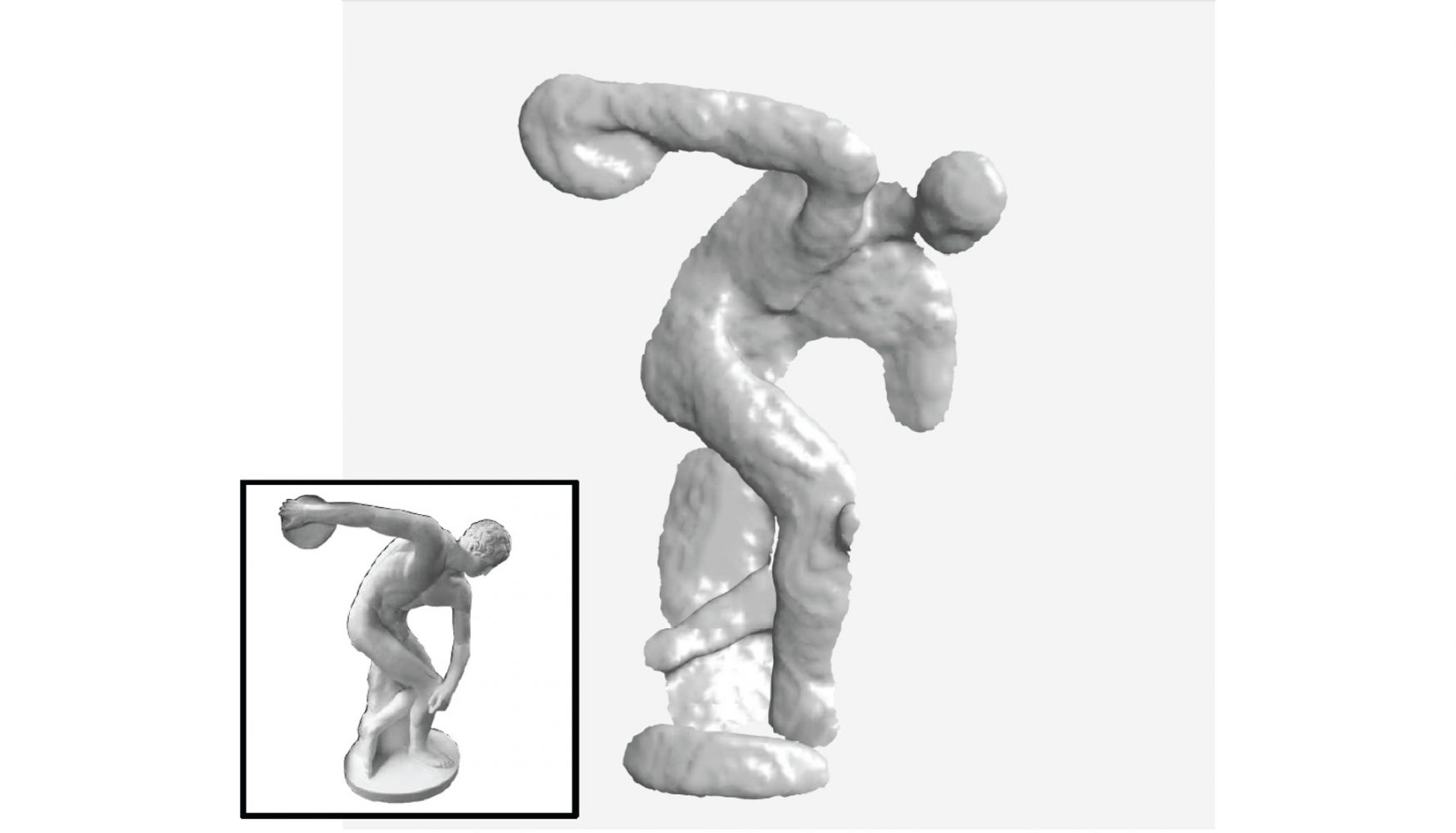

State-of-the-art 3D diffusion model for unconditional object generation.

Read More

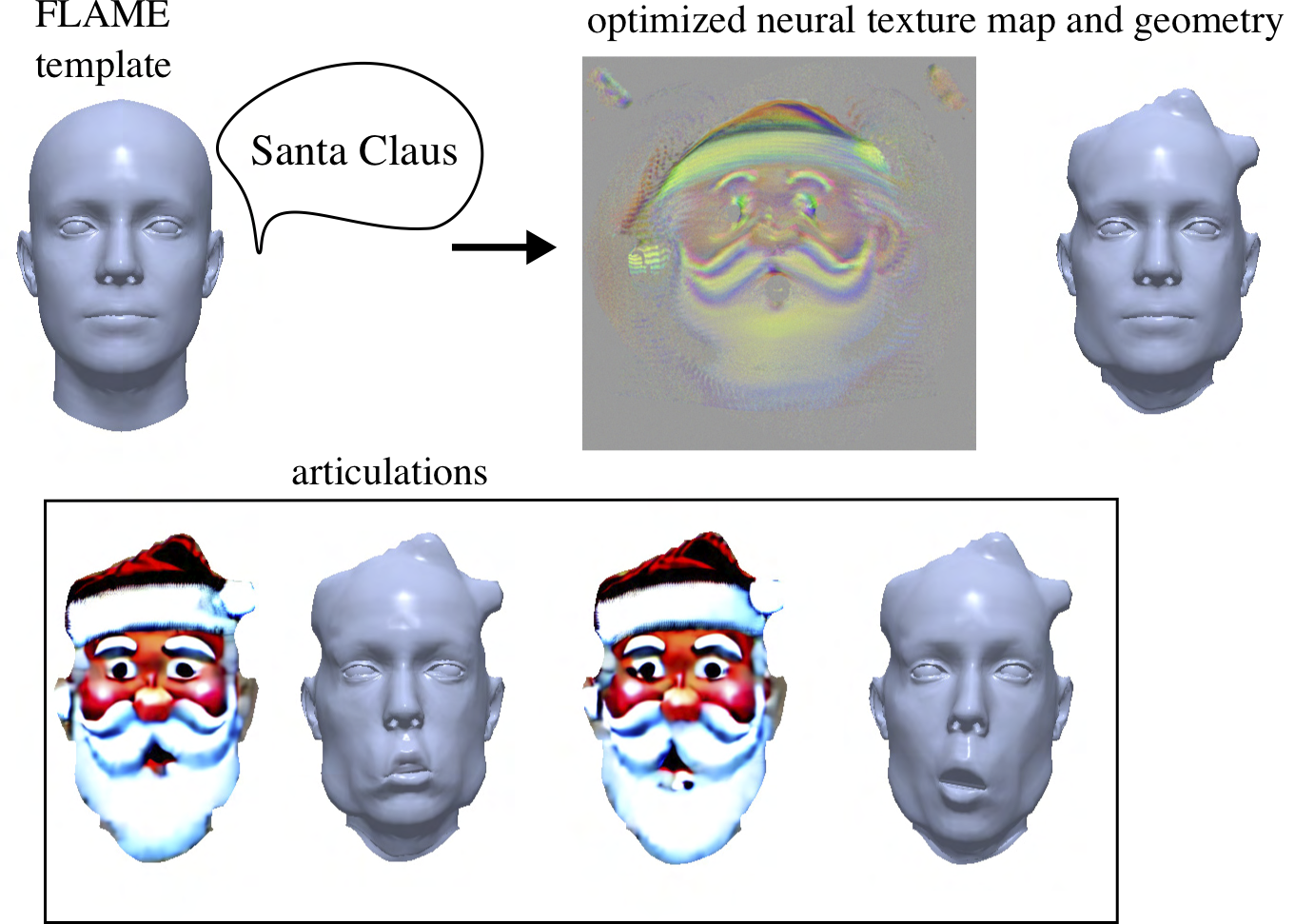

Generation of articulable head avatars from text prompts using diffusion models.

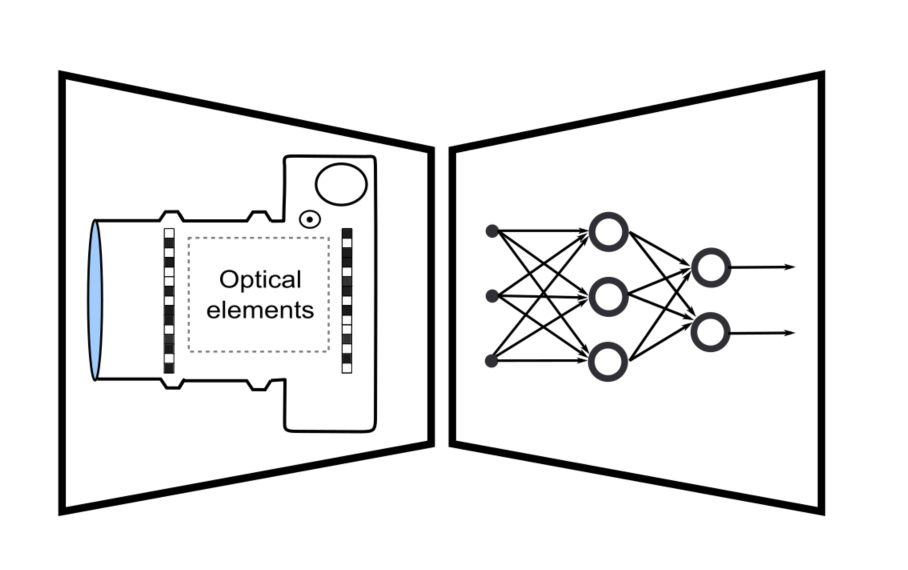

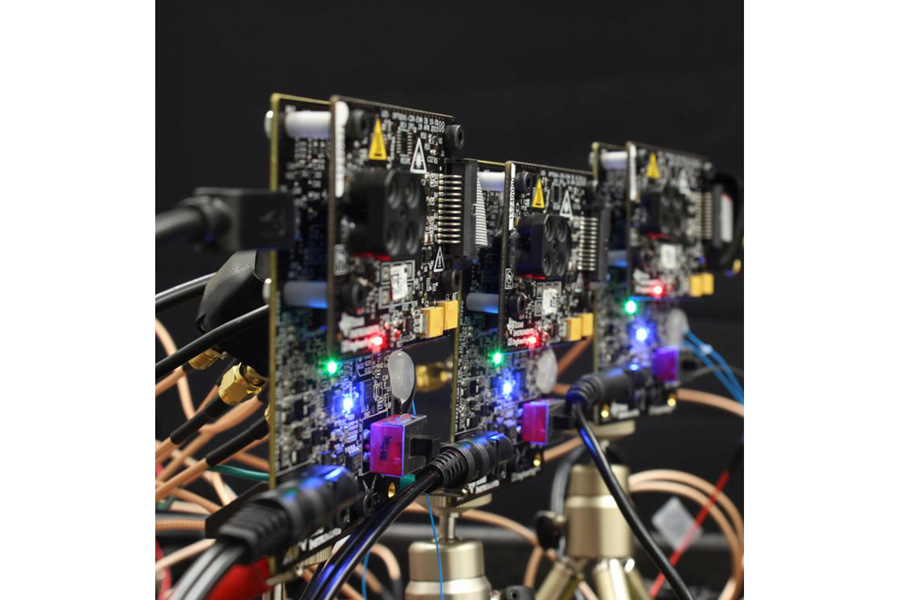

Read More

In-pixel recurrent neural networks for end-to-end-optimized perception with neural sensors.

Read More

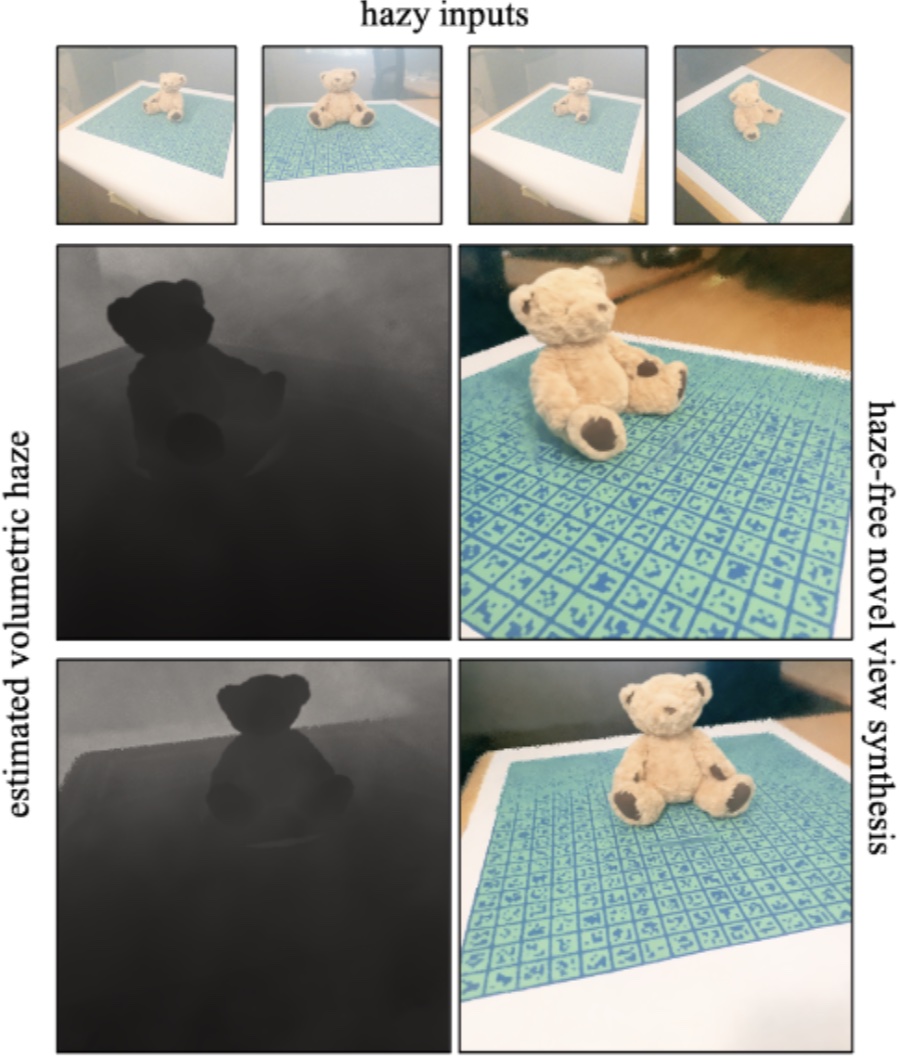

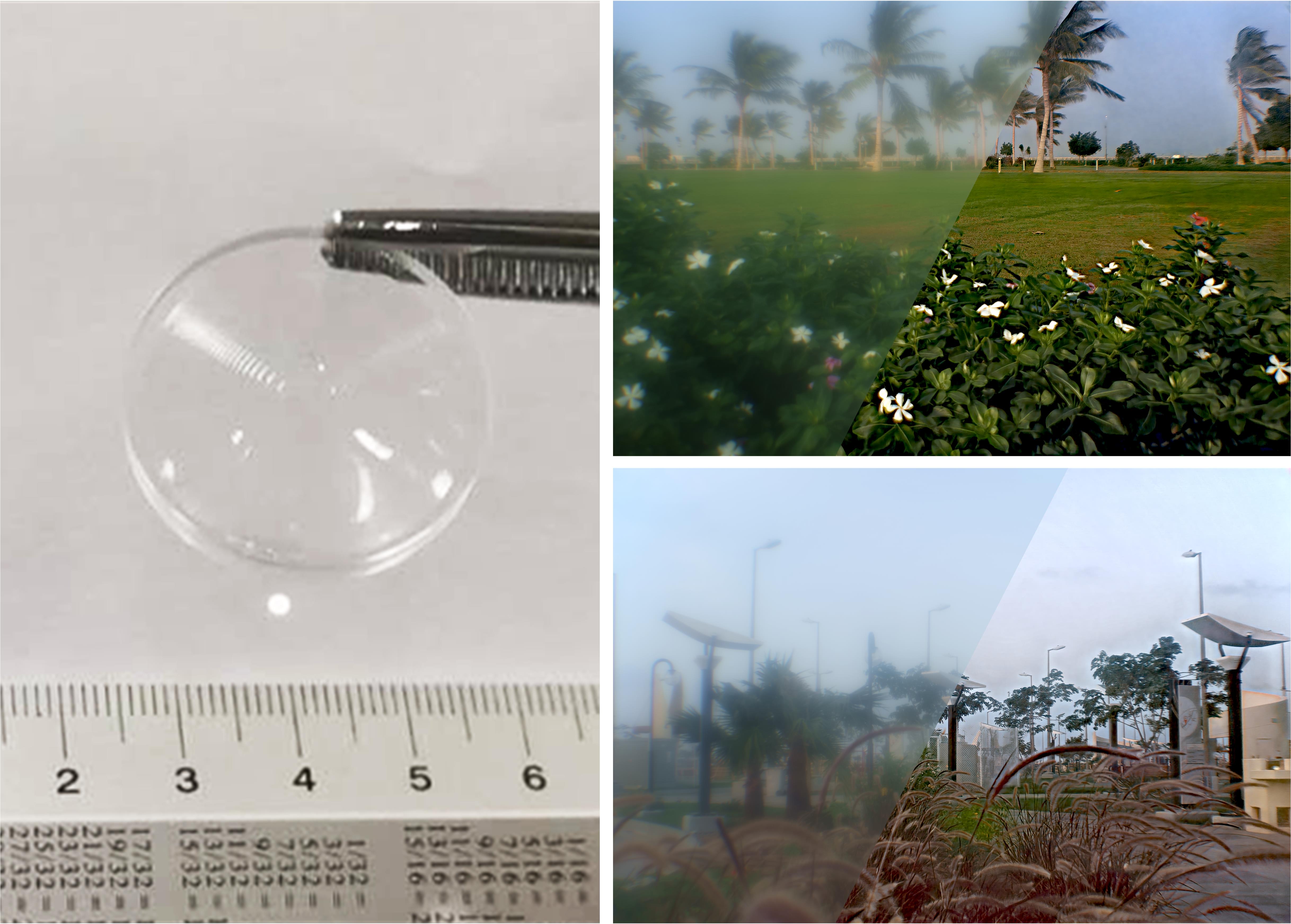

Multiple Image Haze Removal and 3D Shape Reconstruction using Neural Radiance Fields.

Read More

Generating different realizations of a single 3D scene from a few images.

Read More

Single-image-conditioned “infinite nature” approach with conditional diffusion models.

Read More

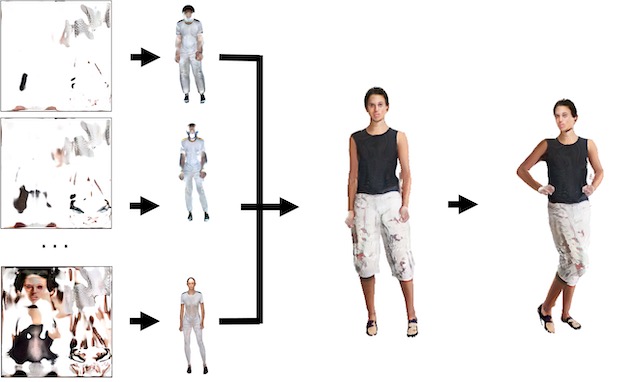

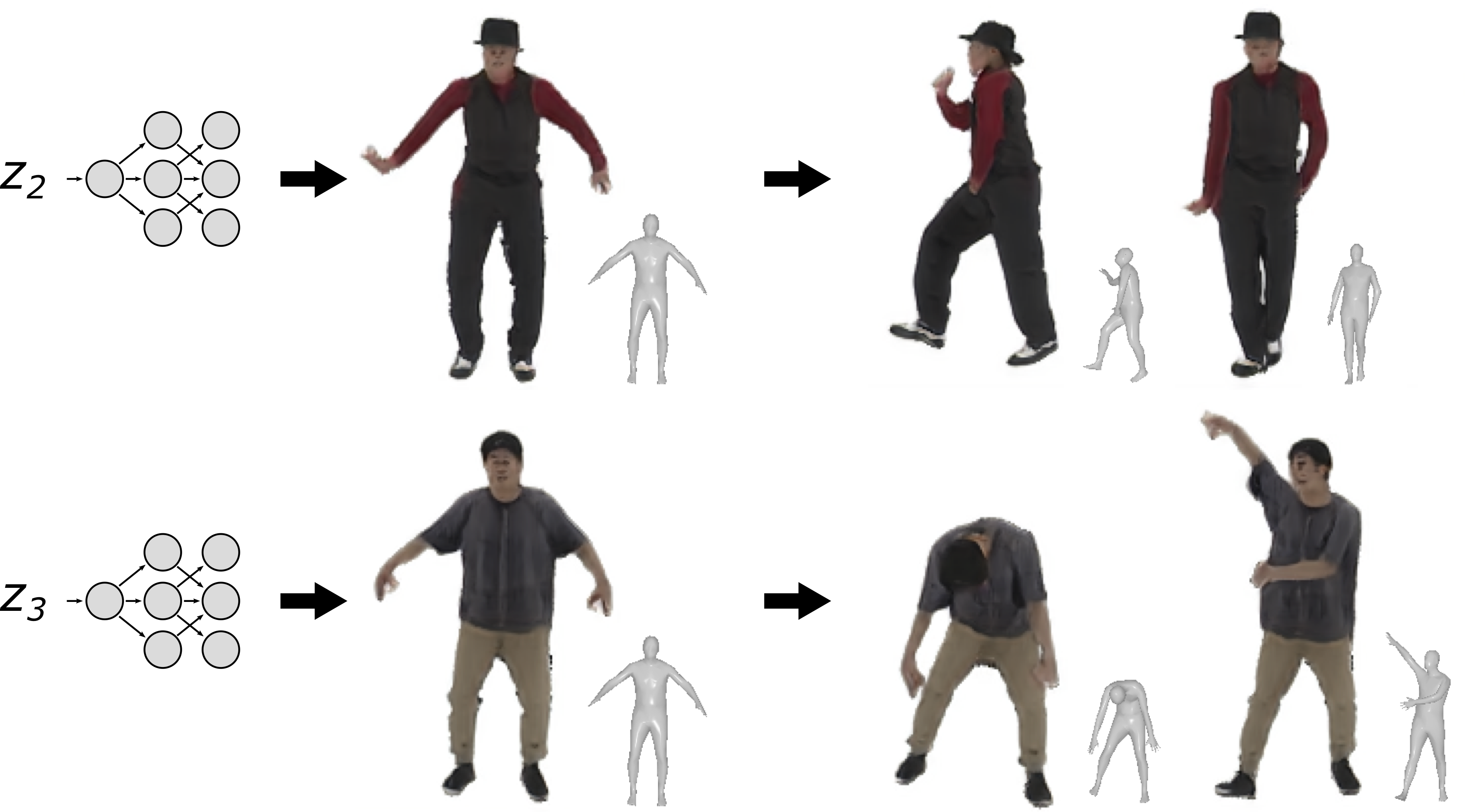

Unconditional generation of editable radiance field representations of human bodies.

Read More

Solving difficult inverse problems in physics using fast GNNs and generative priors.

Read More

A flexible framework for holographic near-eye displays with fast heavily-quantized spatial light modulators.

Read More

An ultra-thin VR display using computer-generated holography.

Read More

Amortized Inference of Poses for Ab Initio Reconstruction of 3D Molecular Volumes from Real Cryo-EM Images.

Read More

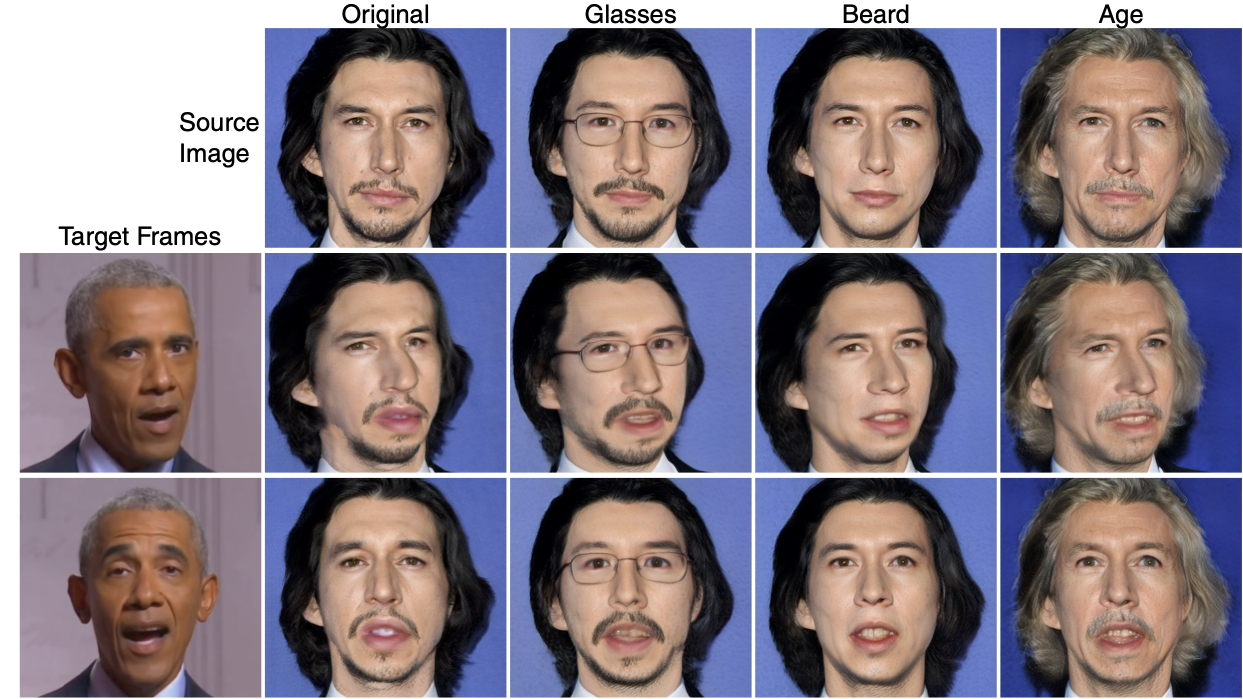

Portrait image animation and attribute editing using 3D GAN inversion.

Read More

3D GAN for photorealistic, multiview consistent, and shape-aware image synthesis.

Read More

A new type of neural network with an analytical Fourier spectrum that enables multiscale scene representation.

Read More

Per-pixel exposures implemented on a programmable sensor that find the best tradeoff between denoising short exposures and deblurring long ones.

Read More

Learning Snapshot High-dynamic-range Imaging with Perceptually-based In-pixel Irradiance Encoding. This project won the Best Demo Award at ICCP 2022!

Read More

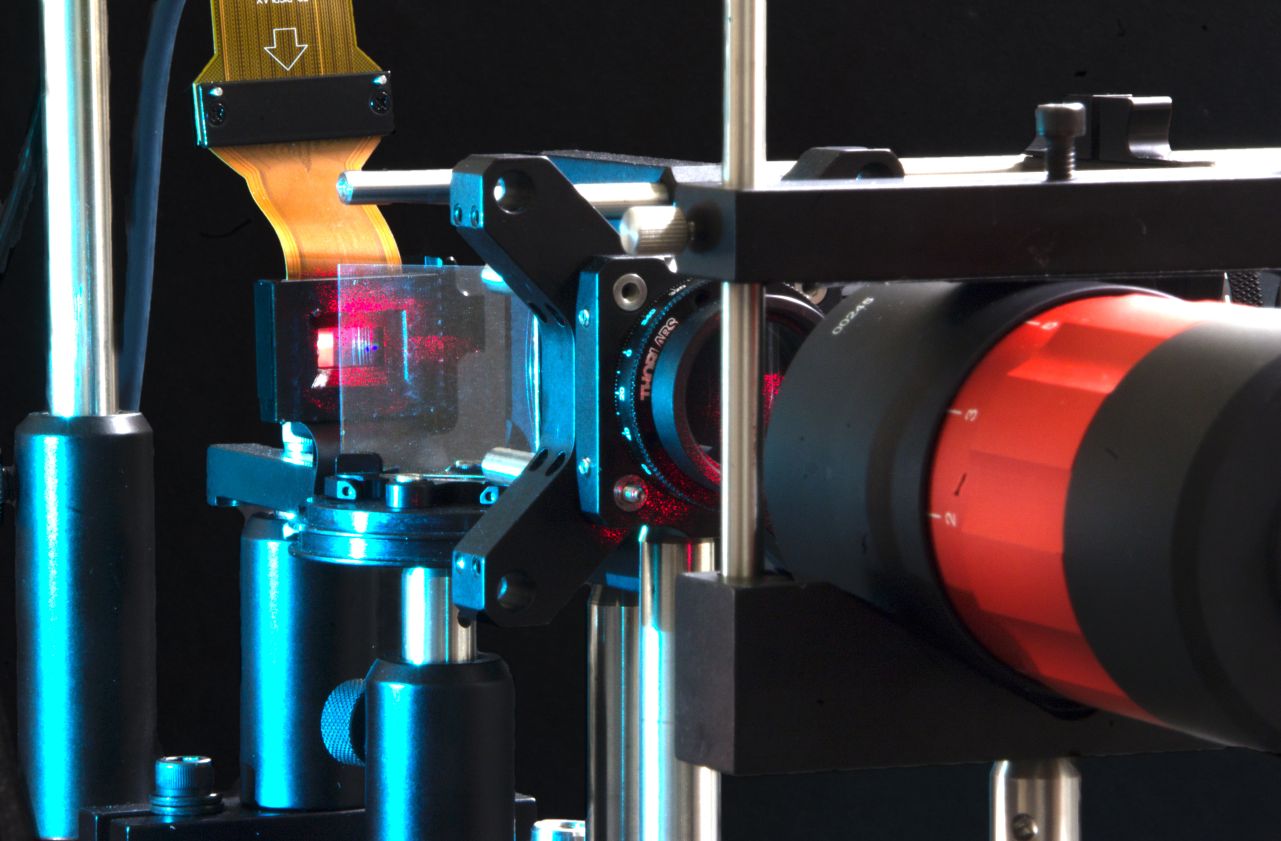

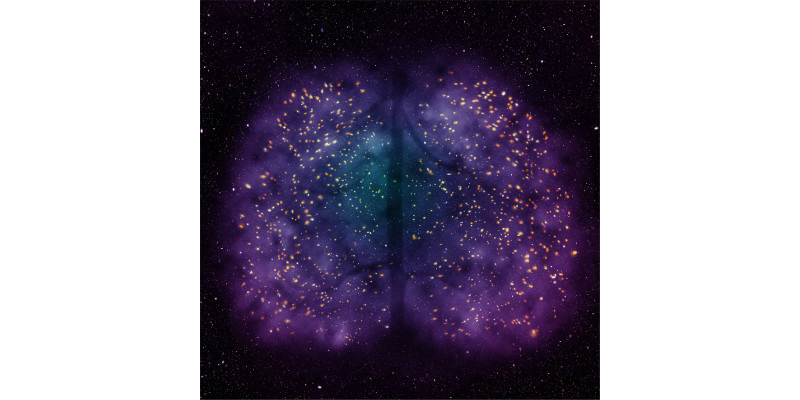

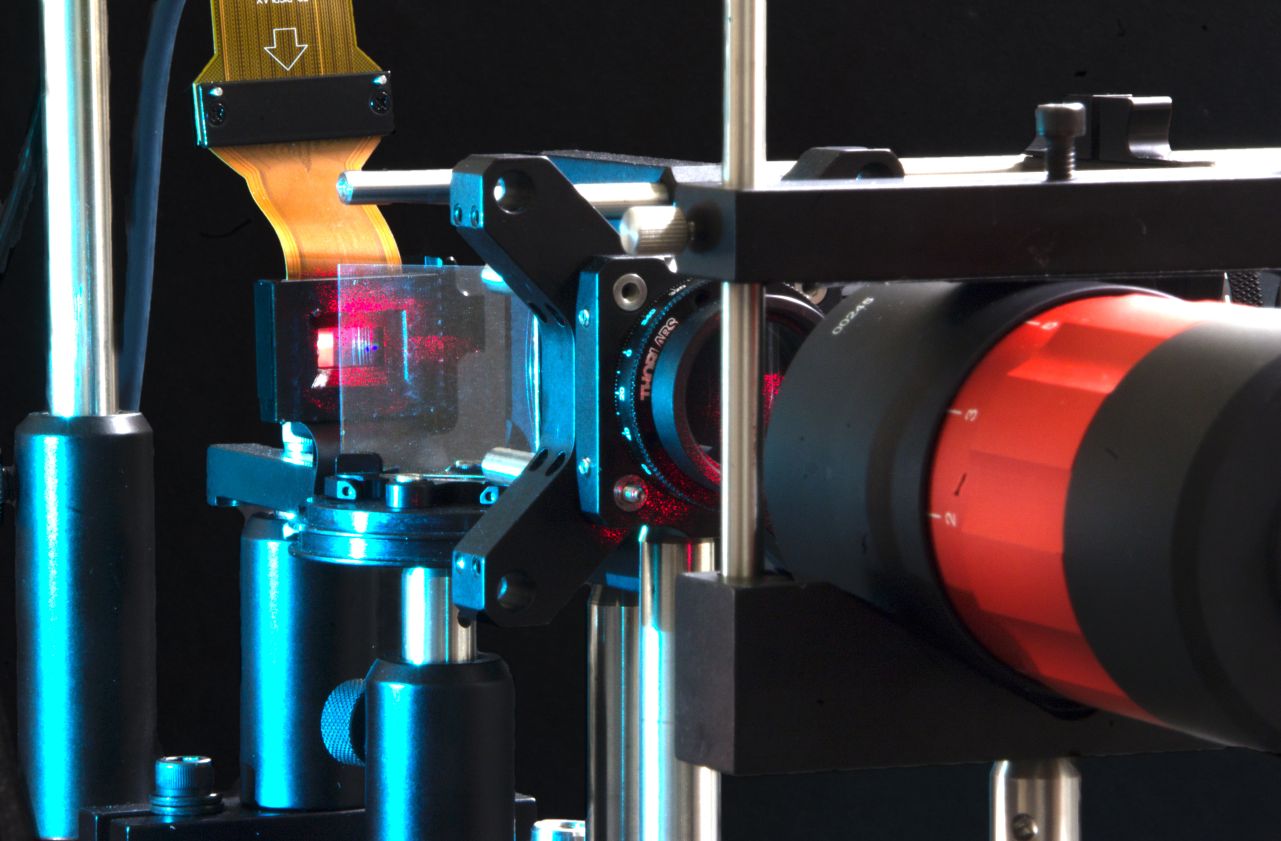

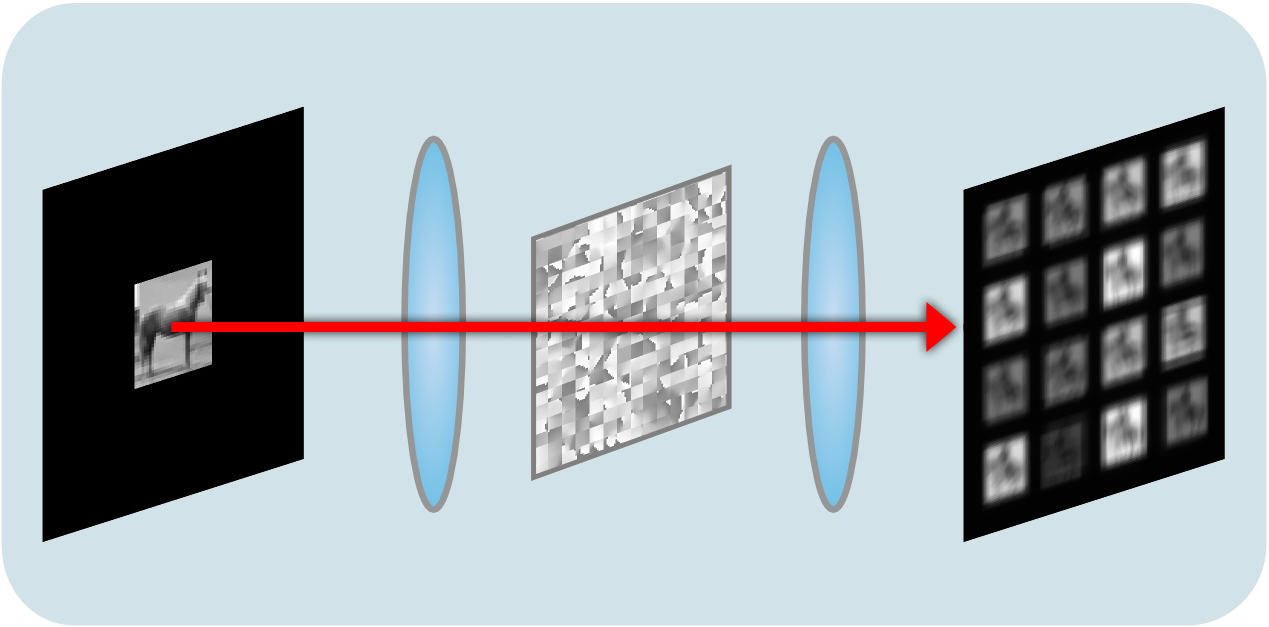

Deep learning multi-shot 3D localization microscopy using hybrid optical–electronic computing.

Read More

An algorithmic framework for optimizing high diffraction orders without optical filtering to enable compact holographic displays.

Read More

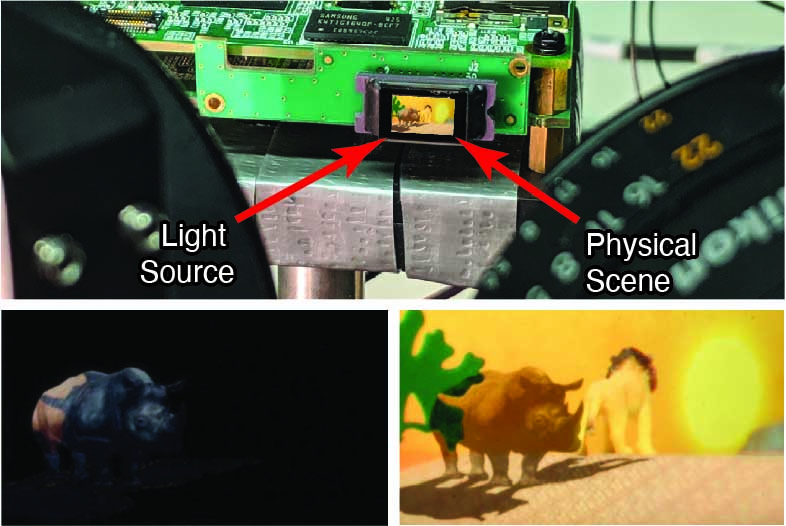

A holographic display combining artificial intelligence with partially coherent light sources to reduce speckle in AR/VR applications.

Read More

TMCA is a new type of coding for compressive imaging systems that use coded apertures. It improves reconstruction quality through better conditioning of the systems’ sensing matrices.

Read More

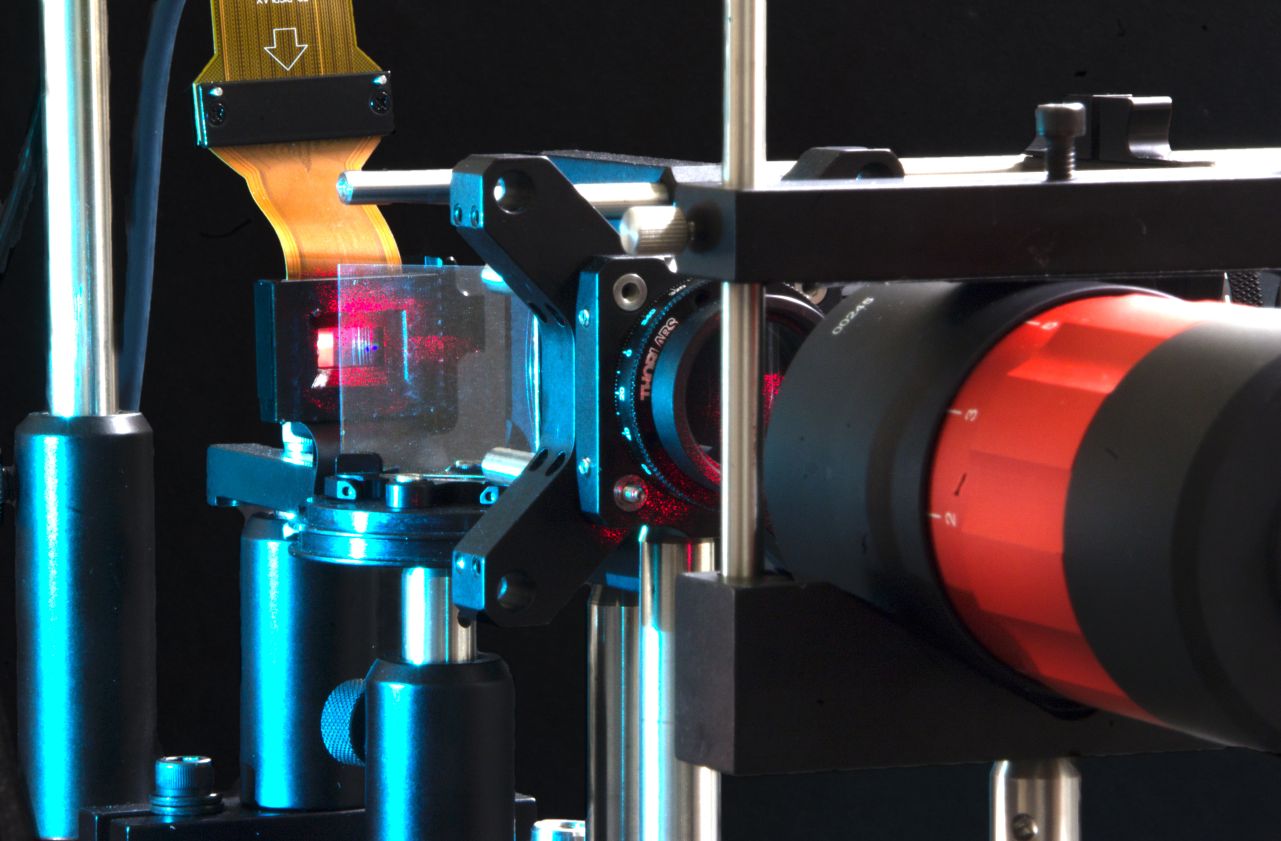

A differentiable camera-calibrated wave propagation model for holographic near-eye displays that enables unprecedented 3D image fidelity.

Read More

Fast training and real-time rendering of neural surface representations using meta learning.

Read More

ACORN is a hybrid implicit-explicit neural representation that enables large-scale fitting of signals such as shapes or images.

Read More

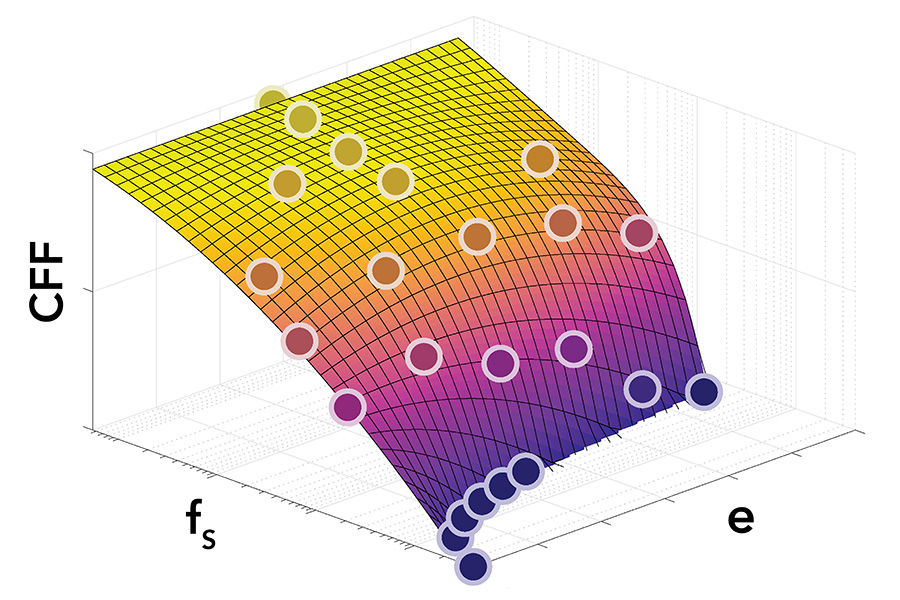

We derive an eccentricity-dependent spatio-temporal model of the visual system to enable the development of new temporally foveated graphics techniques.

Read More

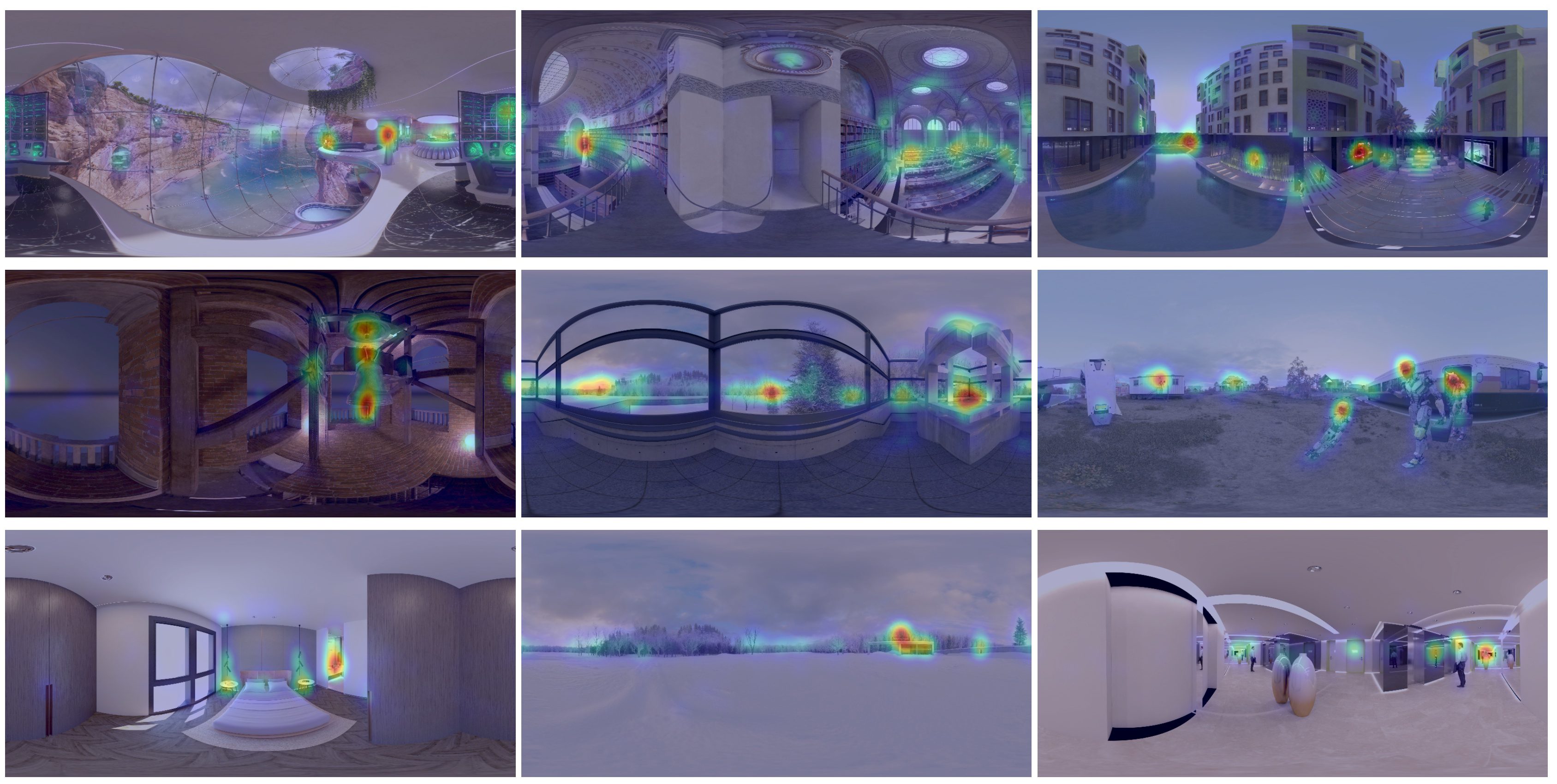

A Generative Model of Realistic Scanpaths for 360° Images

Ultra low-latency and low-power eye tracking for AR/VR beyond 10,000 Hz using event sensors.

Read More

A new GAN architecture and training procedure for 3D-aware image synthesis using periodic implicit neural representations.

Read More

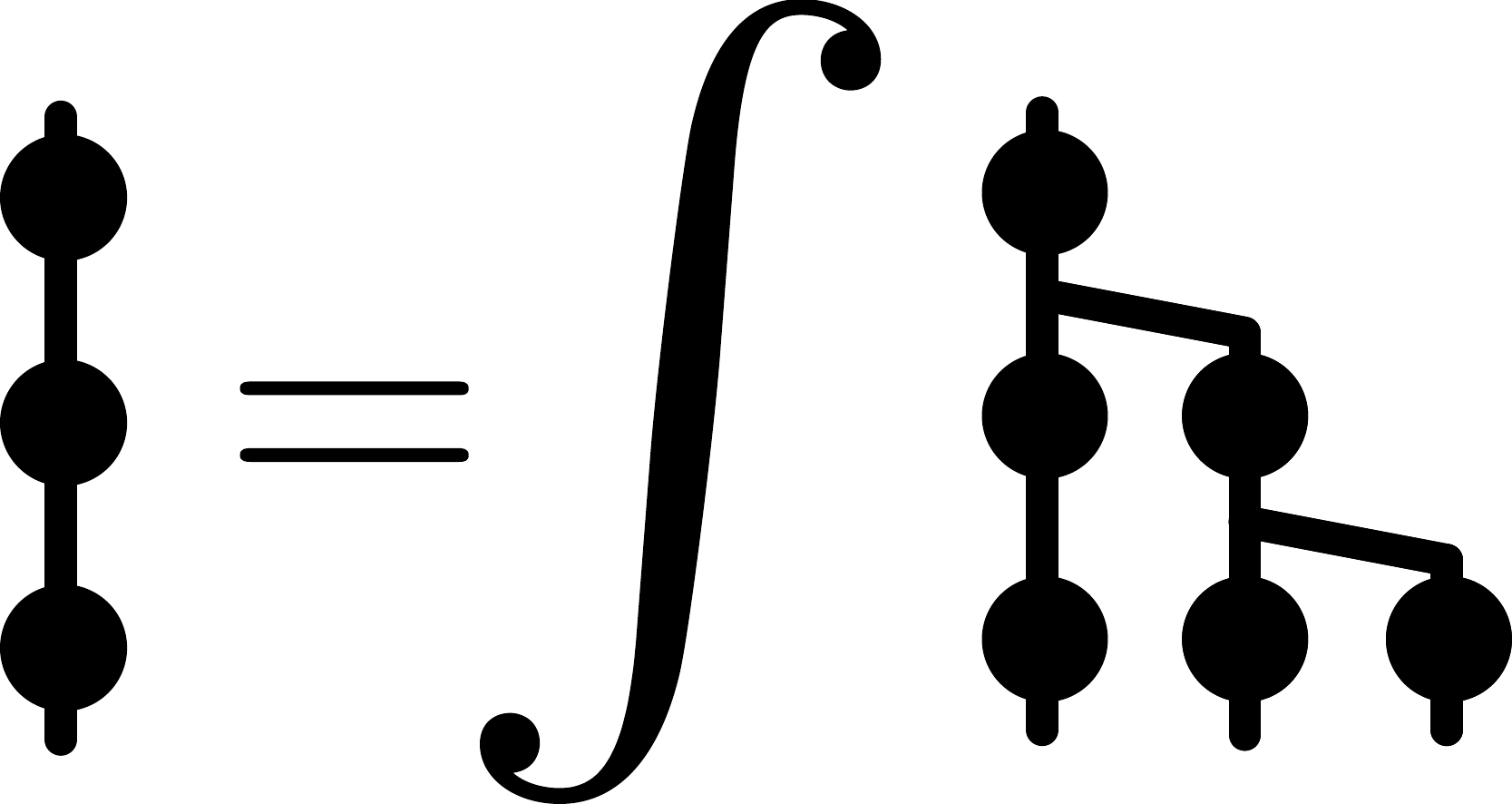

A new framework to integrate signals with implicit neural representations and its application to volume rendering.

Read More

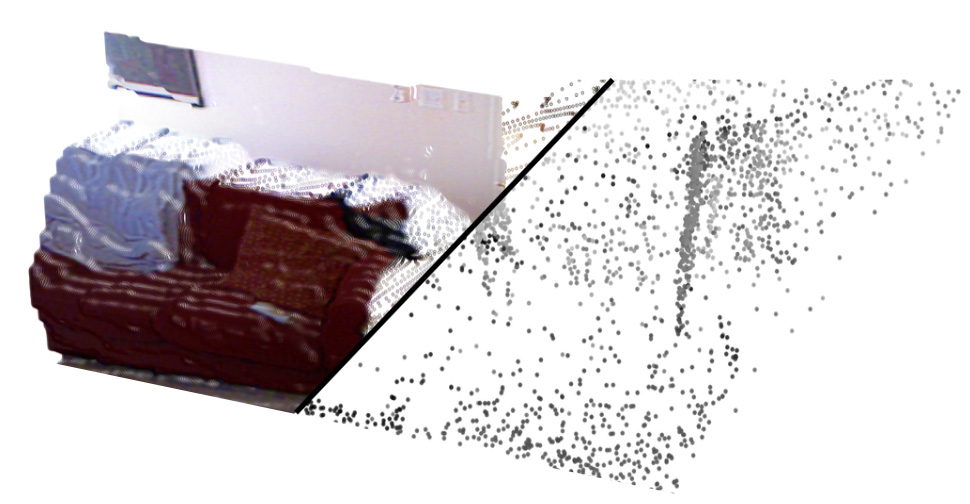

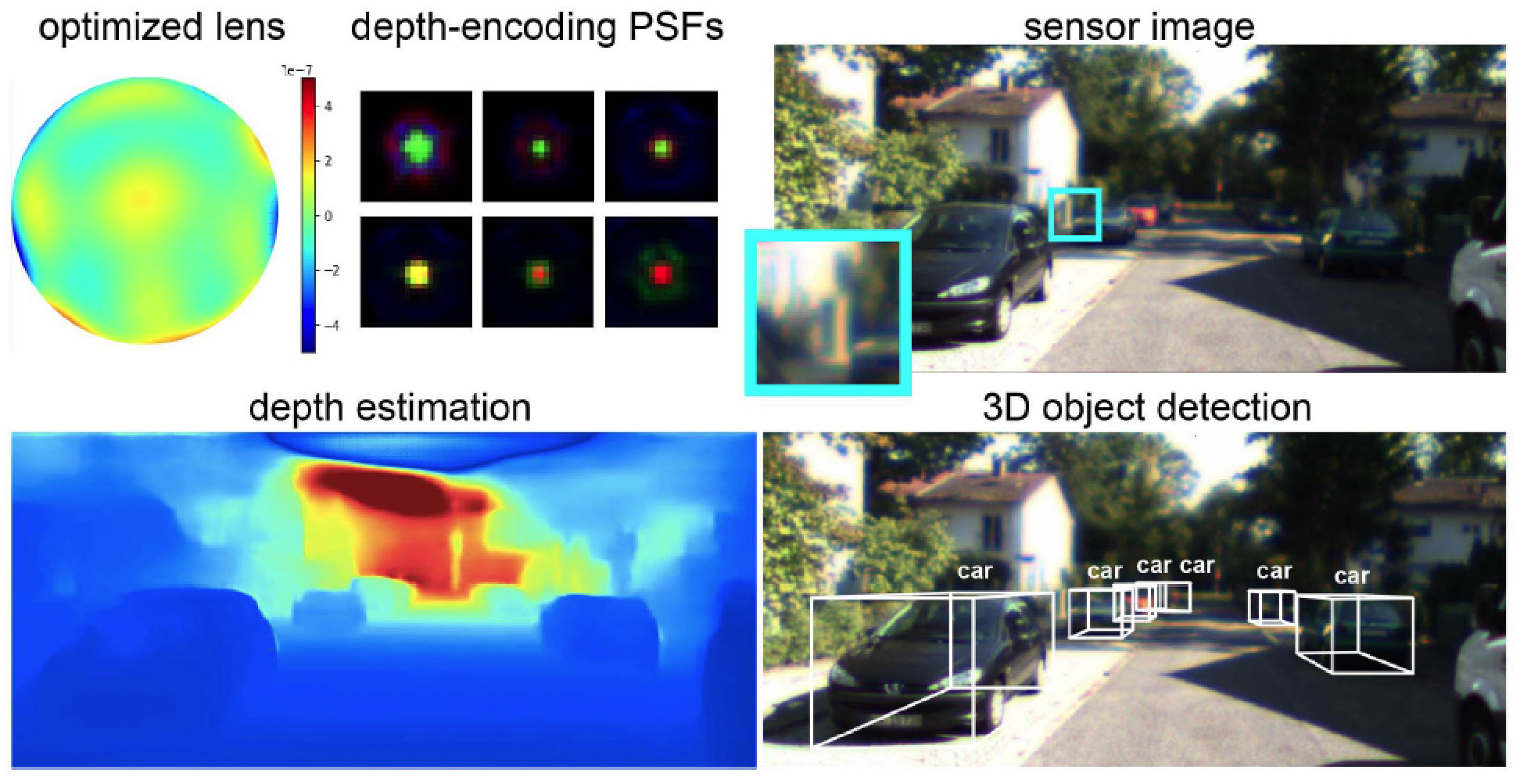

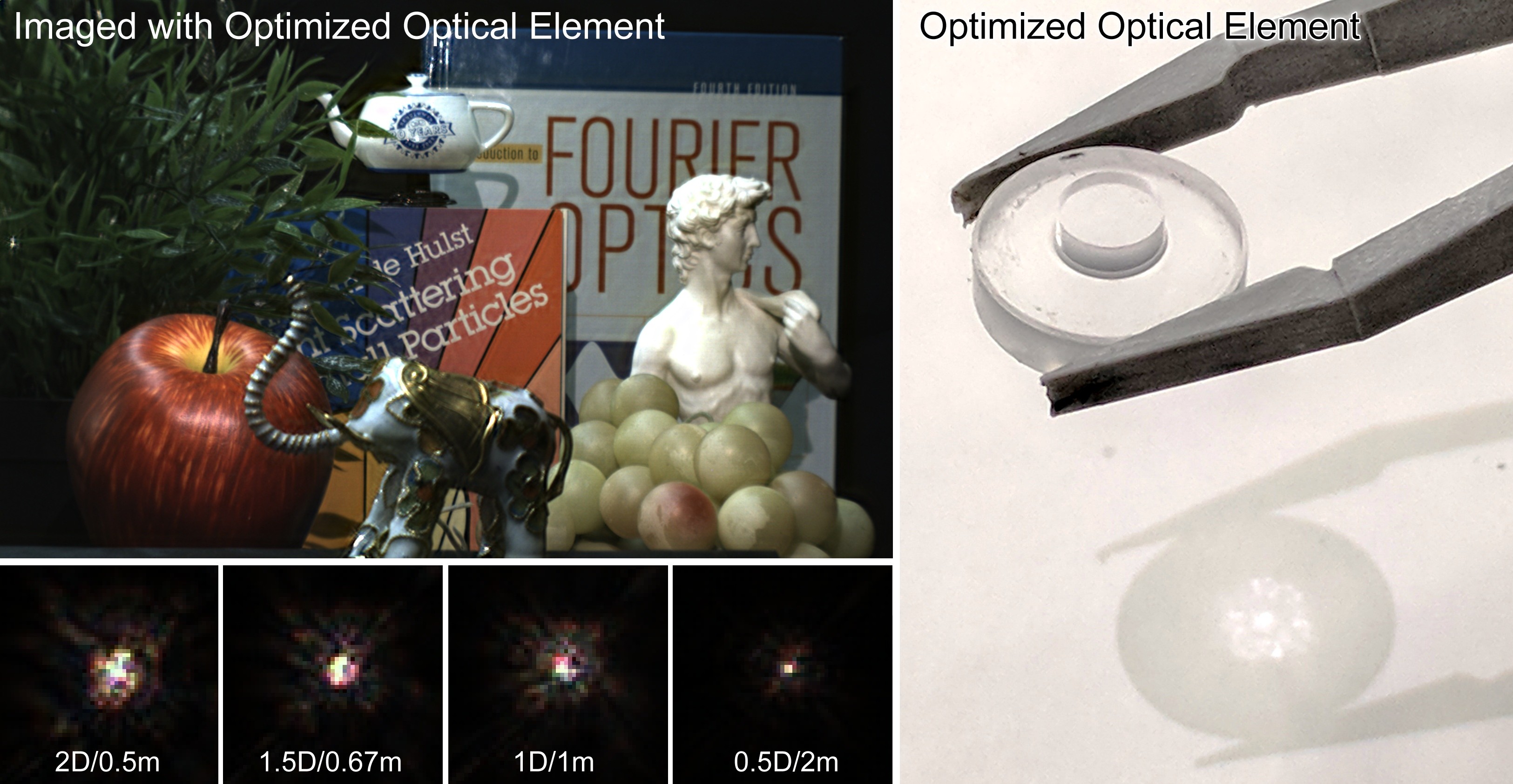

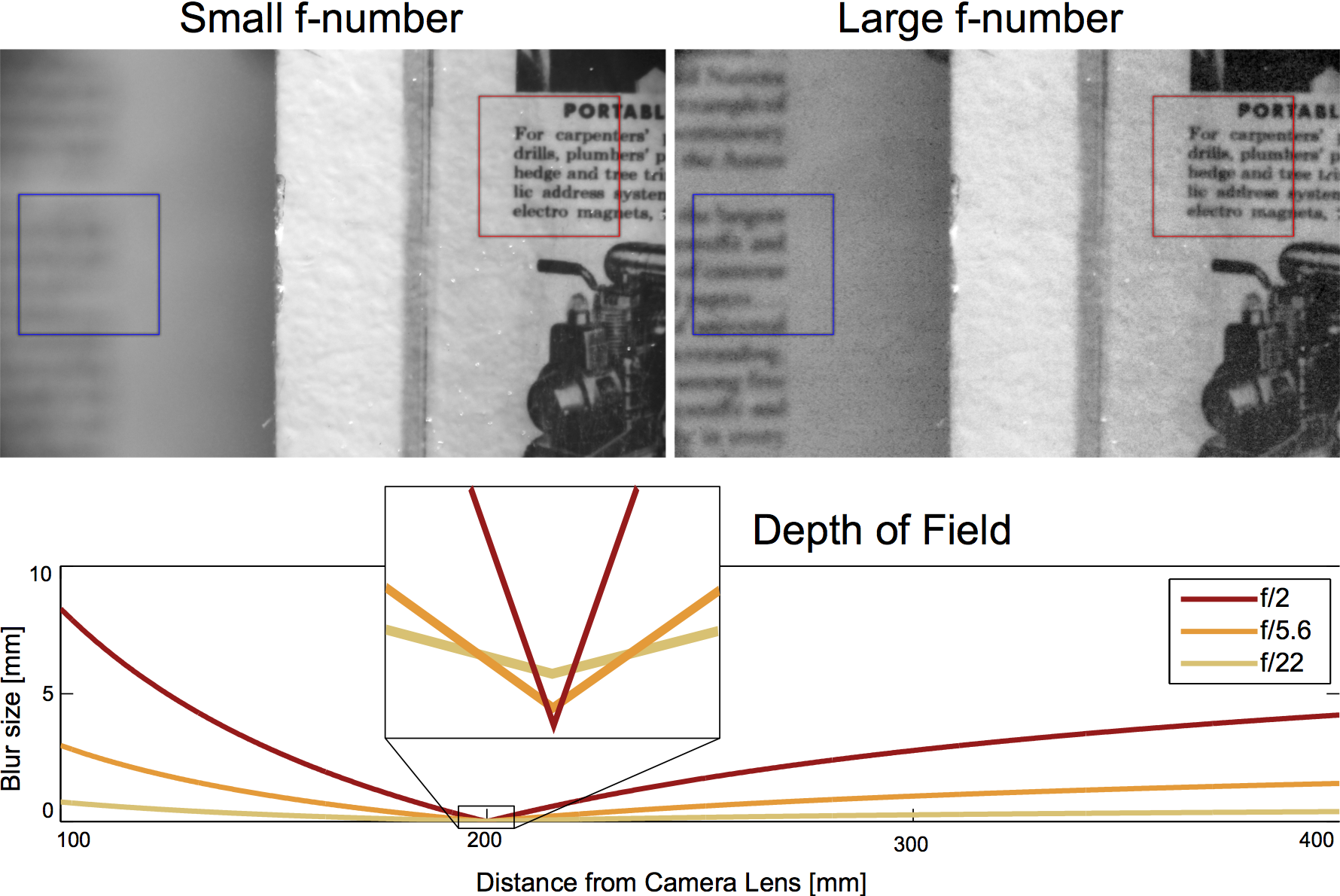

End-to-end optimization of optics and image processing for passive depth estimation.

Read More

A holographic display technology that optimizes image quality for emerging holographic near-eye displays using 2 SLMs and camera-in-the-loop calibration.

Read More

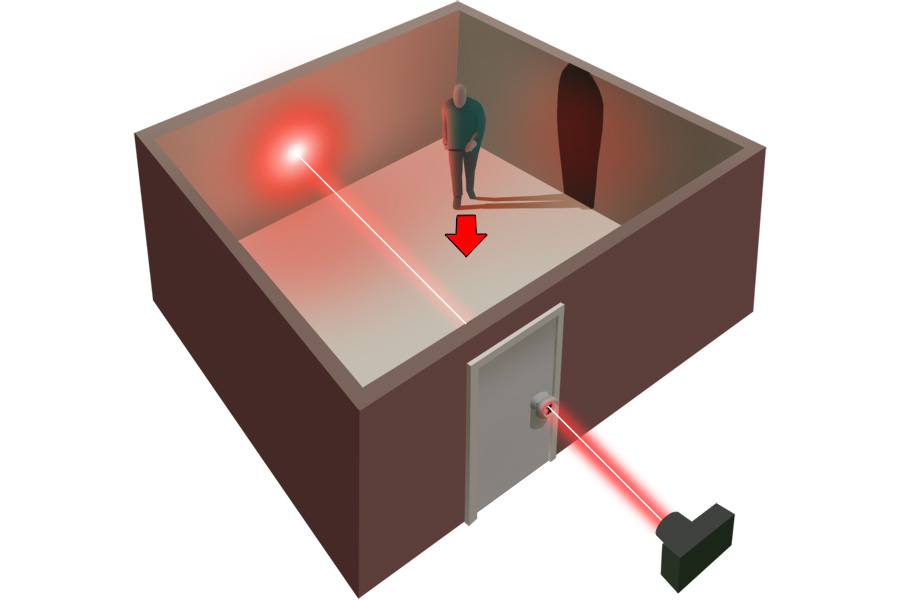

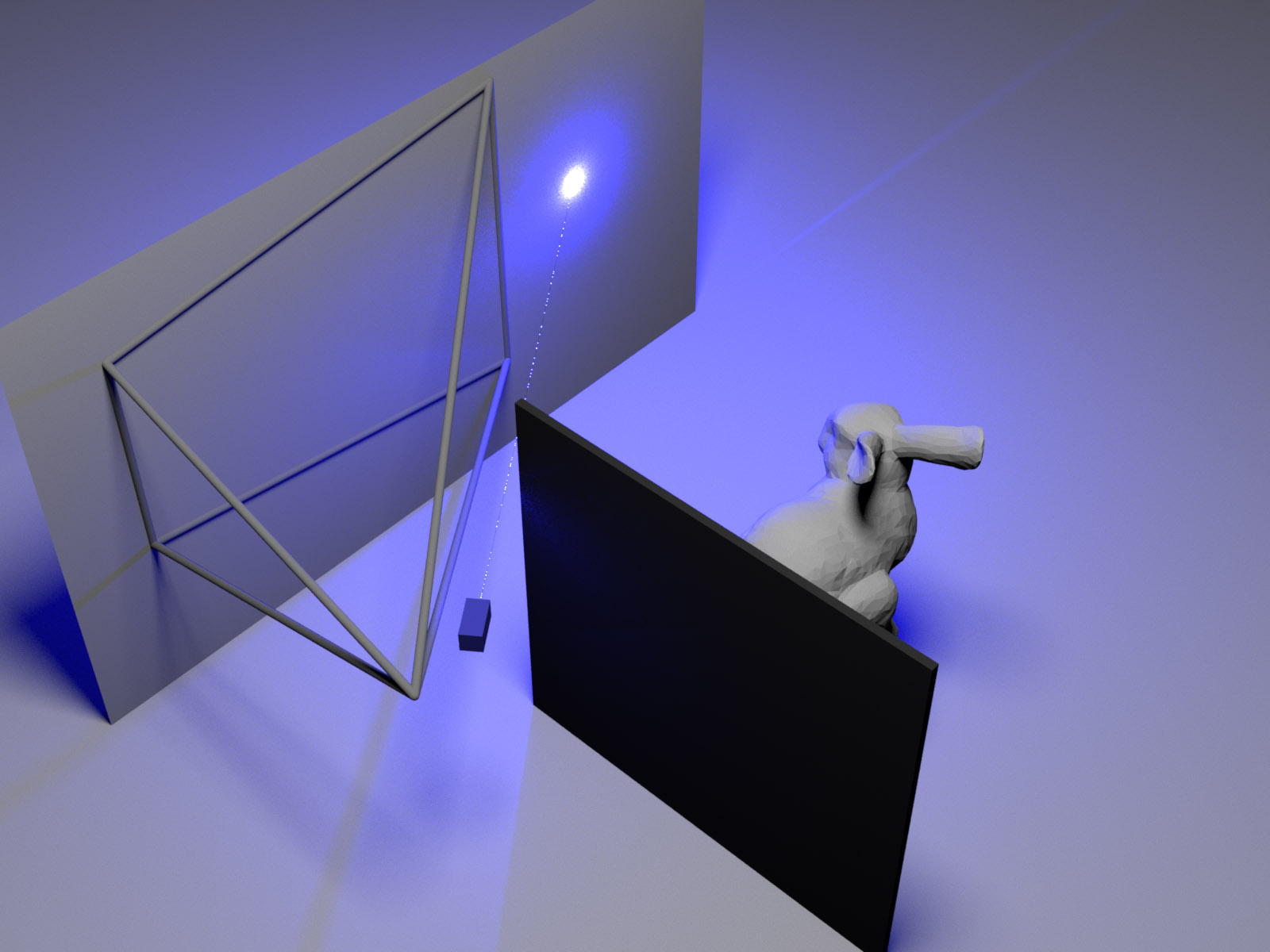

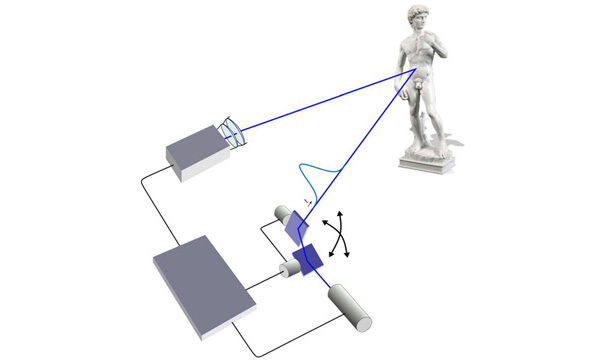

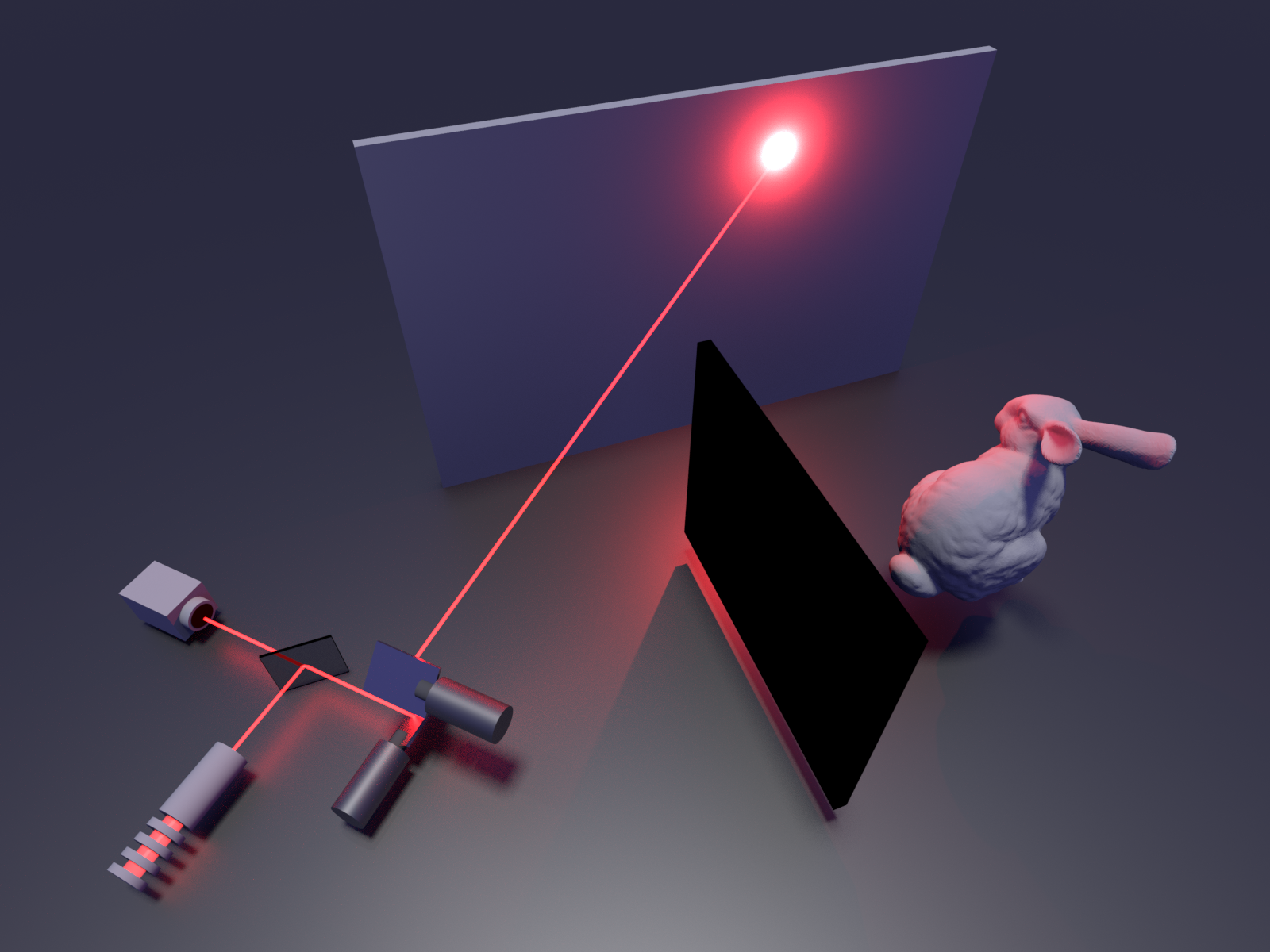

Computational imaging of moving 3D objects through the keyhole of a closed door.

Read More

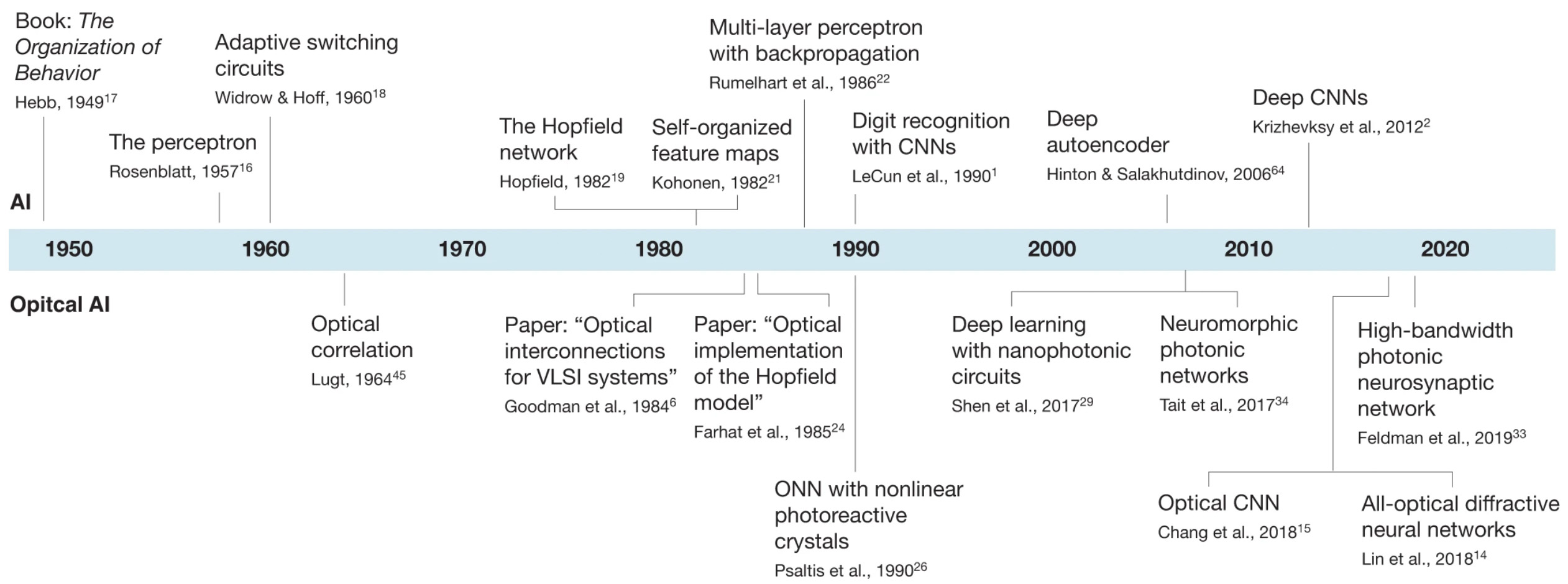

Review article on inference in artificial intelligence with deep optics and photonics.

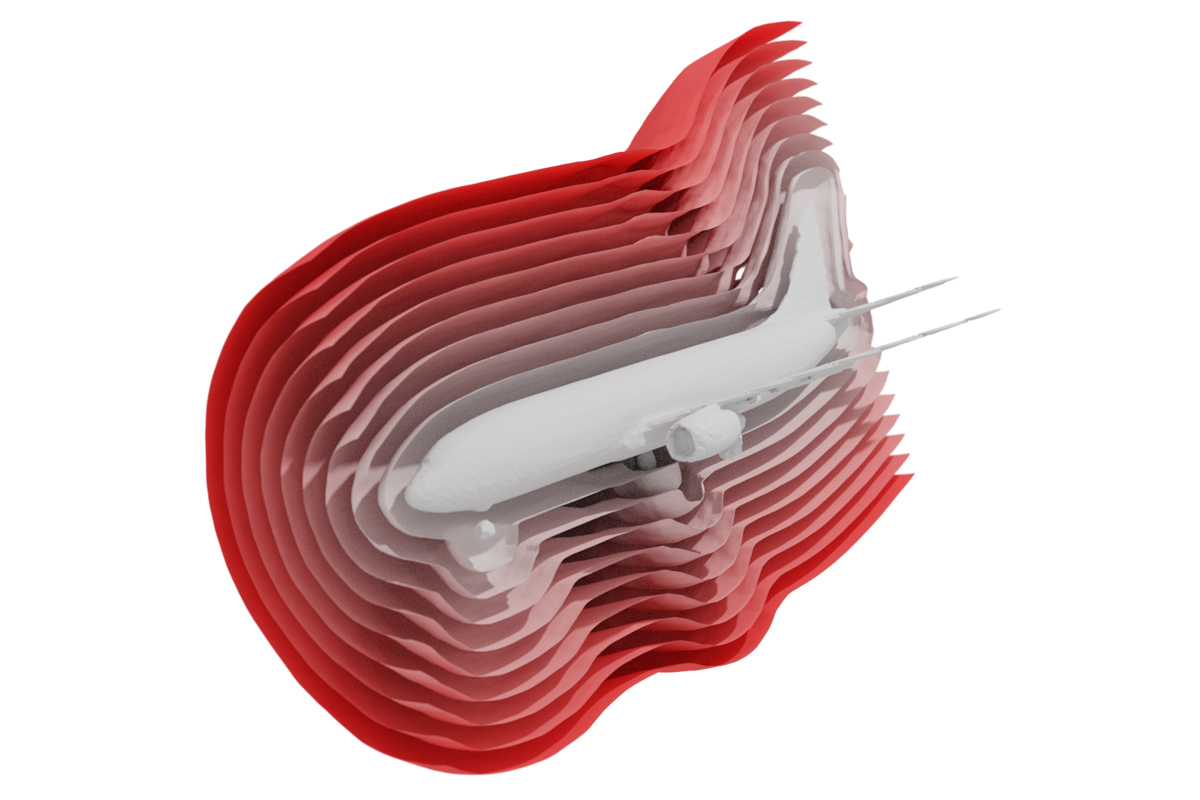

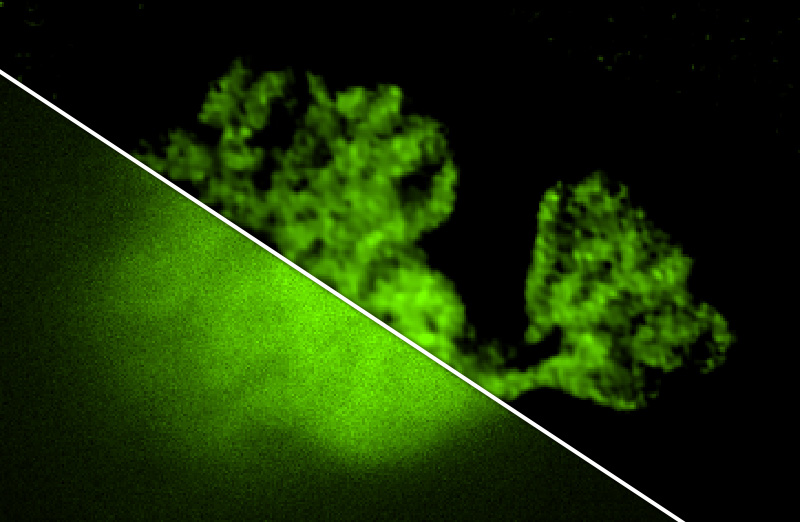

A new computationally efficient method to recover the 3D shape of objects hidden behind a thick scattering layer.

Read More

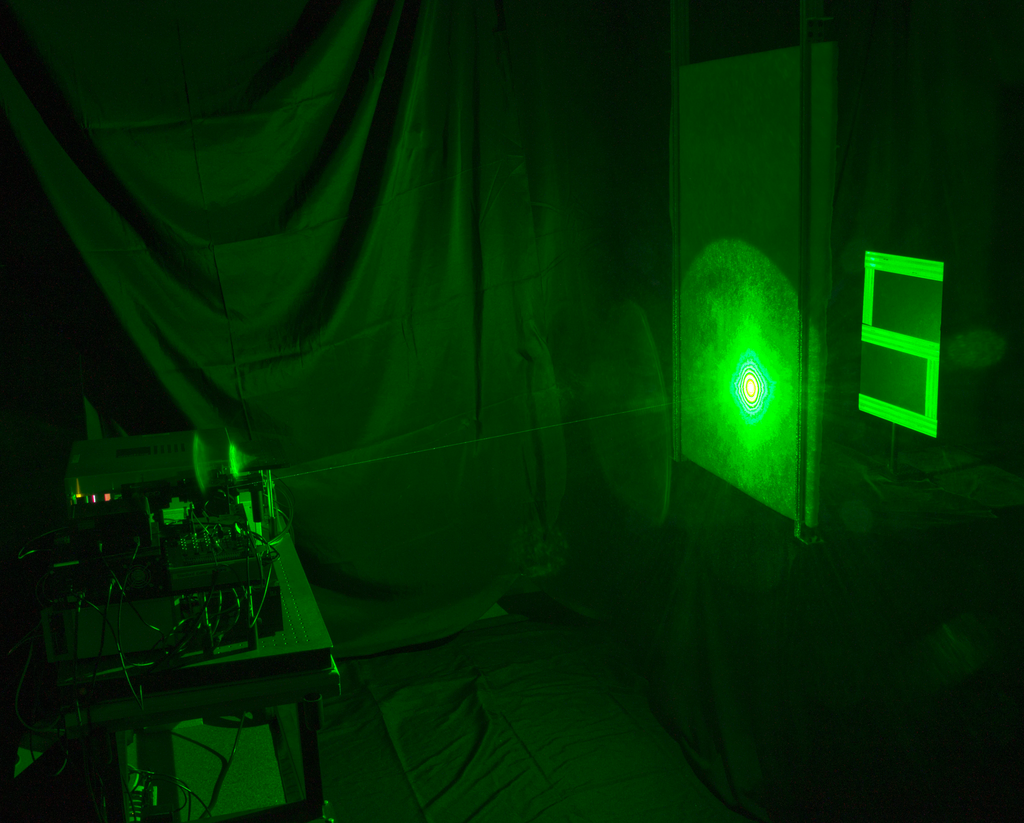

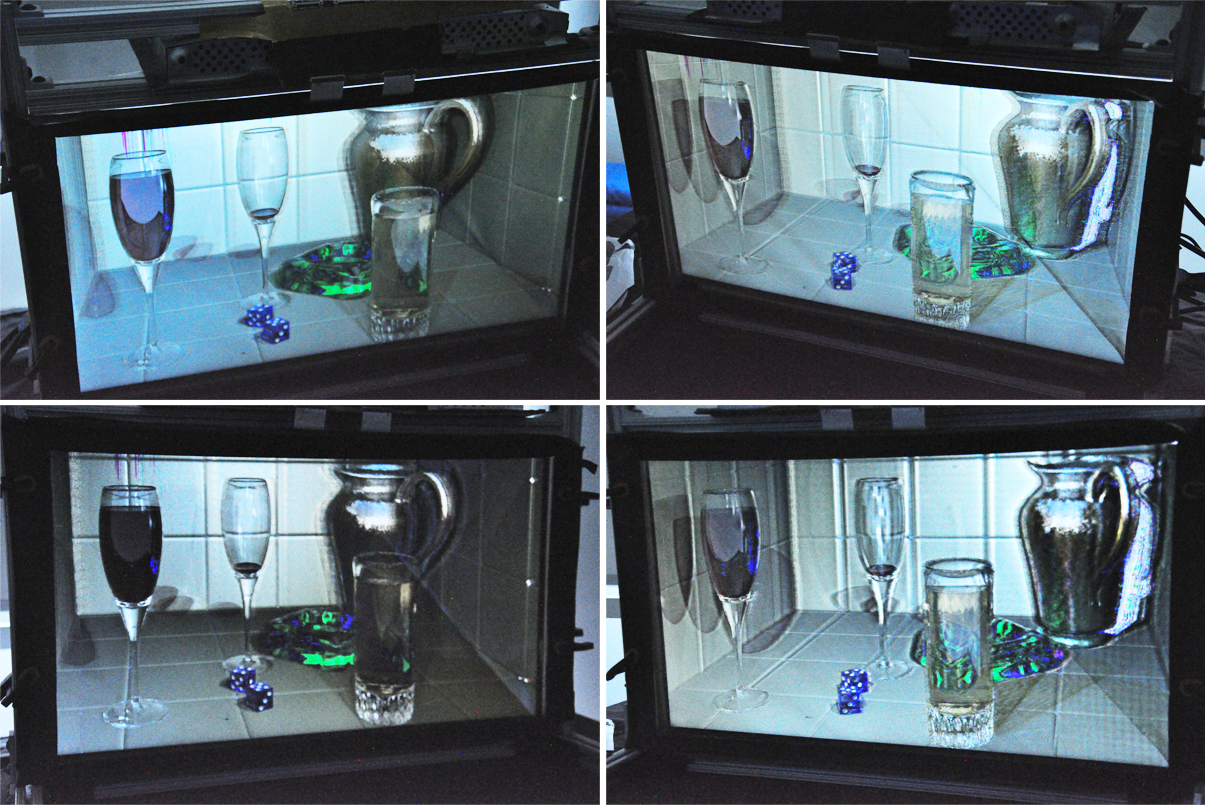

We develop Neural Holography – an algorithmic CGH framework that uses camera-in-the-loop training to achieve unprecedented image fidelity and real-time framerates.

Read More

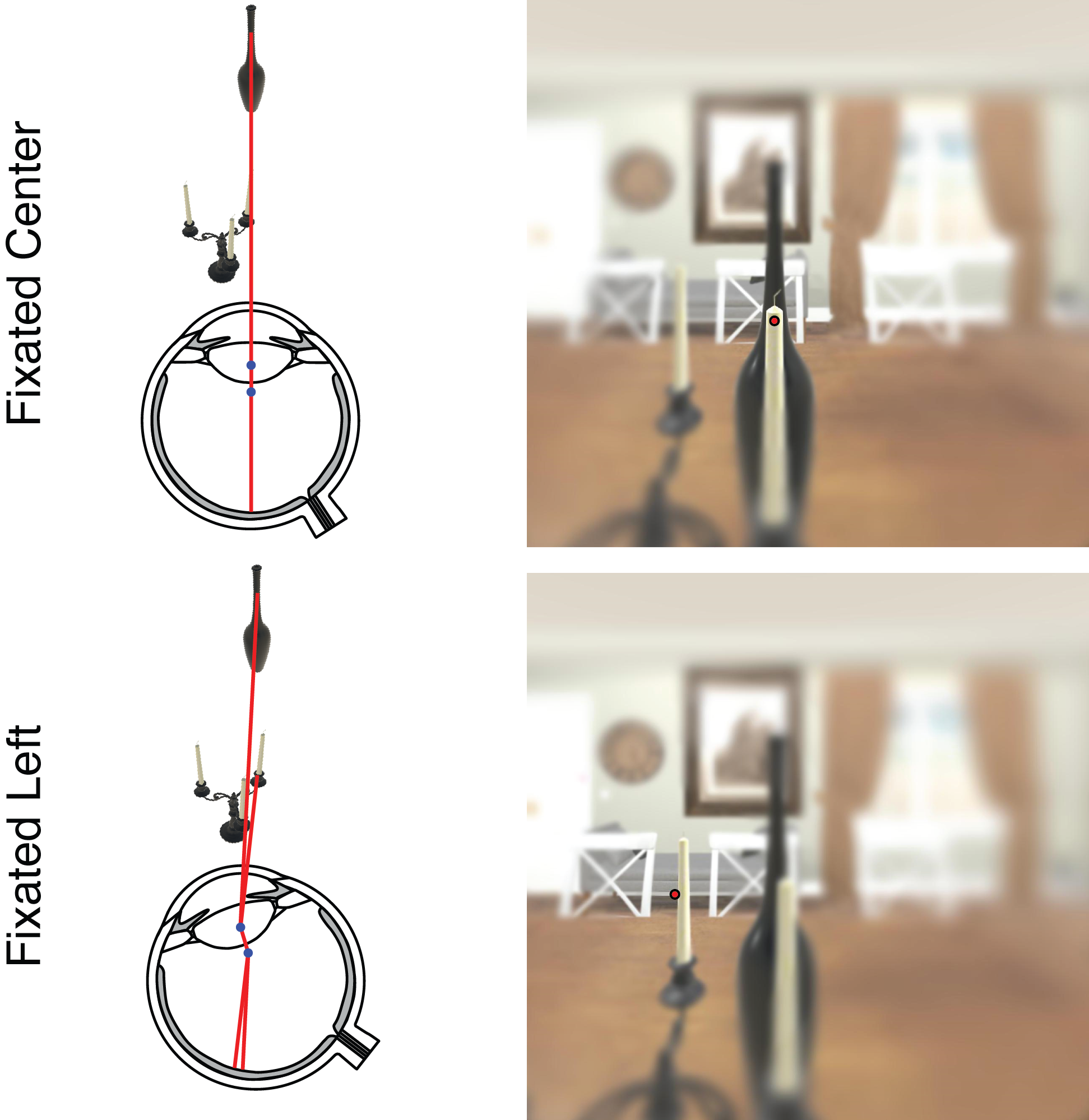

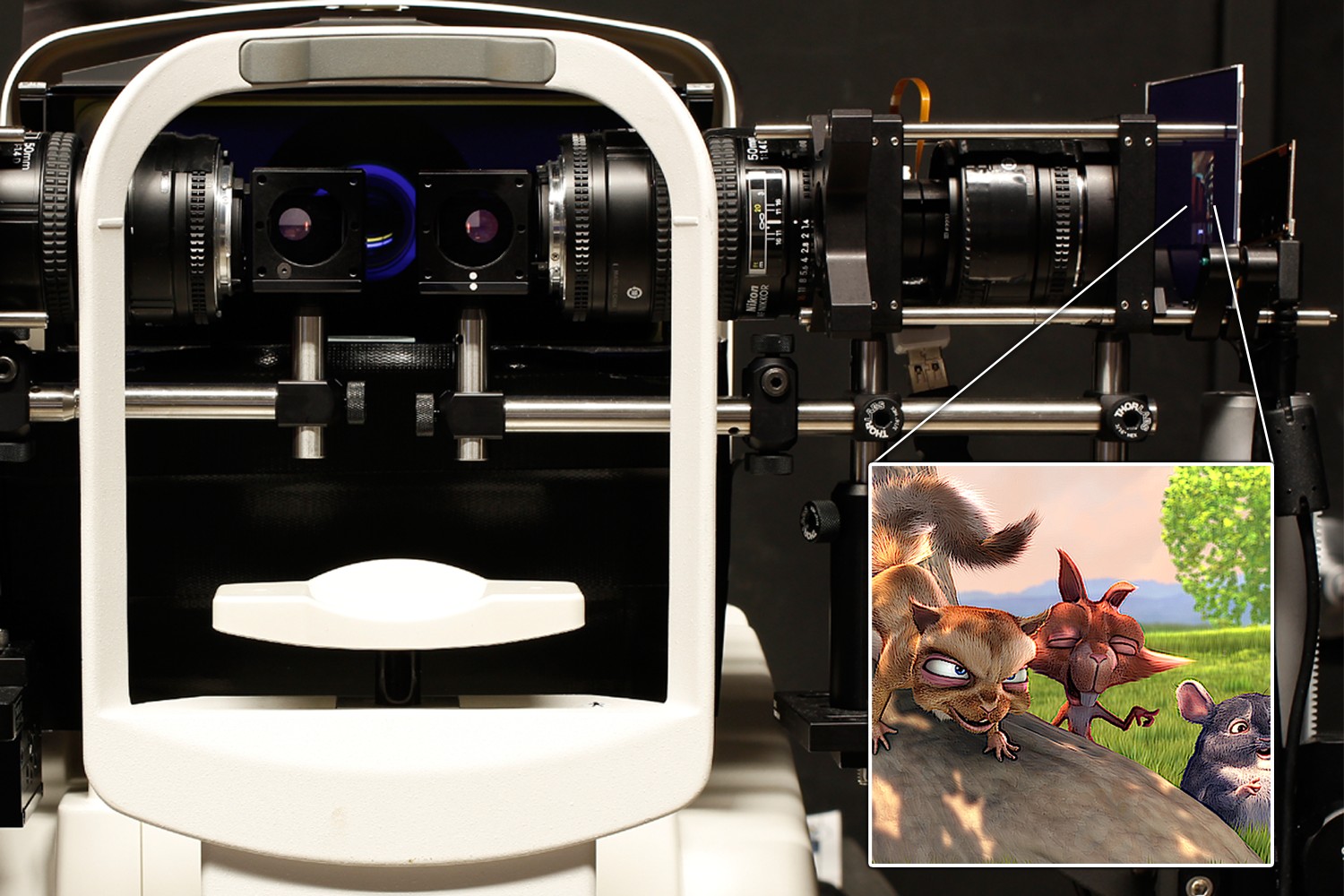

We introduce a gaze-contingent stereo rendering technique that improves perceptual realism and depth perception in VR, as well as physical-digital object alignment in AR displays.

Read More

An implicitly defined neural signal representation for images, audio, shapes, and wavefields.

Read More

A meta-learning approach to generalizing over neural signed distance functions.

Read More

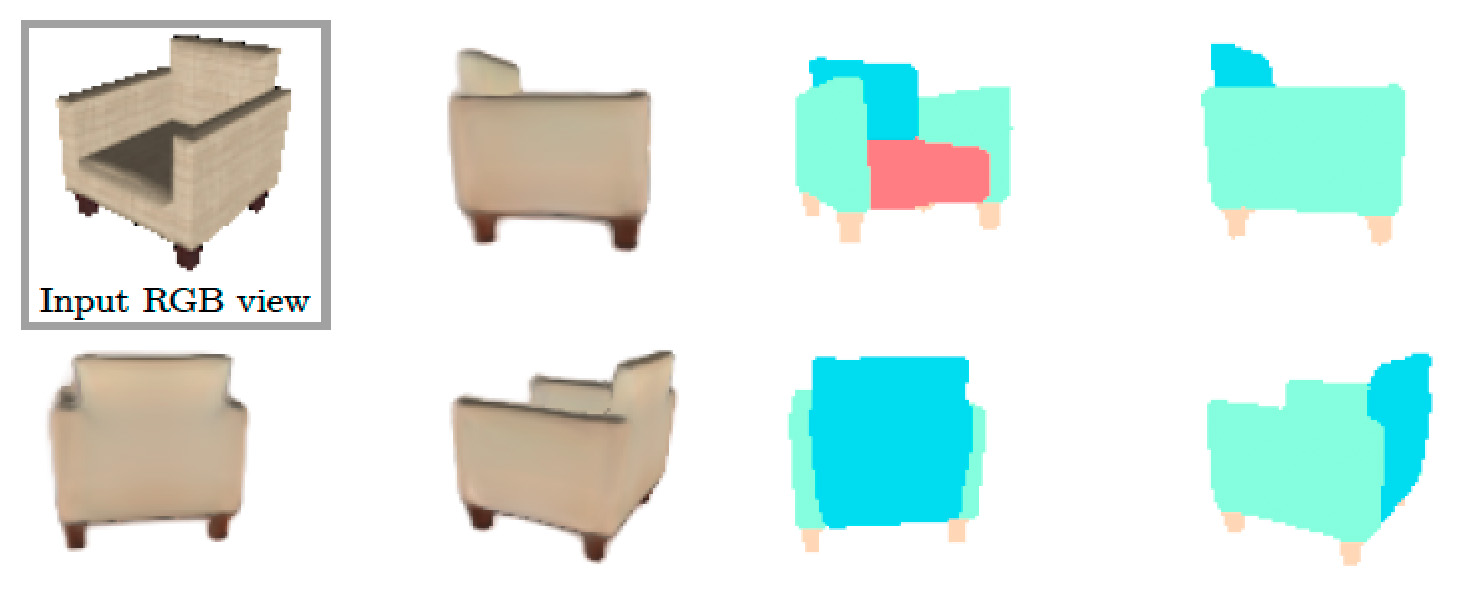

We demonstrate a representation that jointly encodes shape, appearance, and semantics in a 3D-structure-aware manner.

Read More

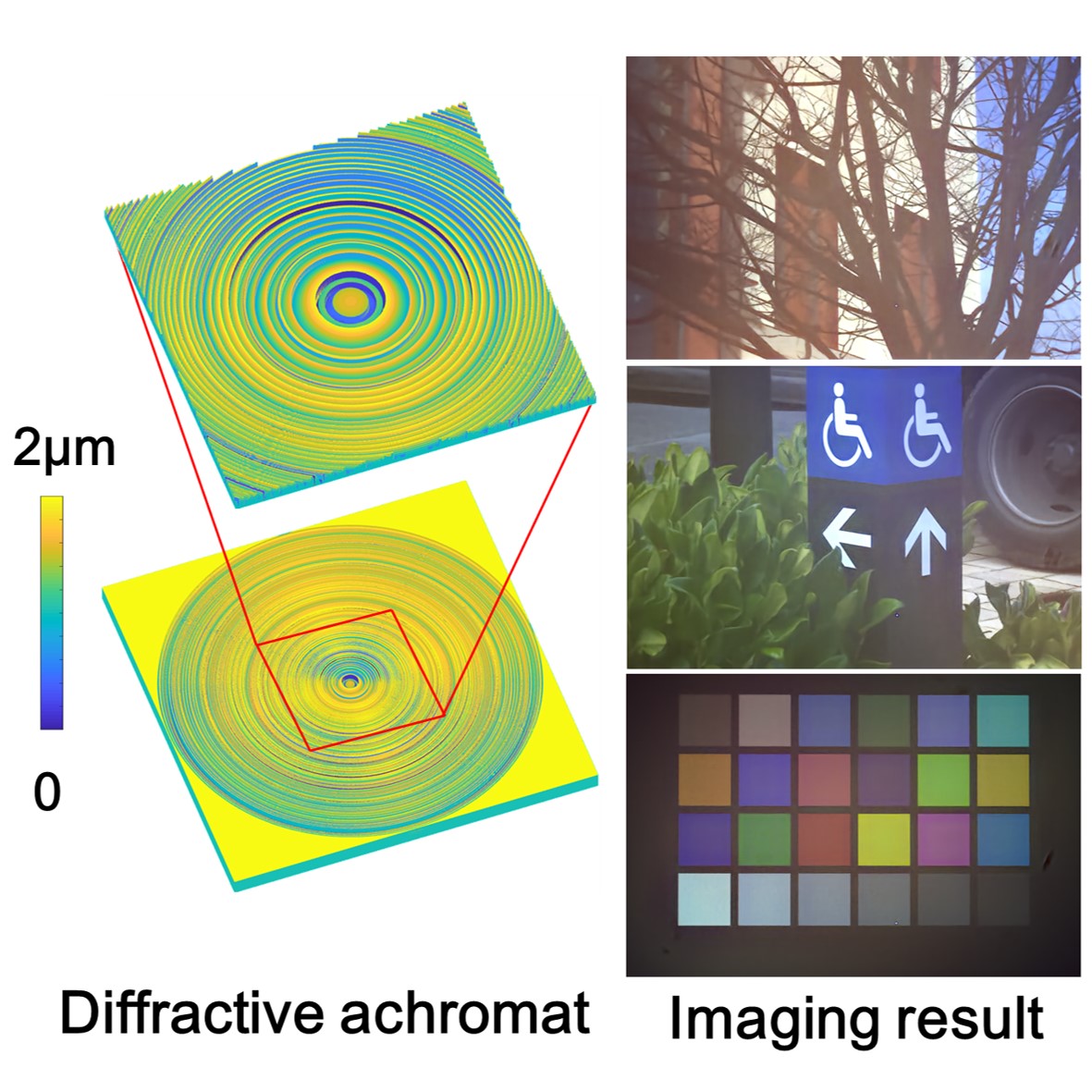

We realize the joint learning of a diffractive achromat with a realistic aperture size and an image recovery neural network in an end-to-end manner across the full visible spectrum.

Read More

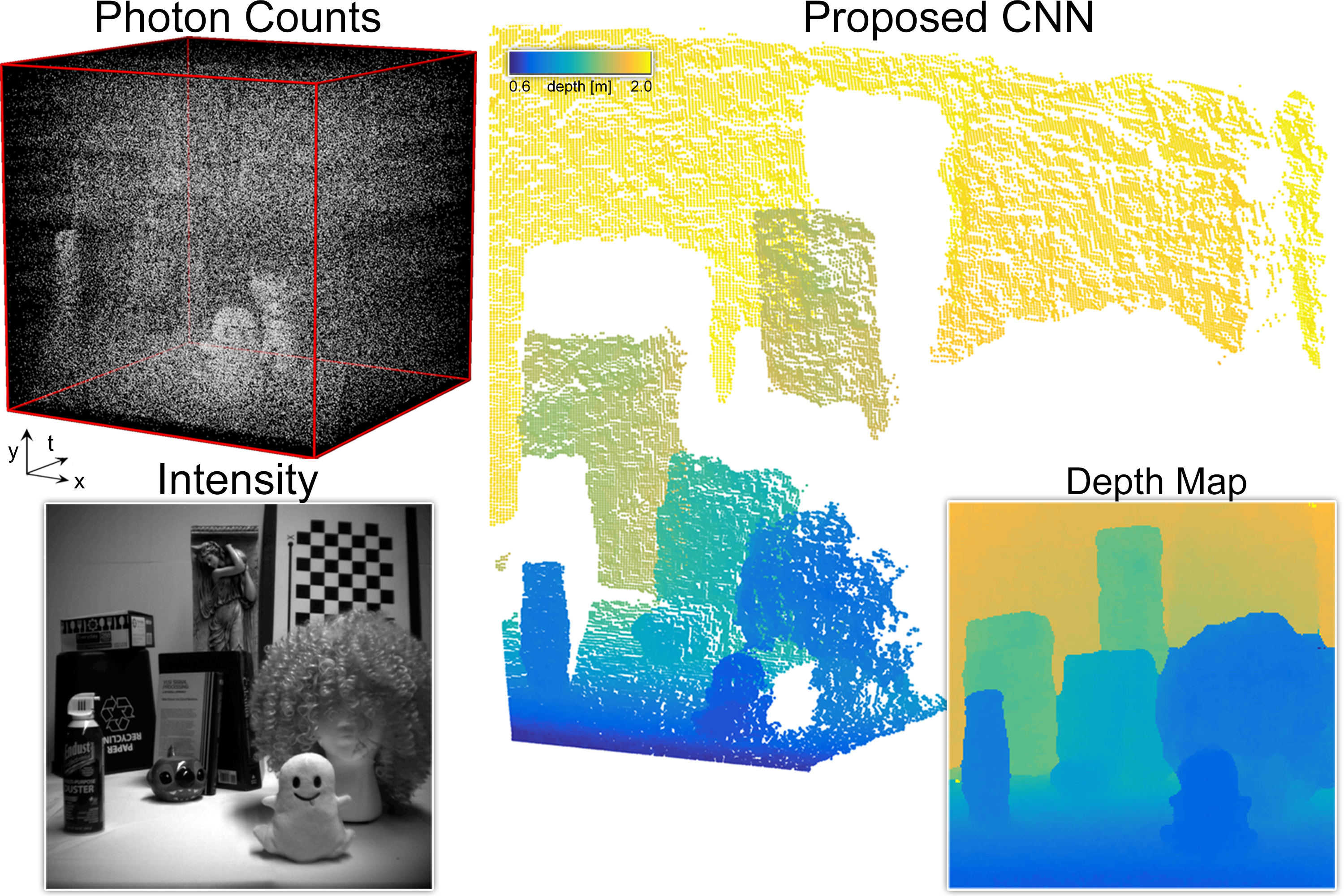

Robust 3D imaging with an RGB camera and a single SPAD transient fueled by machine learning.

Read More

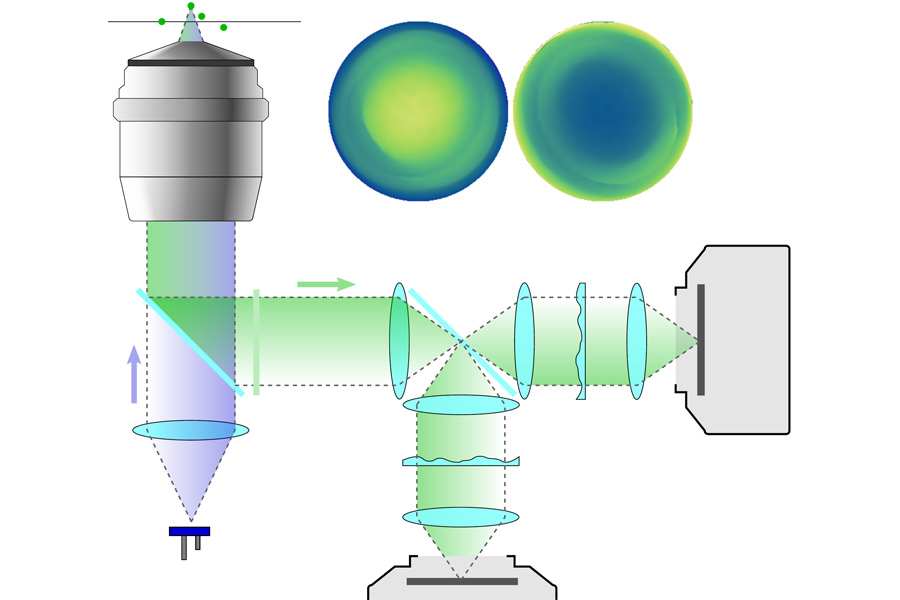

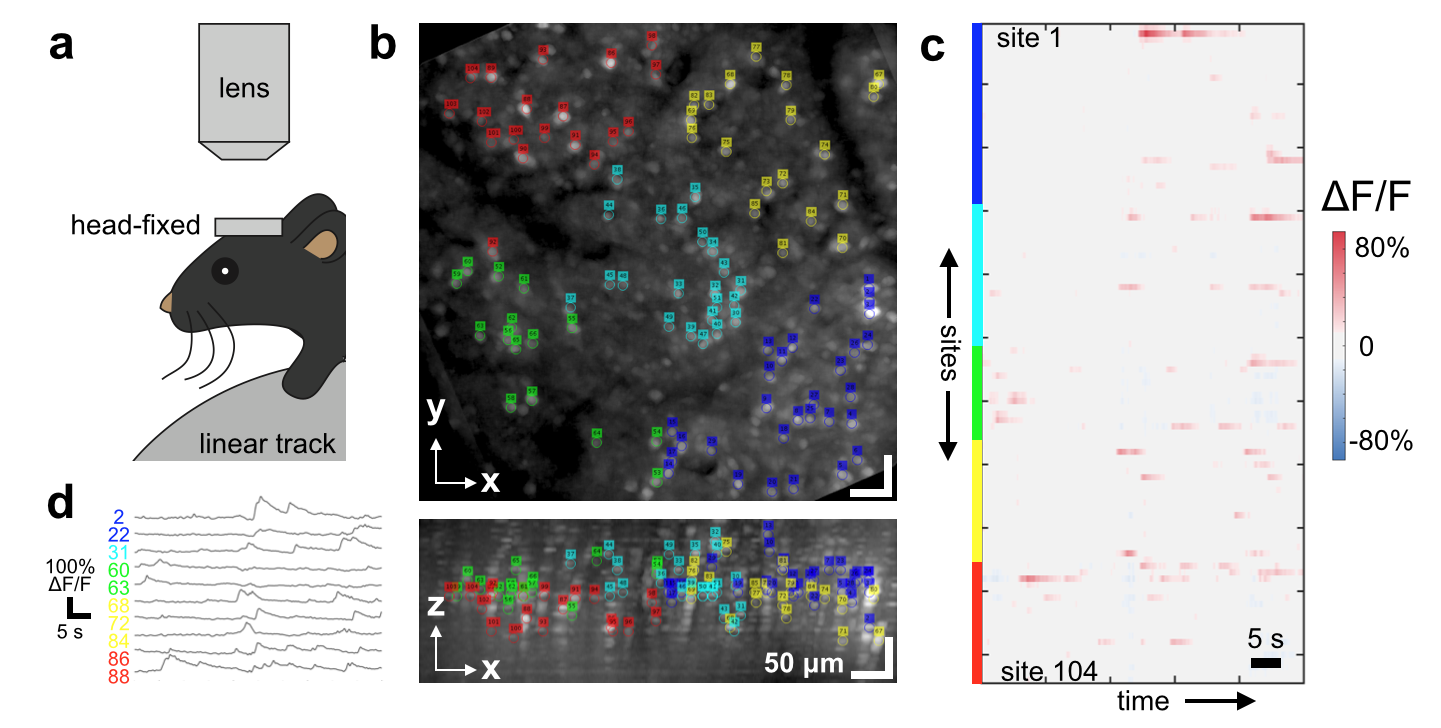

A computational microscope enabling fast, synchronous recording of cortex-spanning neural dynamics.

Read More

End-to-end optimization of optics and image processing for HDR imaging.

Read More

A neural sensor fusion framework for robust 3D imaging with single-photon detectors.

Read More

We propose the learning of the pixel exposures of a sensor, taking into account its hardware constraints, jointly with decoders to reconstruct HDR images and high-speed videos from coded images.

Read More

An end-to-end differentiable depth imaging system which jointly optimizes the LiDAR scanning pattern and sparse depth inpainting.

Read More

We propose a joint albedo–normal approach to non-line-of-sight (NLOS) surface reconstruction using the directional light-cone transform (D-LCT).

Read More

A novel, computational approach to obtaining hard-edge mutual occlusion in optical see-through augmented reality, using only a single spatial light modulator.

Read More

A new gaze-contingent rendering mode for VR/AR that renders perceptually correct ocular parallax, which benefits depth perception and perceptual realism.

Read More

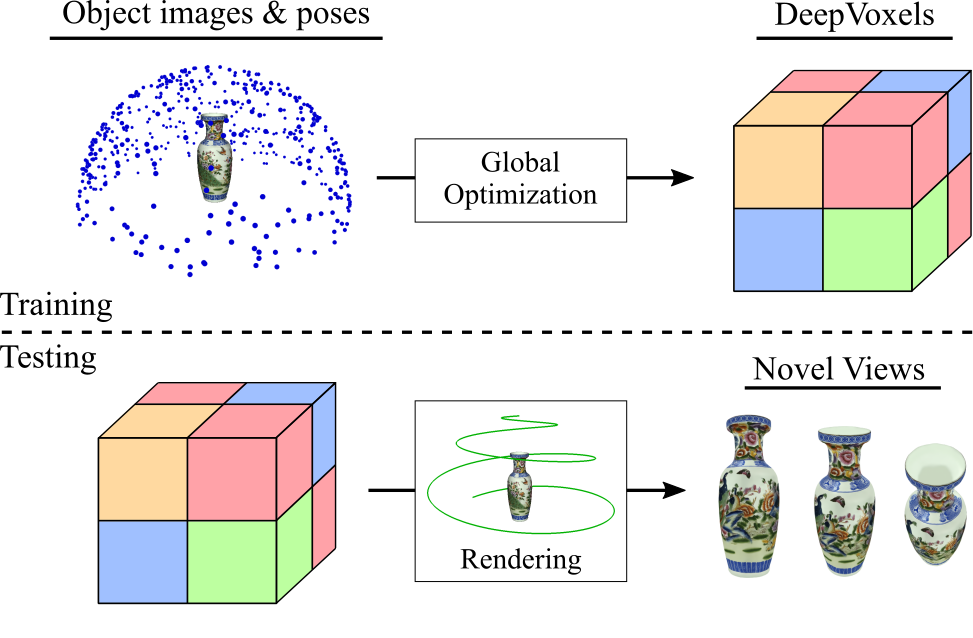

A data-efficient, scalable, interpretable and flexible neural scene representation.

Read More

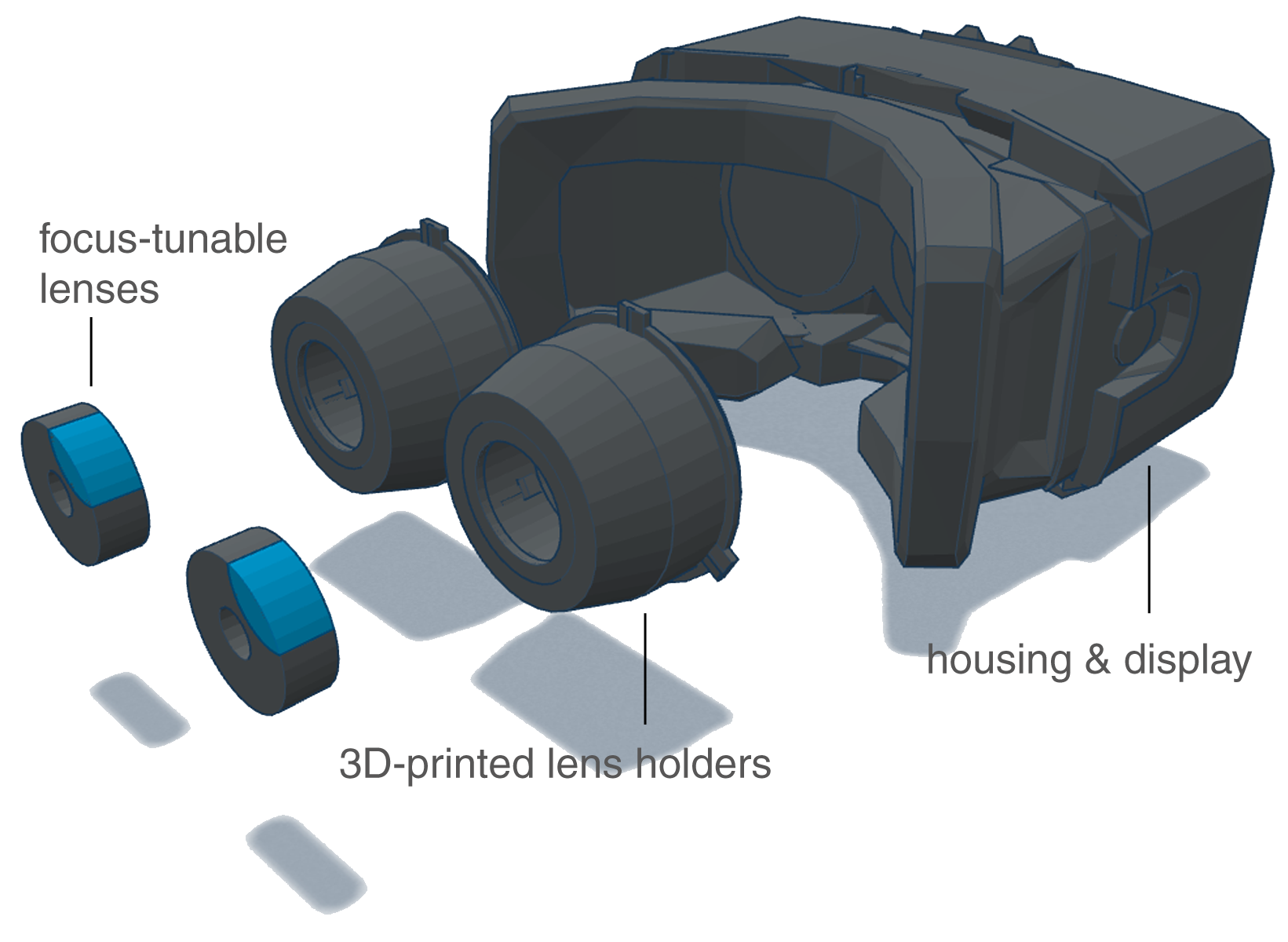

A new presbyopia correction technology that uses eye tracking, depth sensing, and focus-tunable lenses to automatically refocus the real world.

Read More

We propose a lens design and learned reconstruction architecture that provide an order of magnitude increase in field of view for computational imaging using only a single thin-plate lens element.

Read More

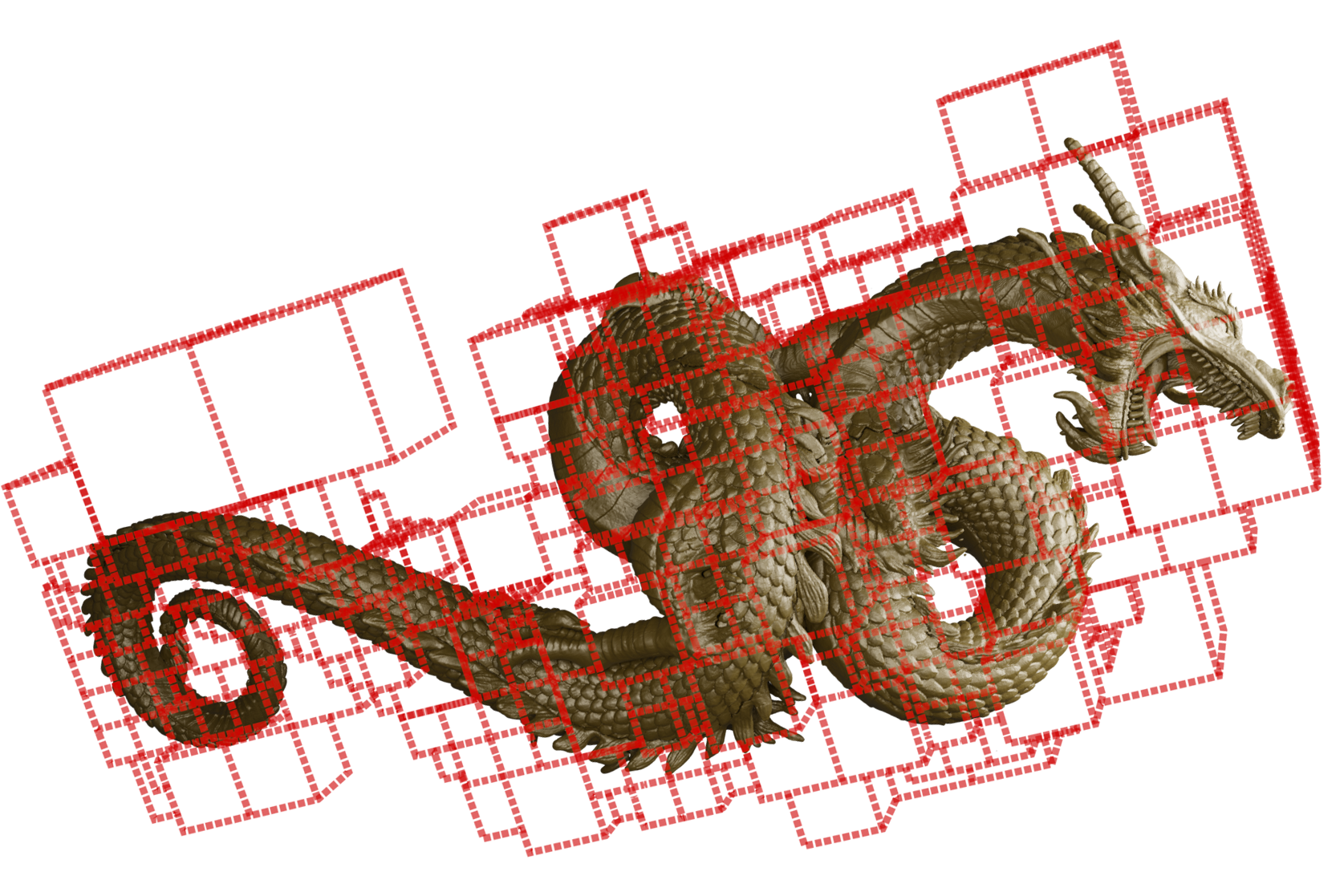

The overlap-add stereogram (OLAS) algorithm uses overlapping hogels to encode the view-dependent lighting effects of a light field into a hologram, achieving better quality than other holographic stereograms.

Read More

A new optical see-through AR display system that renders mutual occlusion in a depth-dependent, perceptually realistic manner.

Read More

Monocular depth estimation and 3D object detection with optimized optical elements, defocus blur, and chromatic aberrations.

Read More

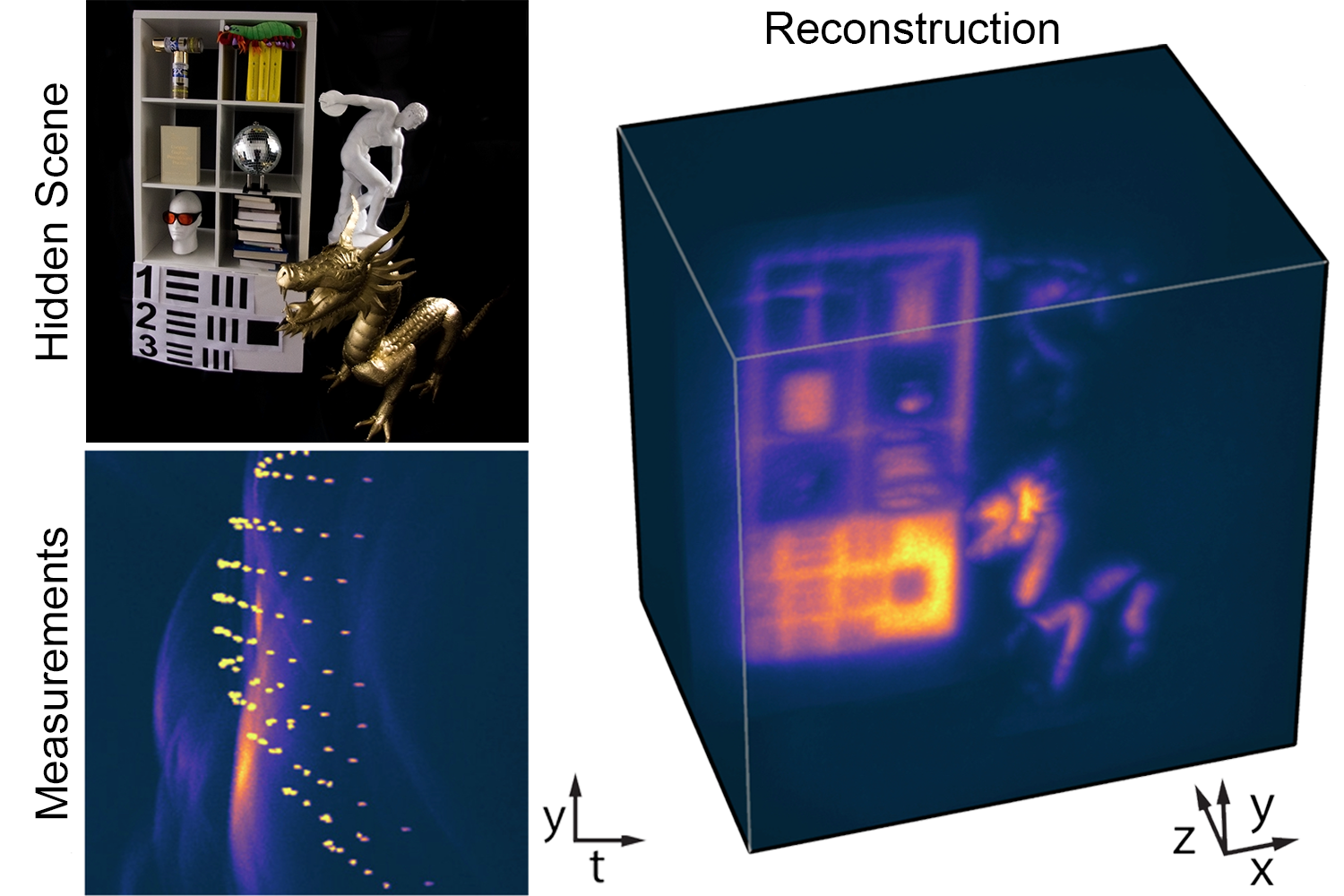

We introduce a wave-based image formation model for the problem of non-line-of-sight (NLOS) imaging. Inspired by inverse methods used in seismology, we adapt a frequency-domain method, f-k migration, for solving

Read More

A new approach to non-line-of-sight imaging that estimates partial occlusions in the hidden volume along with surface normals.

Read More

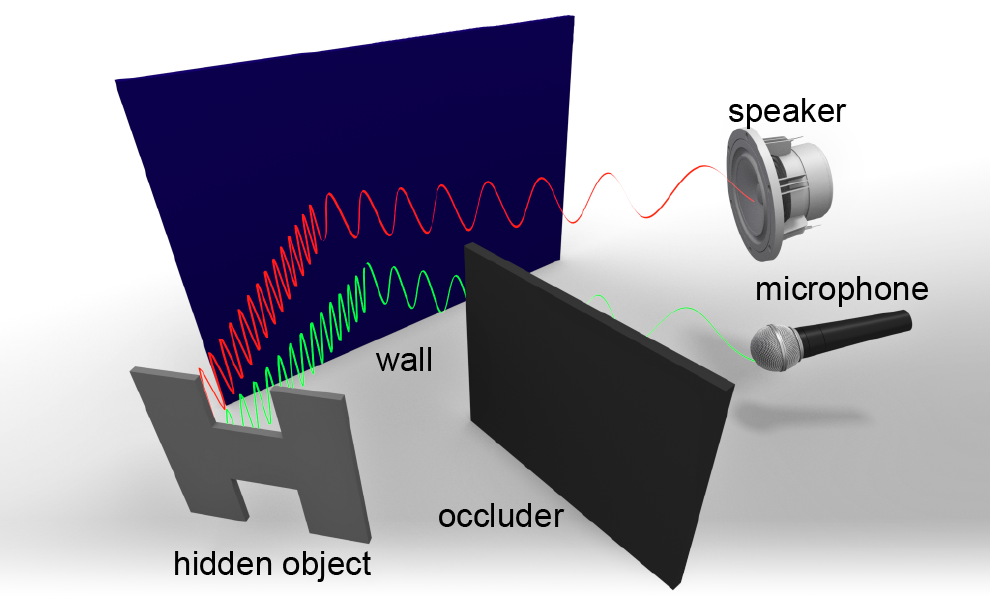

We introduce a novel approach to seeing around corners using acoustic echoes. A system of speakers emits sound waves which scatter from a wall to a hidden object and back.

Read More

3D understanding in generative neural networks.

Read More

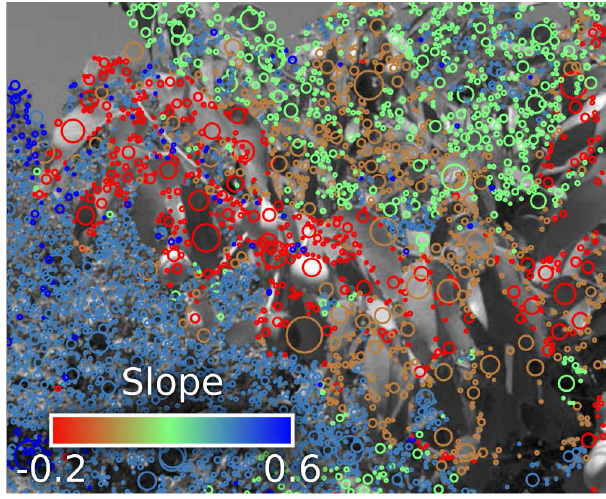

An efficient 4D light field feature detector and descriptor along with a large-scale 4D light field dataset.

Read More

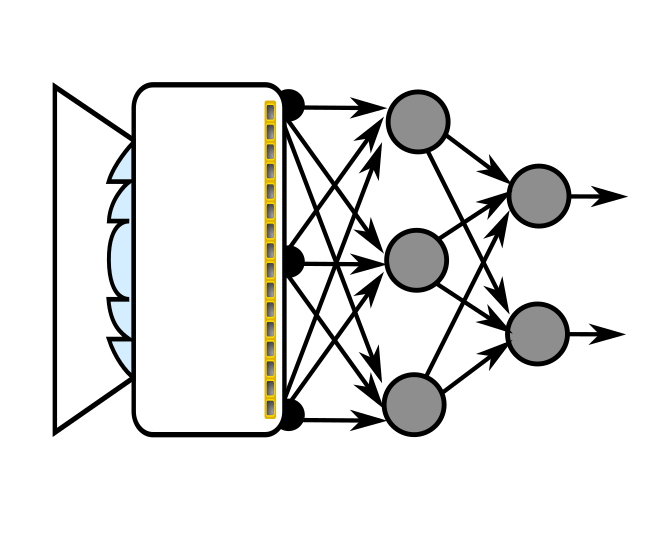

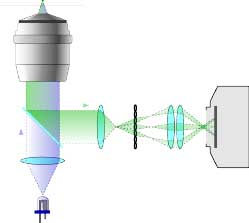

Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification.

Read More

State-of-the-art depth estimation with pileup correction for single-photon avalanche diodes.

Read More

A convex algorithm for 3D deconvolution of low-photon-count fluorescence imaging.

Read More

Jointly optimizing high-level image processing and camera optics to design novel domain-specific cameras.

Read More

Photon efficient 3D imaging using single-photon detectors and deep neural networks.

Read More

A confocal scanning technique solves the reconstruction problem of non-line-of-sight imaging to give fast and high-quality reconstructions of hidden objects.

Read More

Capturing and reconstructing transient images with single-photon avalanche diodes (SPAD) at interactive rates. This page describes the following projects presented at CVPR 2017 and ICCP 2018. CVPR 2017 Reconstructing

Read More

Using eye-tracking data to explore visual saliency in VR.

Read More

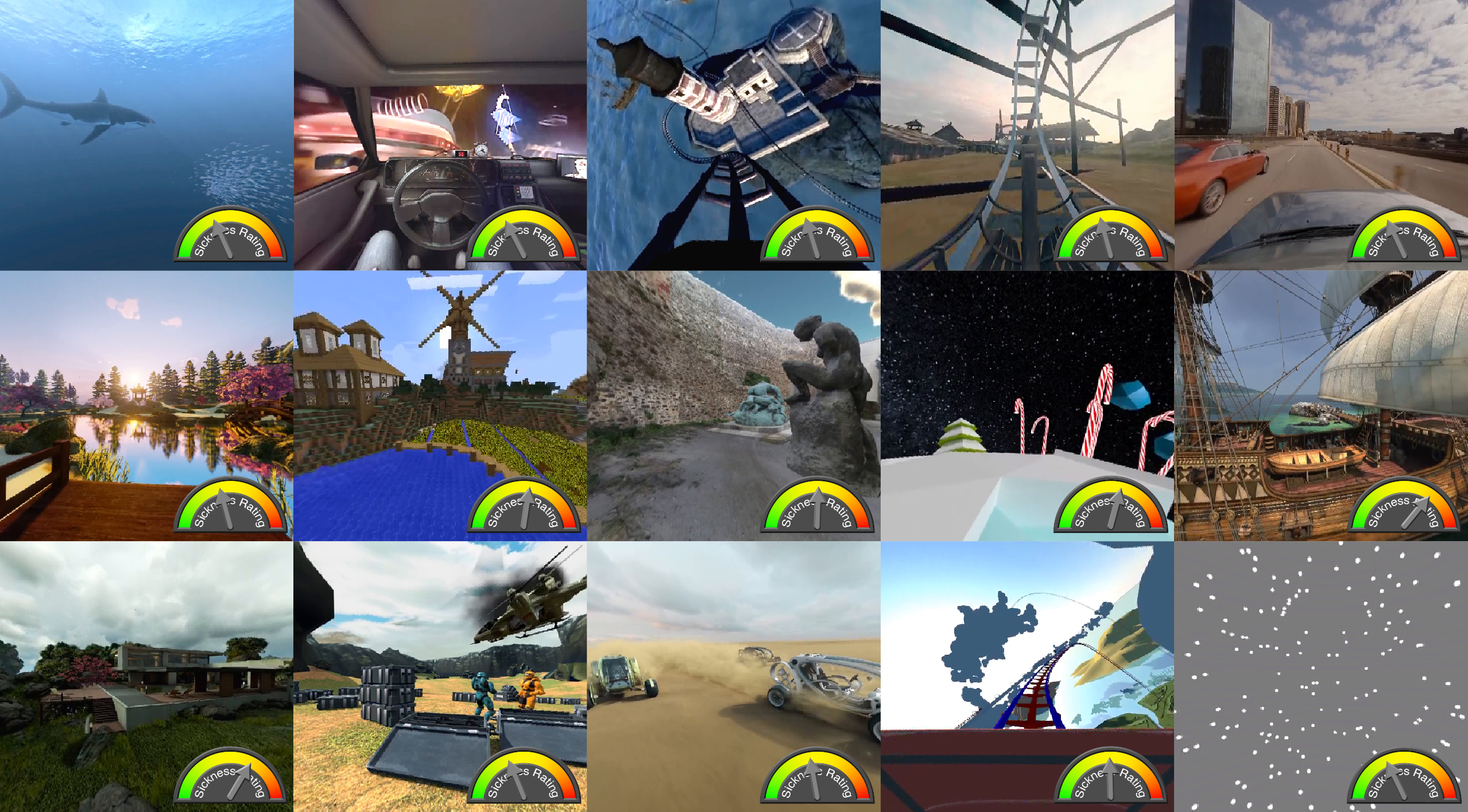

Using machine learning approaches to automate the process of determining sickness ratings for a given 360° stereoscopic video.

Read More

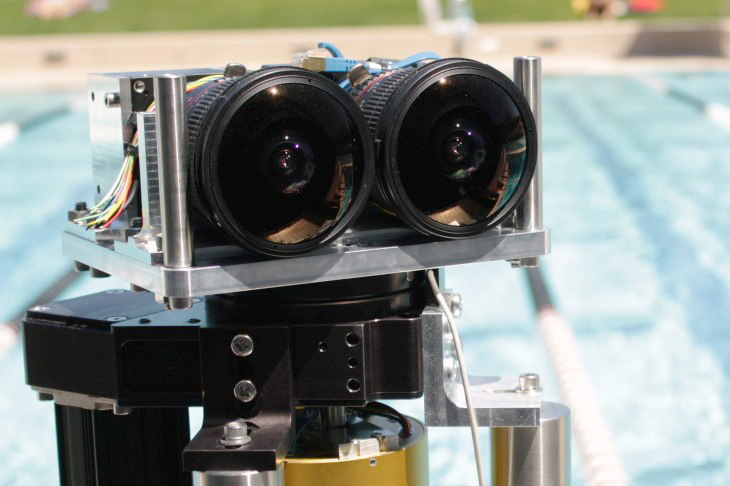

A computational camera system that directly captures streaming 3D virtual reality content natively in the omni-directional stereo (ODS) format.

Read More

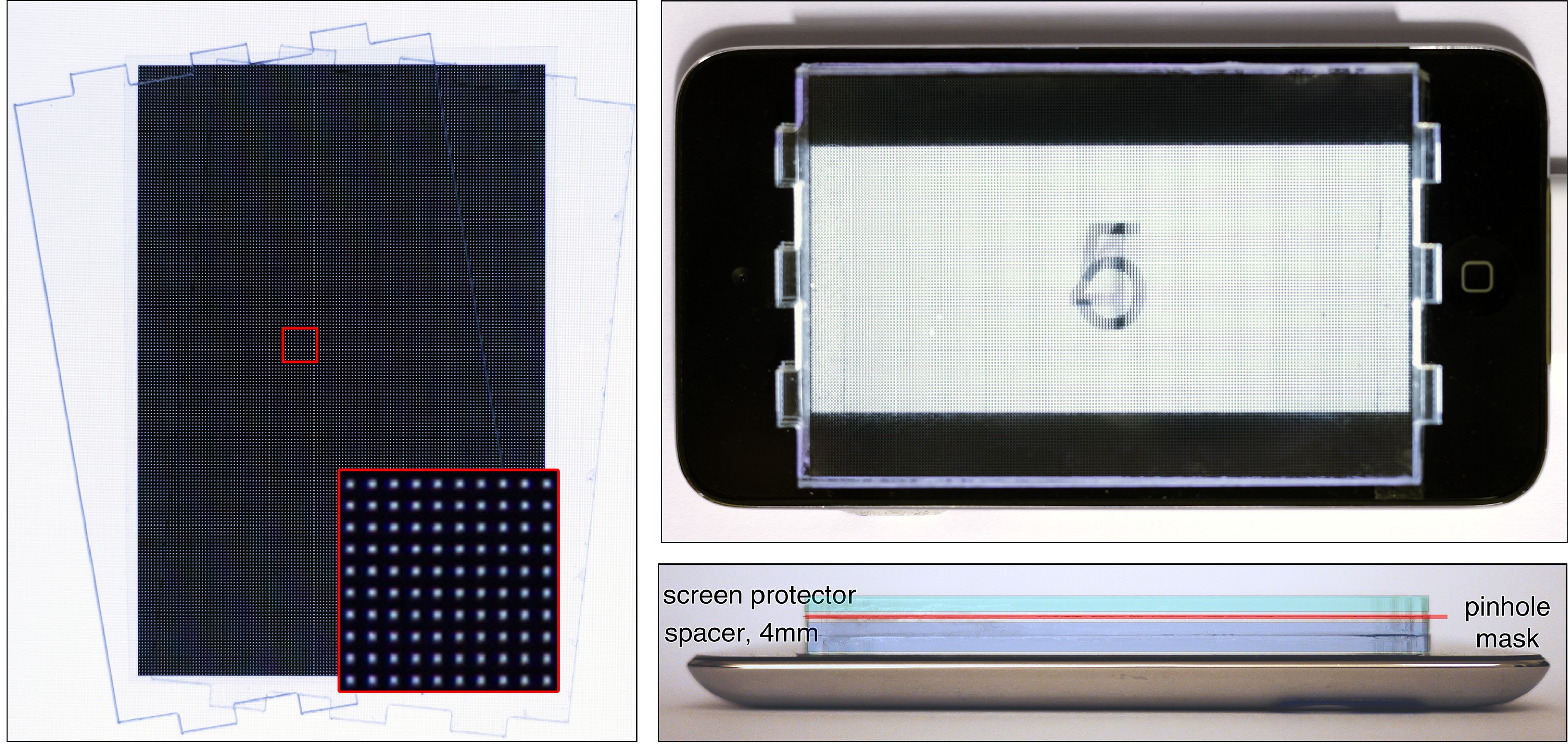

A new VR/AR display technology that produces visual stimuli that are invariant to the accommodation state of the eye. Accommodation is then driven by stereoscopic cues, instead of retinal blur, and

Read More

A gaze-contingent varifocal near-eye display technology with focus cues, and an evaluation of how to make VR displays with focus cues accessible for users of all ages.

Read More

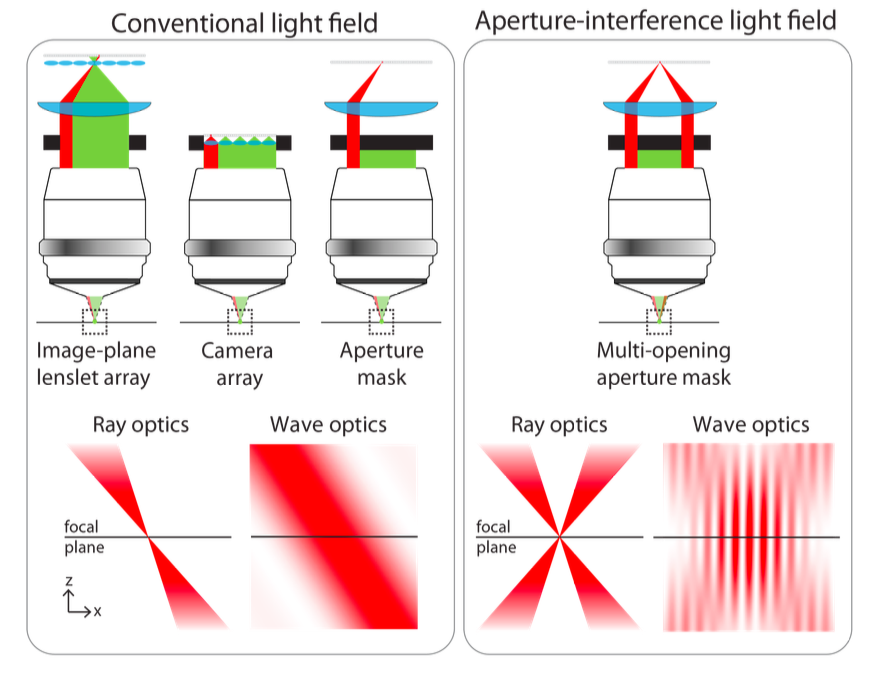

Understanding and developing an approach to improve upon the poor spatial resolution of light field microscopy for state-of-the-art volumetric fluorescence imaging.

Read More

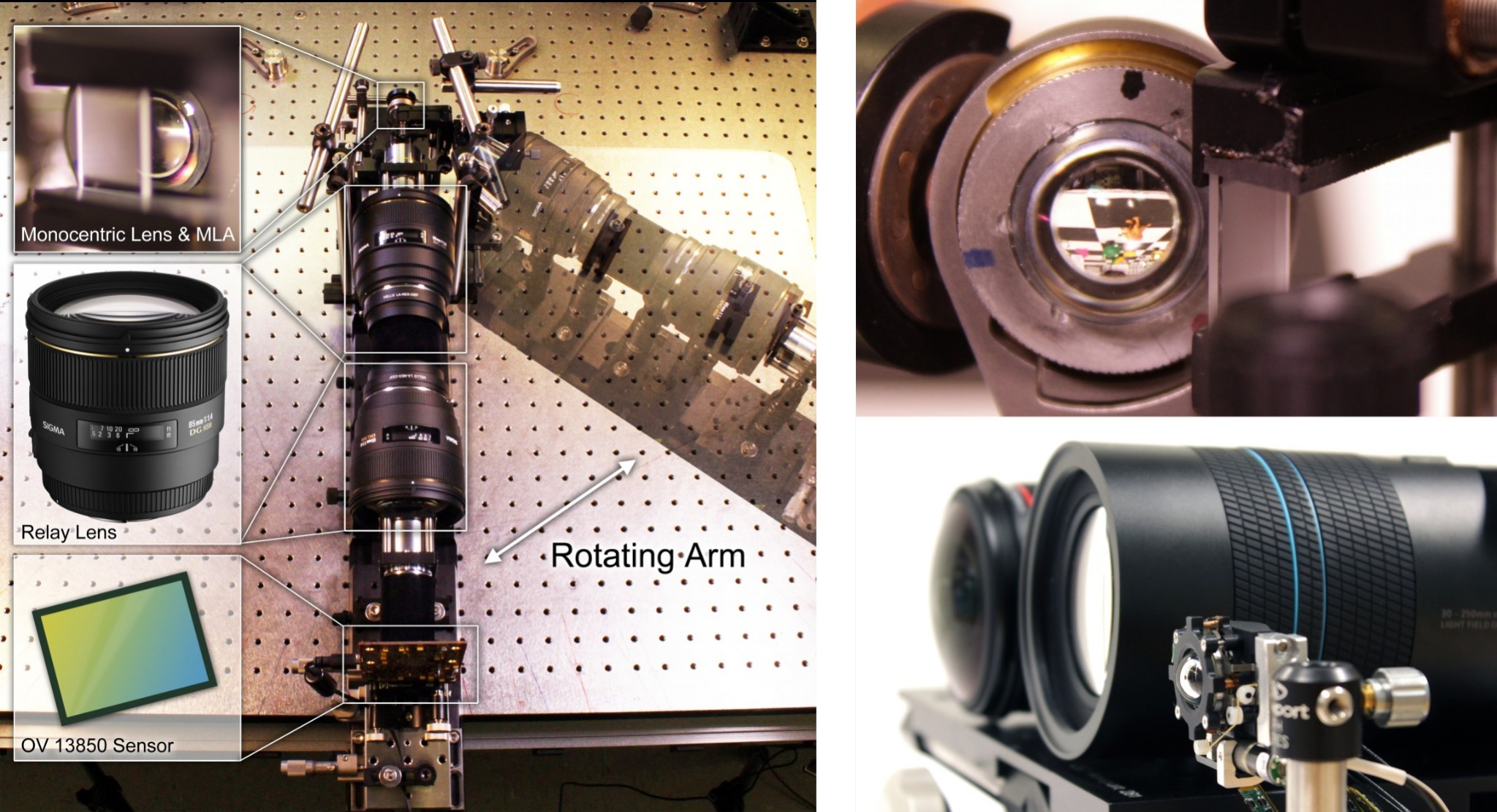

This work presents the first single-lens wide-field-of-view (FOV) light field (LF) camera. Shown below is a pan through a 138°, 72-MPix LF captured using the optical prototype. Shifting the virtual

Read More

ProxImaL is a Python-embedded modeling language for image optimization problems.

Computational imaging with multi-camera time-of-flight imaging systems.

Read More

We develop an optical system based on focus-tunable optics and evaluate three display modes (conventional, dynamic-focus, and monovision) with respect to the vergence-accommodation conflict.

Read More

A method to measure light fields with high diffraction-limited resolution using Wigner-based point spread functions and computed tomographic reconstruction.

Read More

We develop a computational illumination method for fast, large field of view, 3D two-photon illumination in vivo neuronal calcium imaging.

Read More

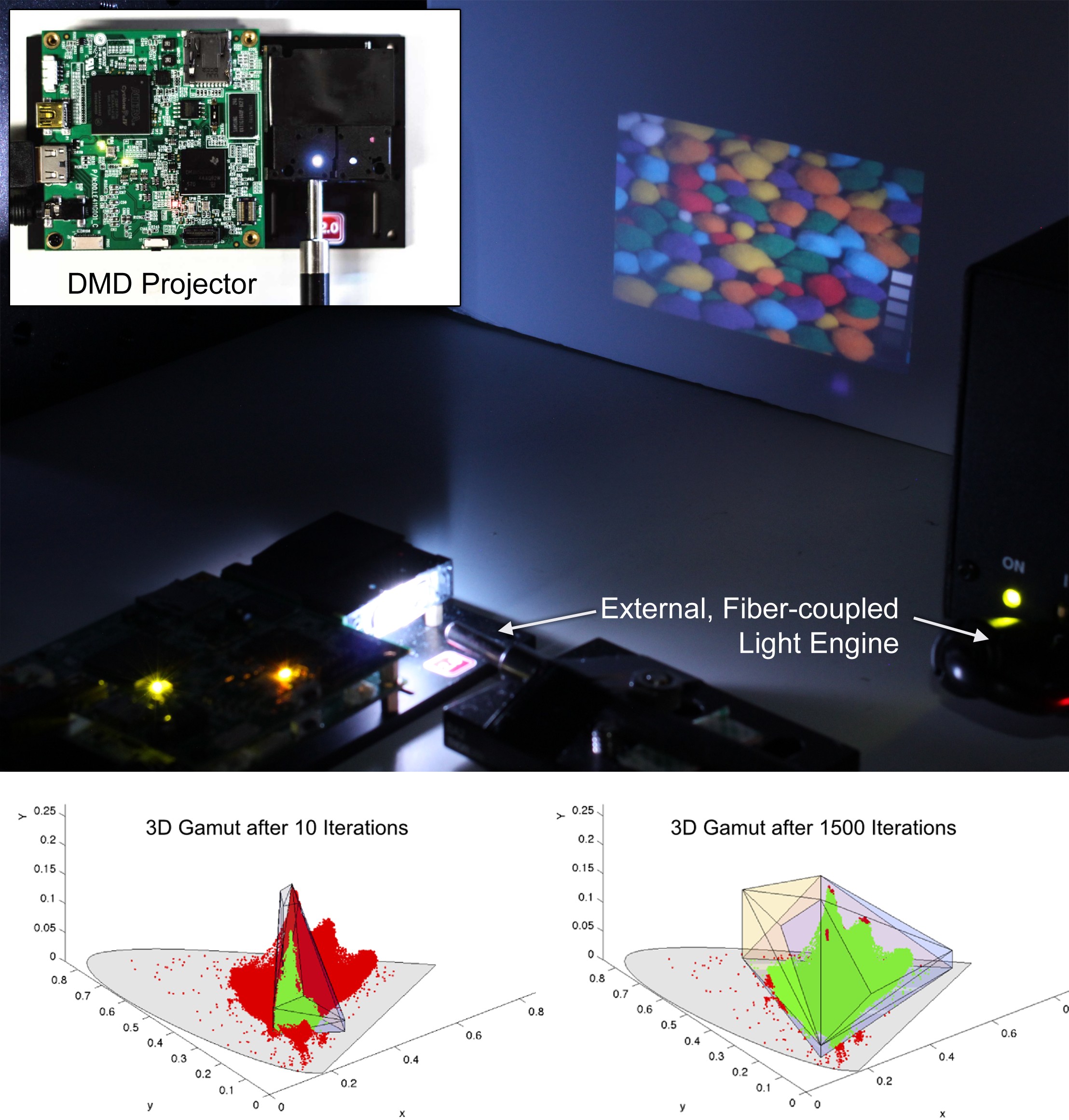

A computational projection system that provides content-adaptive color primaries optimized in a perceptually optimal manner.

Read More

Inspired by Wheatstone’s original stereoscope and augmenting it with modern factored light field synthesis, we present a new near-eye display technology that supports focus cues. These cues are critical for mitigating visual

Read More

A fundamentally new imaging modality for all time-of-flight (ToF) cameras: instantaneous, per-pixel radial velocity. The proposed technique exploits the Doppler effect of objects in motion.

Read More

An extremely efficient and practical approach to convolutional sparse coding. This algorithm has the potential to replace most patch-based sparse coding methods.

Read More

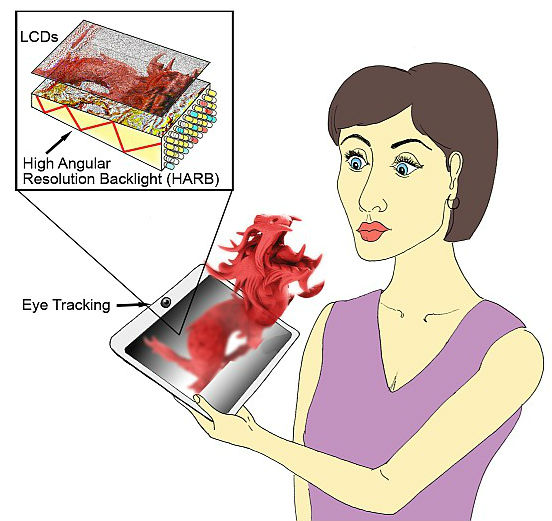

A single-person wide field of view light field display. Head tracking is employed to dynamically steer a smaller, instantaneous viewing zone around the viewer, such that they perceive a much larger

Read More

A computational light field display technology that predistorts the presented content for an observer, so that the target image is perceived without the need for eyewear.

Read More

An experimental light field projection system enabled by the combination of two high-speed spatial light modulators in the projector, nonnegative light field factorization, and an optical angle-expanding screen. The projector

Read More

A light field microscopy approach that enables high-speed, large-scale three-dimensional (3D) imaging of neuronal activity in neuroscience.

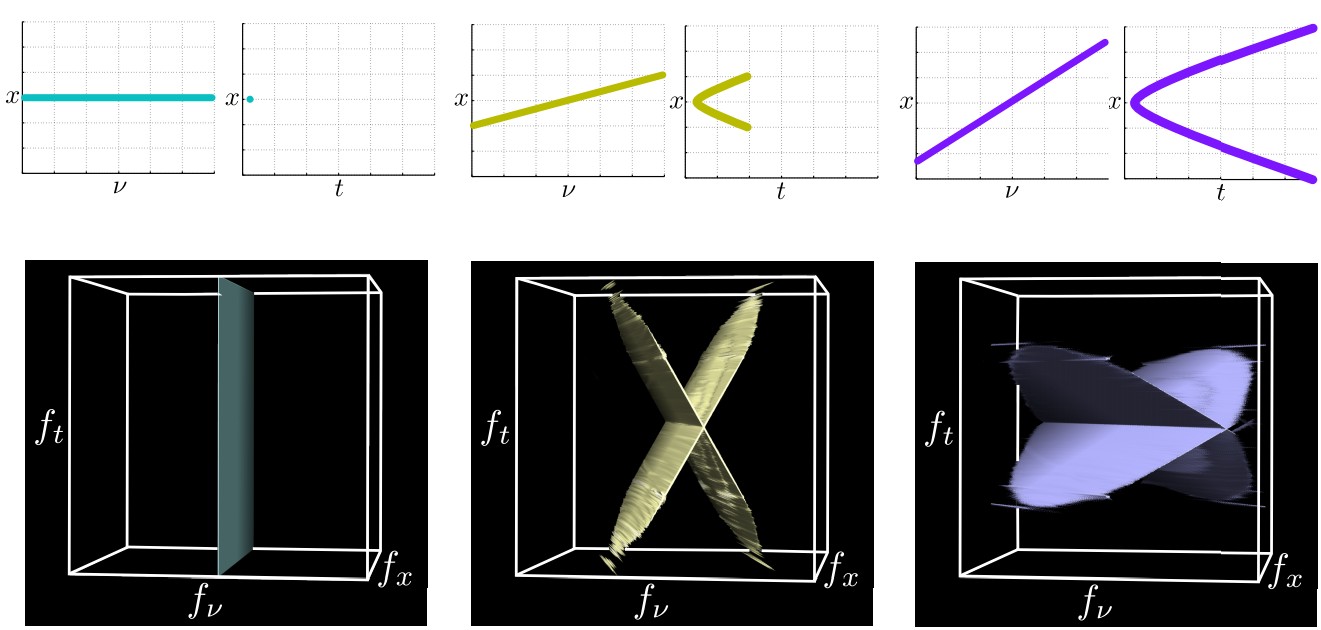

We analyze free space light propagation in the frequency domain considering spatial, temporal, and angular light variation. Using this analysis, we design a new lensless imaging system.

Read More

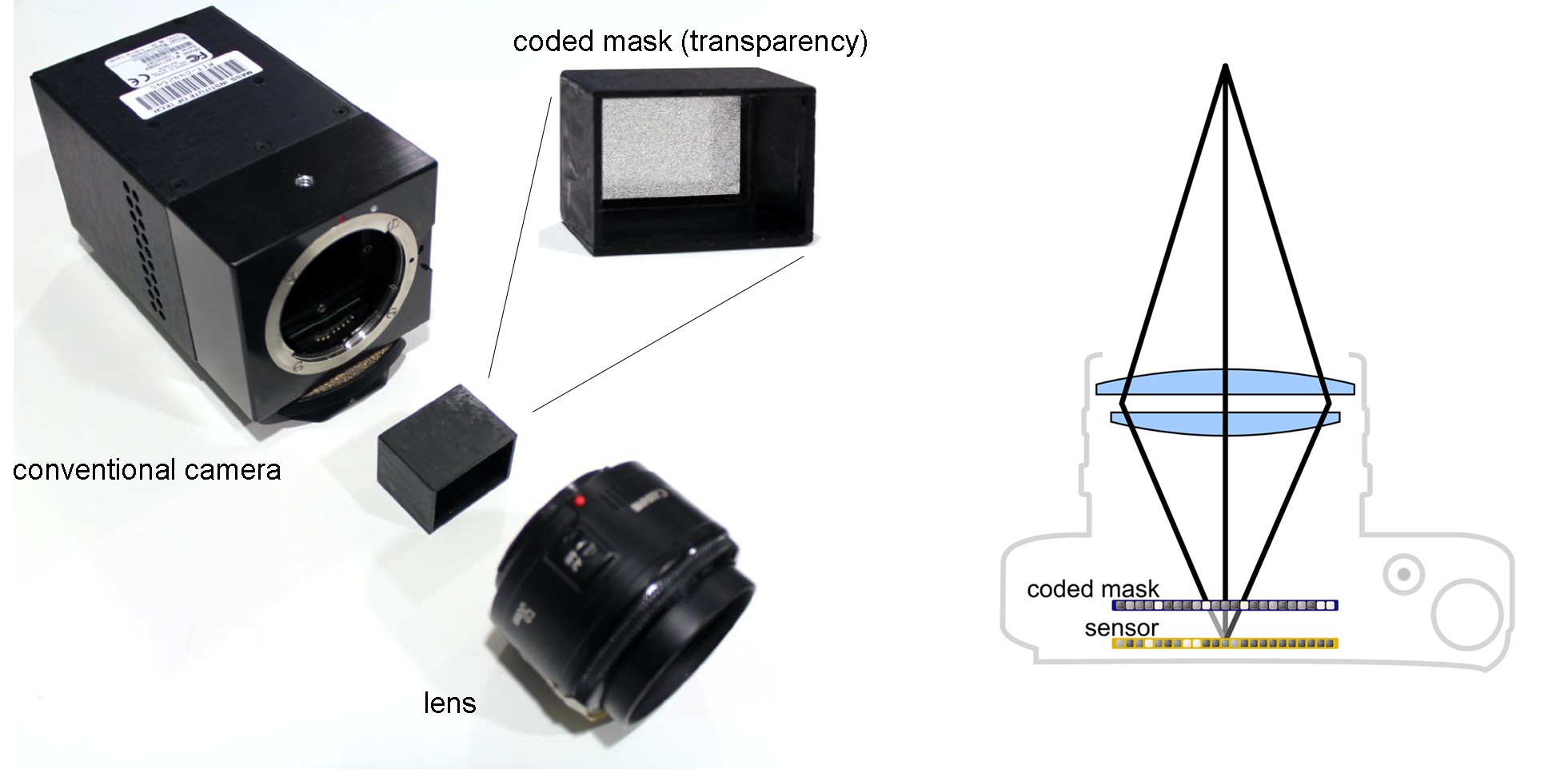

A compressive light field camera architecture that allows for higher-resolution light fields to be recovered than previously possible from a single image.

Read More

A glasses-free, multilayer 3D display design with the potential to provide viewers with nearly correct accommodative depth cues, as well as motion parallax and binocular cues.

Read More