ABSTRACT

Holographic near-eye displays offer unprecedented capabilities for virtual and augmented reality systems, including perceptually important focus cues. Although artificial intelligence–driven algorithms for computer-generated holography (CGH) have recently made much progress in improving the image quality and synthesis efficiency of holograms, these algorithms are not directly applicable to emerging phase-only spatial light modulators (SLMs) that are extremely fast but offer phase control with very limited precision. The speed of these SLMs enables time multiplexing, which in effect provides partially coherent holographic display modes. Here we report advances in camera-calibrated wave propagation models for these types of near-eye holographic displays, and we develop a CGH framework that robustly optimizes the heavily quantized phase patterns of fast SLMs. Our framework is flexible in supporting runtime supervision with different types of content, including 2D and 2.5D RGBD images, 3D focal stacks, and 4D light fields. Using our framework, we demonstrate state-of-the-art results for all of these scenarios in simulation and experiment.

Unlike conventional displays, which directly present the desired intensities, holographic displays show exotic phase patterns on an SLM, which modulates the “phase” of light per pixel. The modulated wavefield propagates and reconstructs a 3D scene in a volume, shown in the second row.
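The two ideas above, heavily quantized phase control and time multiplexing, can be illustrated with a minimal NumPy sketch. It is not the framework's implementation: the function names `quantize_phase` and `time_multiplexed_intensity` are hypothetical, the quantizer is a plain round-to-nearest-level rule, and the time-multiplexed image is modeled as the mean intensity of several rapidly displayed frames, which is an assumption about how the eye or camera integrates them.

```python
import numpy as np

def quantize_phase(phase, bits):
    """Round a phase map (radians) to the nearest of 2**bits evenly
    spaced levels on [0, 2*pi). Hypothetical helper for illustration."""
    levels = 2 ** bits
    step = 2 * np.pi / levels
    # Wrap to [0, 2*pi), round to the nearest level index, and wrap the
    # index so that values just below 2*pi map back to level 0.
    idx = np.round(np.mod(phase, 2 * np.pi) / step).astype(int) % levels
    return idx * step

def time_multiplexed_intensity(phases, propagate):
    """Average the intensities produced by several phase-only frames.

    `phases` is a sequence of 2D phase maps; `propagate` is any function
    mapping a complex SLM field to a complex field at the target plane.
    Averaging intensities (not fields) models incoherent summation over
    time, an assumption made for this sketch."""
    intensities = [np.abs(propagate(np.exp(1j * p))) ** 2 for p in phases]
    return np.mean(intensities, axis=0)
```

For example, passing four 2-bit phase maps and a propagation function returns a single intensity image; with more frames, the speckle of the individual frames tends to average out.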

CITATION

S. Choi*, M. Gopakumar*, Y. Peng, J. Kim, M. O’Toole, and G. Wetzstein, “Time-multiplexed Neural Holography: A flexible framework for holographic near-eye displays with fast heavily-quantized spatial light modulators”, SIGGRAPH 2022.

@inproceedings{choi2022time,
  title={Time-multiplexed Neural Holography: A flexible framework for holographic near-eye displays with fast heavily-quantized spatial light modulators},
  author={Choi, Suyeon and Gopakumar, Manu and Peng, Yifan and Kim, Jonghyun and O'Toole, Matthew and Wetzstein, Gordon},
  booktitle={Proceedings of the ACM SIGGRAPH},
  pages={1--9},
  year={2022}
}

|

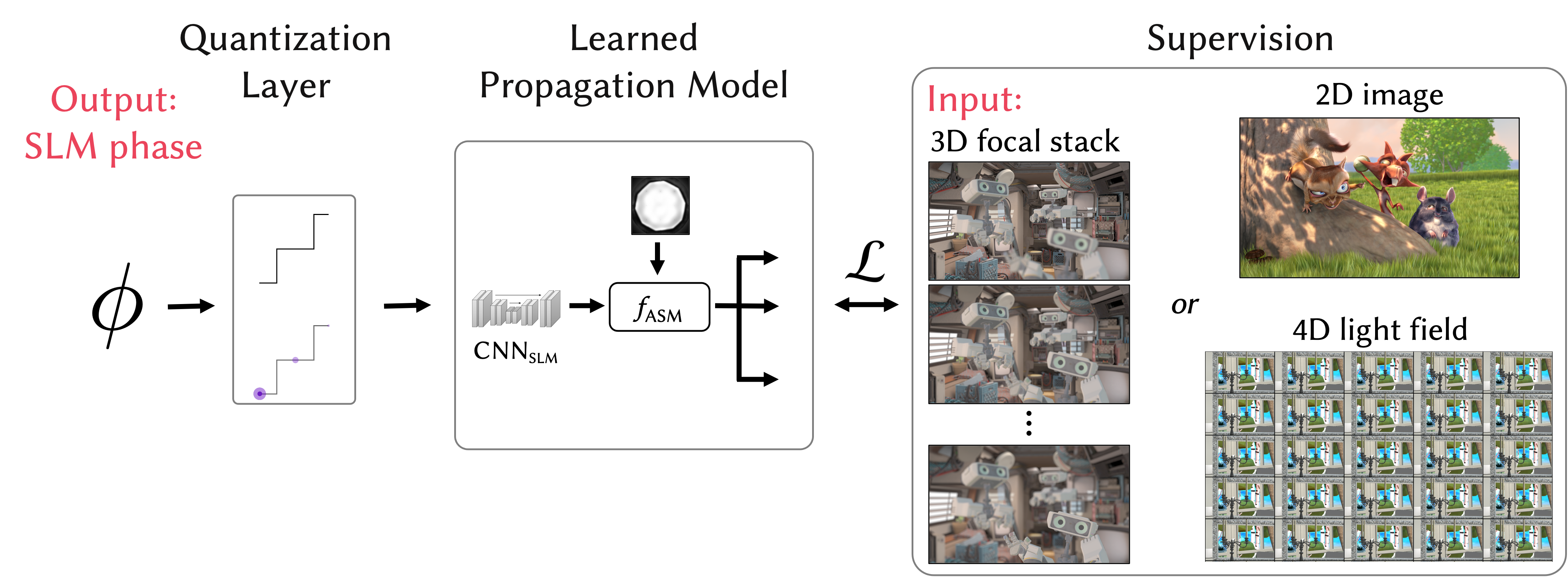

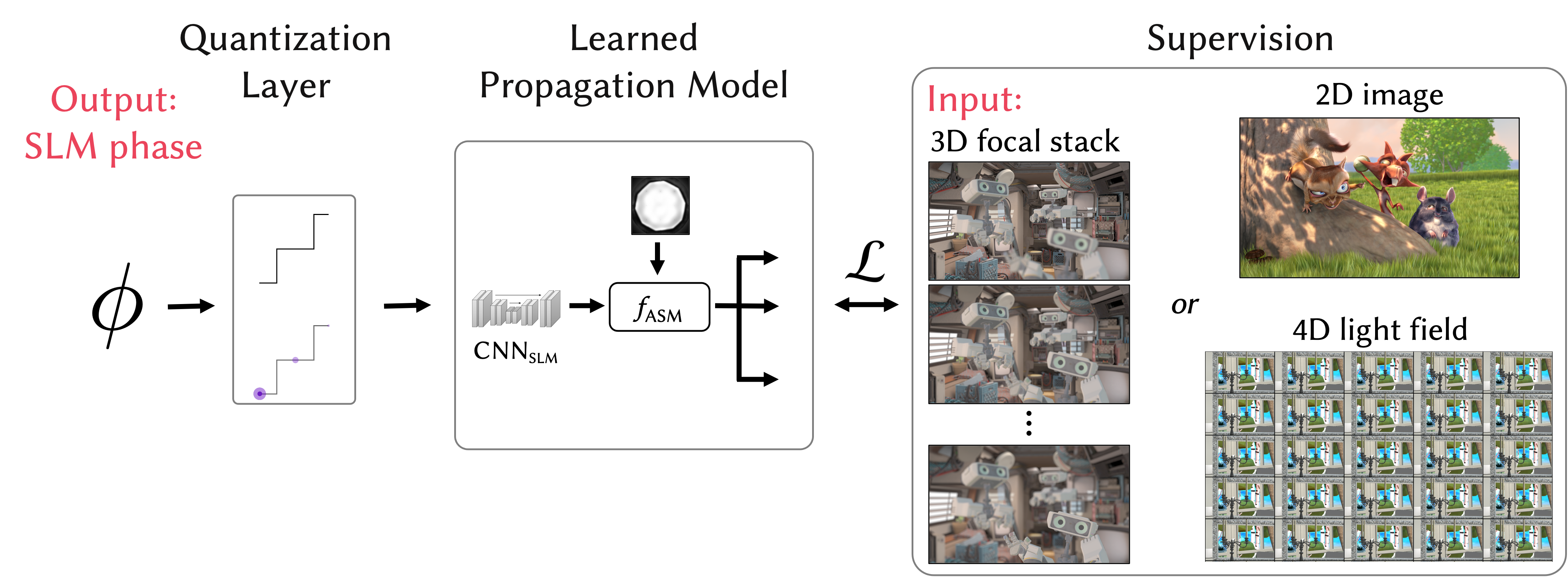

| Illustration of our framework. The complex-valued field at the SLM is adjusted by several learnable terms (including discrete lookup table) and then processed by a CNN. The resulting complex-valued wave field is propagated to all target planes using the ASM wave propagation operator with amplitude and phase at the Fourier domain. The wave fields at each target plane are processed again by smaller CNNs. The proposed framework applies to multiple input forms, including 2D, 2.5D, 3D, and 4D. |
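The ASM (angular spectrum method) step mentioned in the caption can be sketched as follows. This is only the ideal, textbook operator, assuming a square pixel pitch and a monochromatic source; it omits the learnable terms, the CNNs, and the Fourier-domain amplitude and phase described above. The function name `asm_propagate` and its parameters are hypothetical.

```python
import numpy as np

def asm_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a sampled complex field by `distance` (meters) using
    the angular spectrum method. Illustrative sketch only."""
    ny, nx = field.shape
    # Spatial frequencies (cycles per meter) of the FFT grid.
    fx = np.fft.fftfreq(nx, d=pixel_pitch)
    fy = np.fft.fftfreq(ny, d=pixel_pitch)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent.
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Transfer function: pure phase delay for propagating components,
    # zero for evanescent ones.
    H = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)
```

Calling `asm_propagate` once per target distance yields the field at each plane. The numbers used to try it out (for instance a 520 nm wavelength and an 8 µm pixel pitch) are placeholders, not the prototype's specifications.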

|

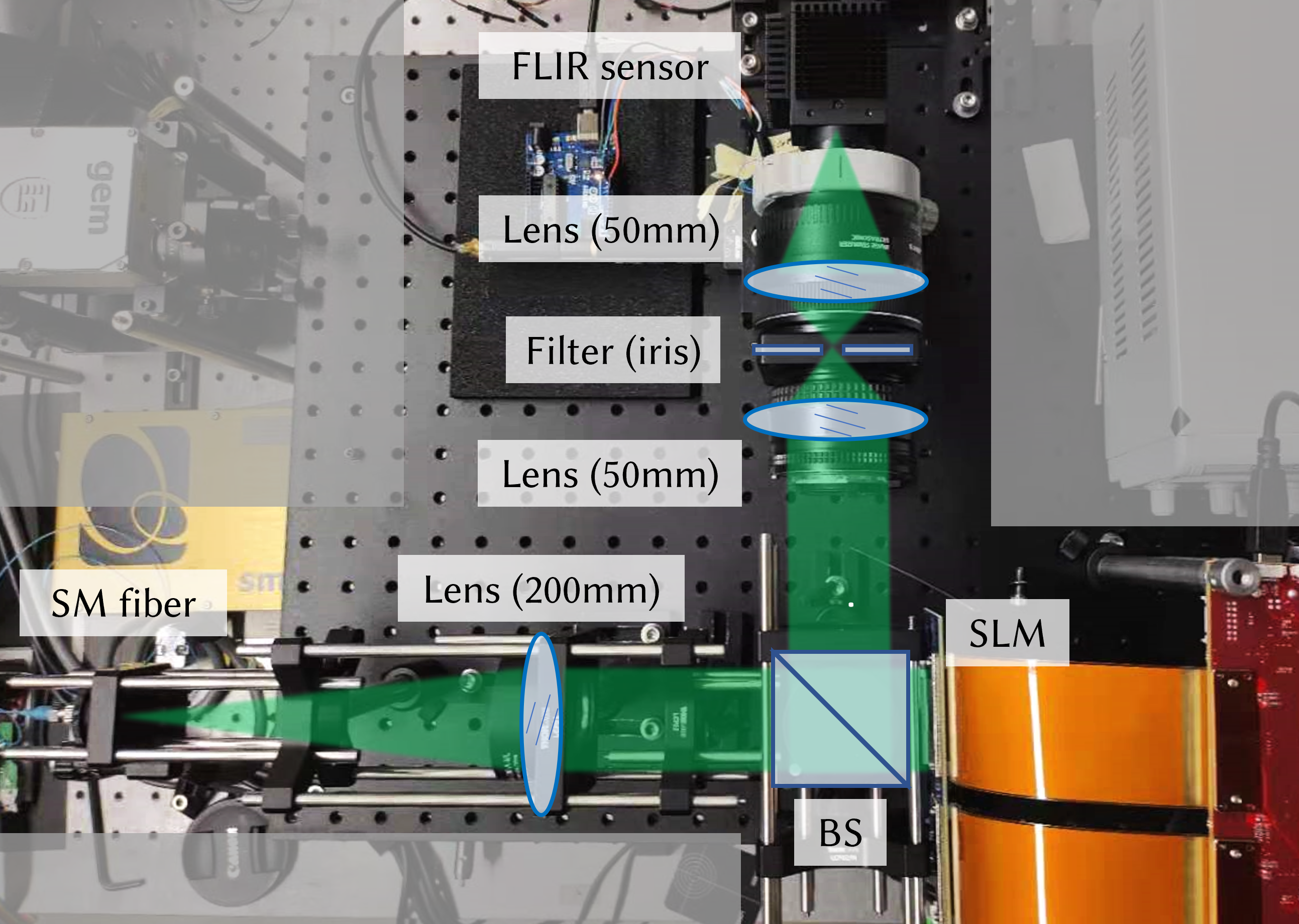

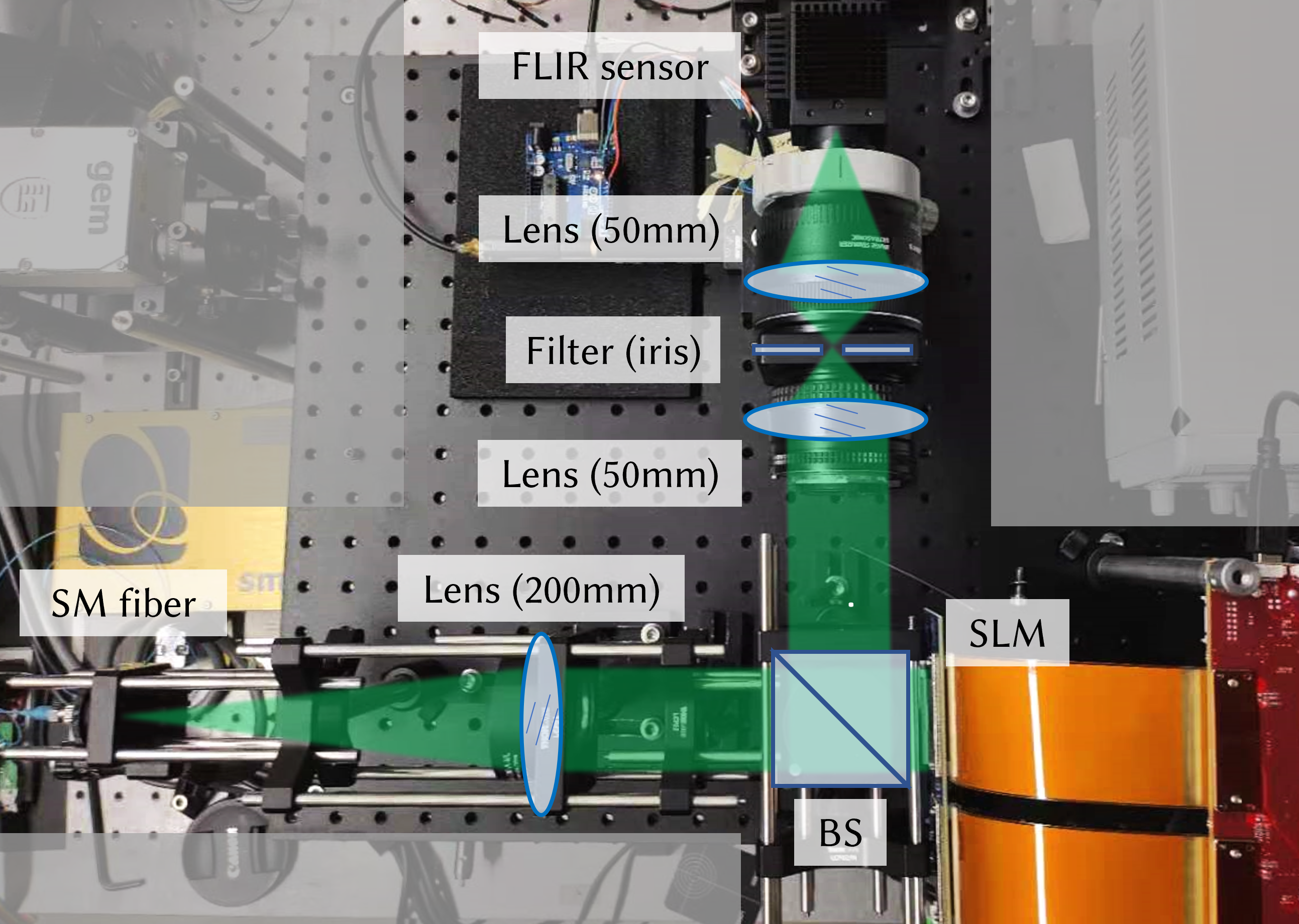

| Display prototype. Photograph of the prototype holographic near-eye display systems. |