|

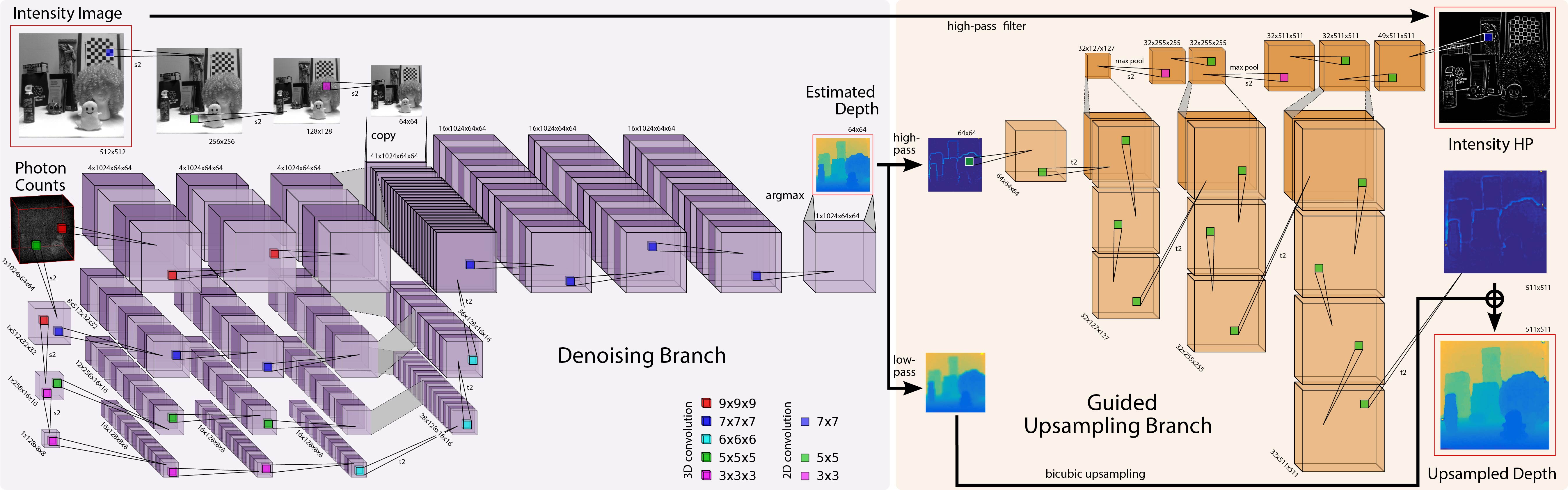

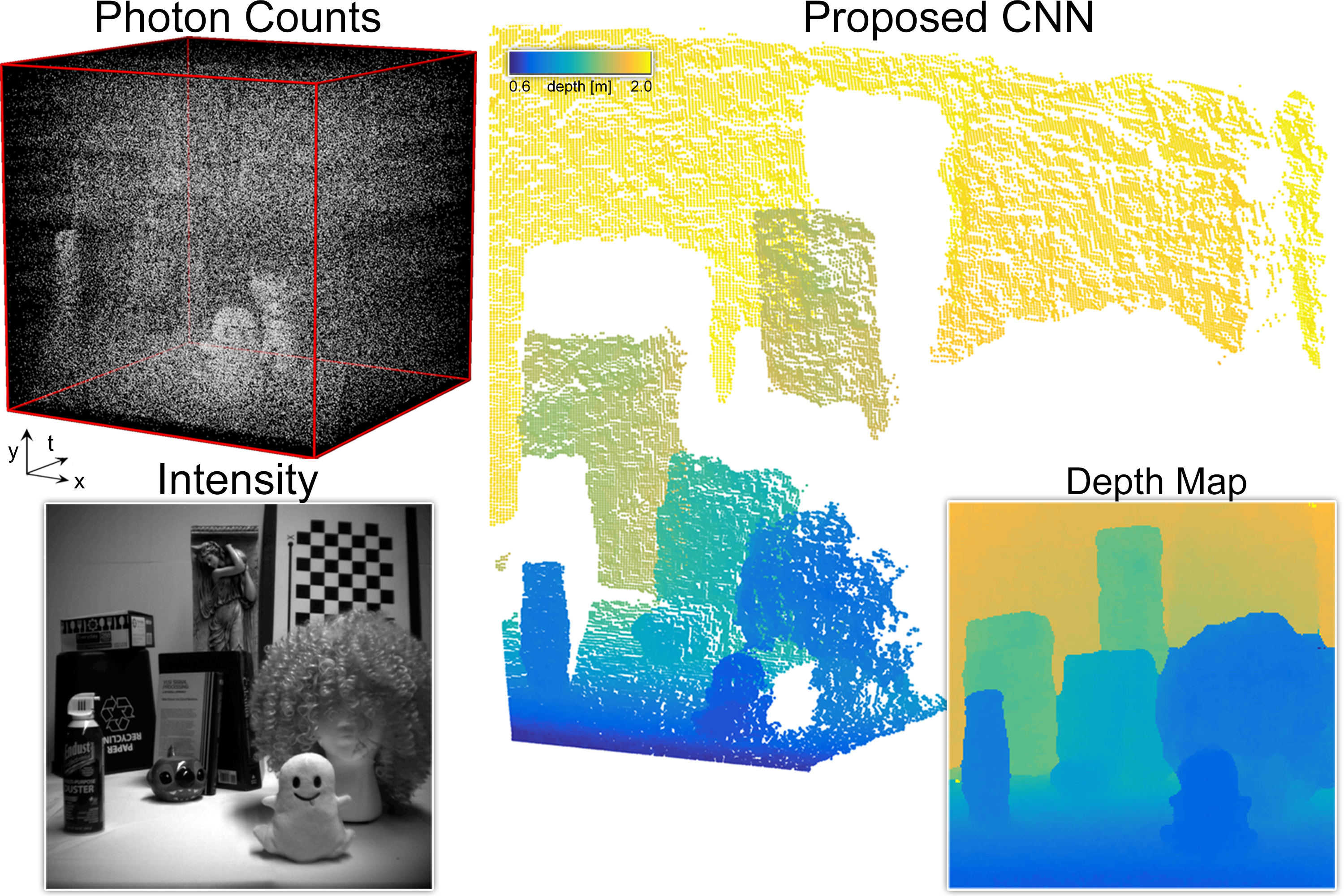

| Single-photon 3D imaging systems measure a spatio-temporal volume containing photon counts (left) that include ambient light, noise, and photons emitted by a pulsed laser into the scene and reflected back to the detector. We introduce a data-driven approach to solve this depth estimation problem and explore deep sensor fusion approaches that use an intensity image of the scene to optimize the robustness and resolution of the depth estimation (right). |
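To make the measurement model concrete, here is a minimal single-pixel sketch in Python. It is not the data-driven method introduced in the paper. It is a classical matched-filter baseline, shown only to illustrate how a depth can be read out of a photon-count histogram that mixes laser returns with ambient light. The Gaussian pulse shape, the bin width, the photon levels, and every function name below are assumptions chosen for illustration.

```python
import math
import random

C = 299_792_458.0  # speed of light in m/s


def gaussian_pulse(num_taps, sigma_bins):
    """Discrete, normalized Gaussian used as a stand-in for the laser pulse (assumption)."""
    center = num_taps // 2
    taps = [math.exp(-0.5 * ((i - center) / sigma_bins) ** 2) for i in range(num_taps)]
    total = sum(taps)
    return [t / total for t in taps]


def sample_poisson(rate, rng):
    """Draw a Poisson sample with Knuth's method; adequate for small per-bin rates."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_histogram(num_bins, true_bin, signal_photons, ambient_per_bin, pulse, rng):
    """Photon counts for one pixel: constant ambient rate plus a pulse centered at true_bin."""
    rates = [ambient_per_bin] * num_bins
    half = len(pulse) // 2
    for k, weight in enumerate(pulse):
        idx = true_bin + k - half
        if 0 <= idx < num_bins:
            rates[idx] += signal_photons * weight
    return [sample_poisson(r, rng) for r in rates]


def matched_filter_depth(histogram, pulse, bin_width_s):
    """Correlate the histogram with the pulse and convert the peak bin to depth."""
    half = len(pulse) // 2
    best_bin, best_score = 0, float("-inf")
    for center in range(len(histogram)):
        score = 0.0
        for k, weight in enumerate(pulse):
            idx = center + k - half
            if 0 <= idx < len(histogram):
                score += histogram[idx] * weight
        if score > best_score:
            best_bin, best_score = center, score
    time_of_flight = best_bin * bin_width_s
    return best_bin, C * time_of_flight / 2.0  # halve for the round trip


if __name__ == "__main__":
    rng = random.Random(0)
    pulse = gaussian_pulse(num_taps=15, sigma_bins=2.0)
    hist = simulate_histogram(256, true_bin=100, signal_photons=50.0,
                              ambient_per_bin=0.05, pulse=pulse, rng=rng)
    peak_bin, depth_m = matched_filter_depth(hist, pulse, bin_width_s=100e-12)
    print(f"peak bin {peak_bin}, estimated depth {depth_m:.3f} m")
```

A per-pixel estimator like this ignores spatial context and degrades as the signal level drops relative to ambient light, which is the regime the data-driven and sensor-fusion approaches described above are meant to address.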

FILES

- technical paper (link)

- technical paper supplement (link)

- code (link)

- additional data (link)

CITATION

David B. Lindell, Matthew O’Toole, and Gordon Wetzstein. 2018. Single-Photon 3D Imaging with Deep Sensor Fusion. ACM Trans. Graph. 37, 4, Article 113 (August 2018), 12 pages. https://doi.org/10.1145/3197517.3201316

BibTeX

@article{Lindell:2018:3D,

author = {David B. Lindell and Matthew O’Toole and Gordon Wetzstein},

title = {{Single-Photon 3D Imaging with Deep Sensor Fusion}},

journal = {ACM Trans. Graph. (SIGGRAPH)},

volume = {37},

number = {4},

year = {2018},

}

Related Projects

You may also be interested in related projects, where we have developed non-line-of-sight imaging systems:

- Metzler et al. 2021. Keyhole Imaging. IEEE Trans. Computational Imaging (link)

- Lindell et al. 2020. Confocal Diffuse Tomography. Nature Communications (link)

- Young et al. 2020. Non-line-of-sight Surface Reconstruction using the Directional Light-cone Transform. CVPR (link)

- Lindell et al. 2019. Wave-based Non-line-of-sight Imaging using Fast f-k Migration. ACM SIGGRAPH (link)

- Heide et al. 2019. Non-line-of-sight Imaging with Partial Occluders and Surface Normals. ACM Transactions on Graphics (presented at SIGGRAPH) (link)

- Lindell et al. 2019. Acoustic Non-line-of-sight Imaging. CVPR (link)

- O’Toole et al. 2018. Confocal Non-line-of-sight Imaging based on the Light-cone Transform. Nature (link)

and direct line-of-sight or transient imaging systems:

- Bergman et al. 2020. Deep Adaptive LiDAR: End-to-end Optimization of Sampling and Depth Completion at Low Sampling Rates. ICCP (link)

- Nishimura et al. 2020. 3D Imaging with an RGB camera and a single SPAD. ECCV (link)

- Heide et al. 2019. Sub-picosecond photon-efficient 3D imaging using single-photon sensors. Scientific Reports (link)

- Lindell et al. 2018. Single-Photon 3D Imaging with Deep Sensor Fusion. ACM SIGGRAPH (link)

- O’Toole et al. 2017. Reconstructing Transient Images from Single-Photon Sensors. CVPR (link)

Acknowledgments

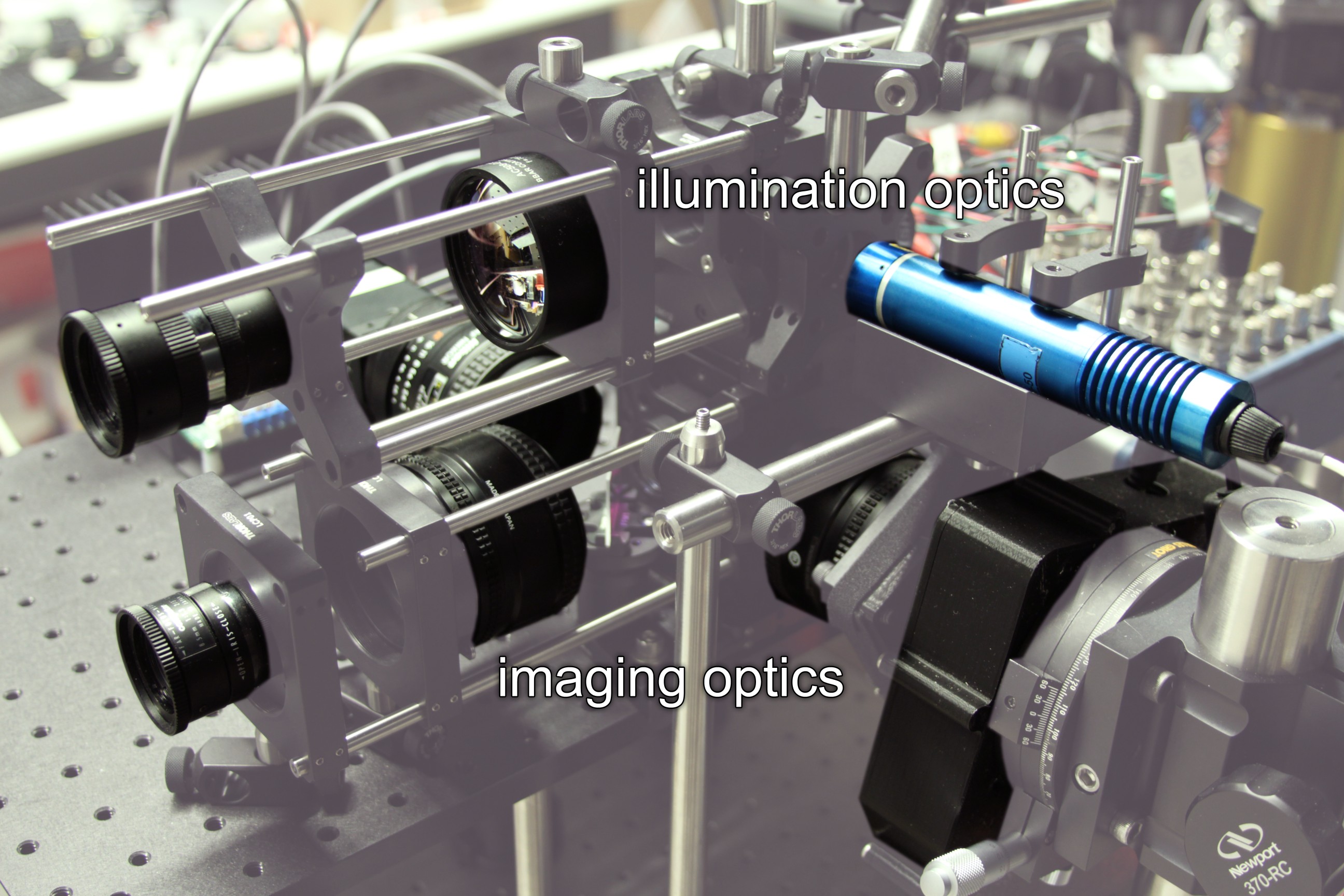

This project was supported by a Stanford Graduate Fellowship, a Banting Postdoctoral Fellowship, an NSF CAREER Award (IIS 1553333), a Terman Faculty Fellowship, a Sloan Fellowship, the KAUST Office of Sponsored Research through the Visual Computing Center CCF grant, the Center for Automotive Research at Stanford (CARS), and the DARPA REVEAL program. The authors are grateful to Edoardo Charbon, Pierre-Yves Cattin, and Samuel Burri for providing the LinoSPAD sensor used in this work and for their continued support of it.