ABSTRACT

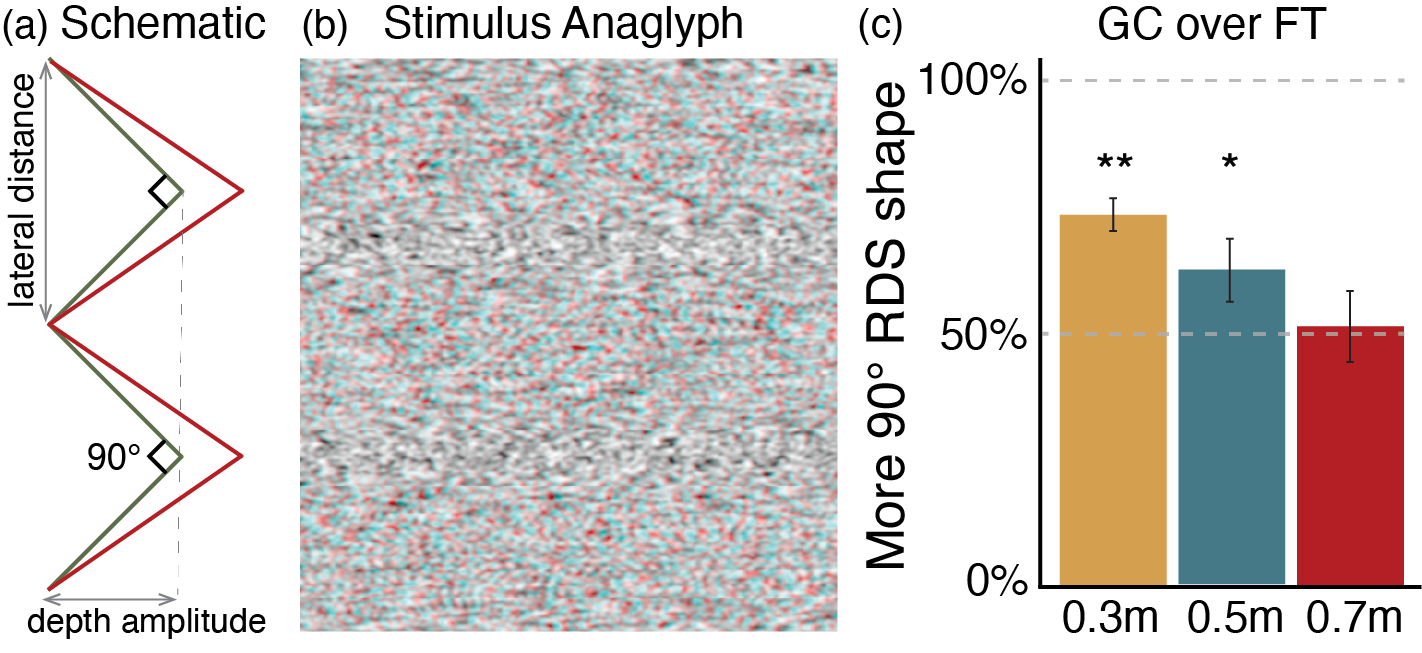

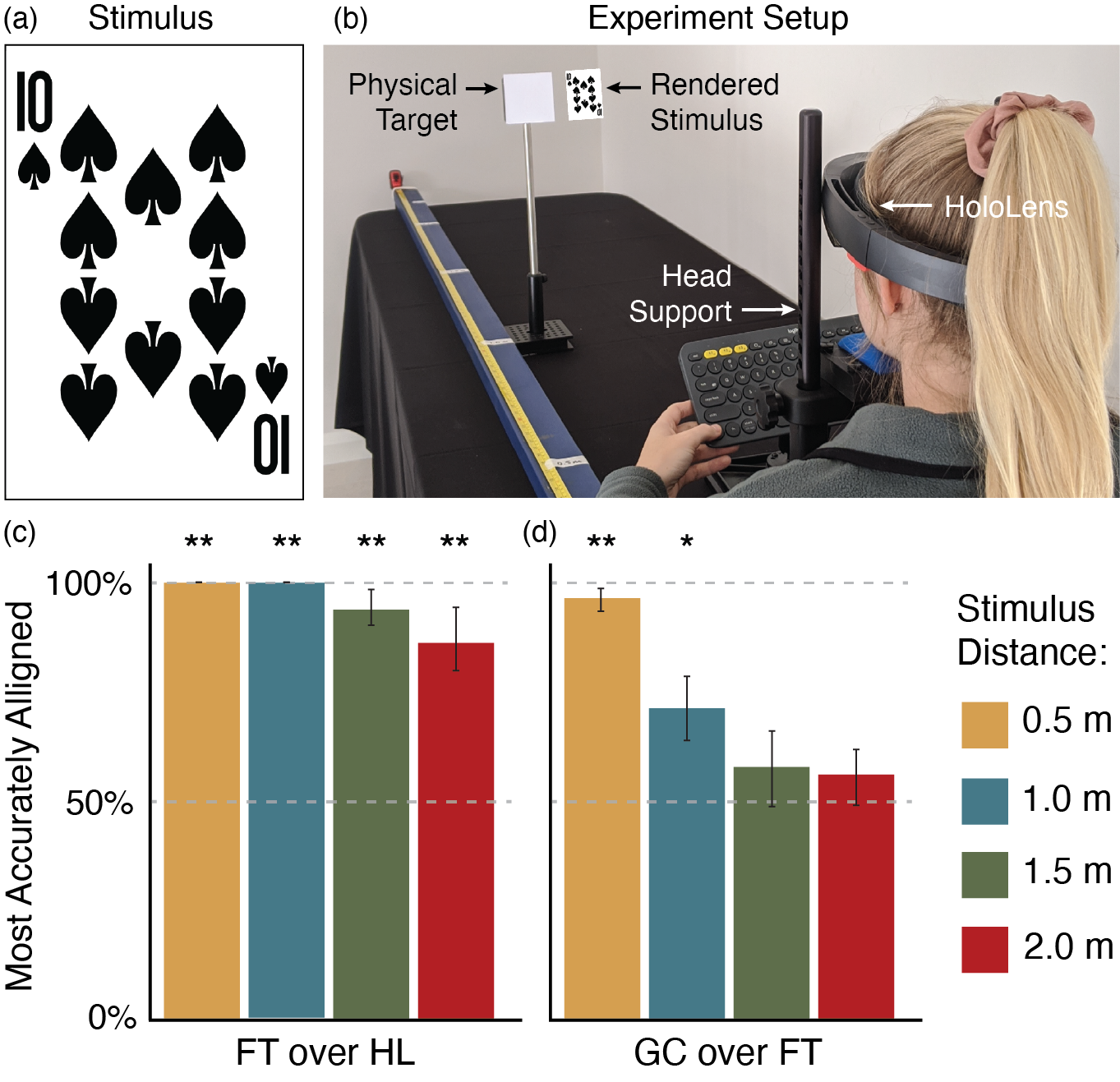

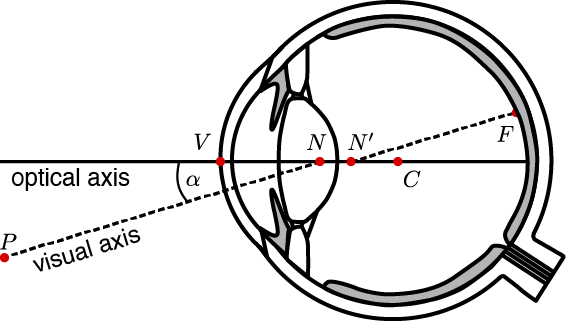

Virtual and augmented reality (VR/AR) displays crucially rely on stereoscopic rendering to enable perceptually realistic user experiences. Yet, existing near-eye display systems ignore the gaze-dependent shift of the no-parallax point in the human eye. Here, we introduce a gaze-contingent stereo rendering technique that models this effect and conduct several user studies to validate its effectiveness. Our findings include experimental validation of the location of the no-parallax point, which we then use to demonstrate significant reductions in disparity and shape distortion in a VR setting, and consistent alignment of physical and digitally rendered objects across depths in optical see-through AR. Our work shows that gaze-contingent stereo rendering improves perceptual realism and depth perception in emerging wearable computing systems.
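To make the gaze-dependent shift concrete, here is a minimal Python sketch, not the authors' implementation. It assumes a simplified eye model in which the no-parallax point sits a fixed distance in front of the eye's center of rotation along the gaze direction. The offset `NPP_OFFSET_MM`, the interpupillary distance `IPD_MM`, and every helper name are hypothetical placeholders, not values or code from the paper. In gaze-contingent mode each virtual stereo camera follows its eye's rotated no-parallax point; the baseline keeps each camera where it sits for straight-ahead gaze.

```python
import math

# Hypothetical parameters (illustrative assumptions, not values from the paper).
IPD_MM = 64.0         # distance between the two eyes' centers of rotation
NPP_OFFSET_MM = 7.0   # assumed rotation-center-to-no-parallax-point distance

Vec = tuple[float, float, float]
FORWARD: Vec = (0.0, 0.0, -1.0)  # straight-ahead gaze; the viewer looks down -z


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def norm(a: Vec) -> float:
    return math.sqrt(dot(a, a))


def normalize(a: Vec) -> Vec:
    n = norm(a)
    return (a[0] / n, a[1] / n, a[2] / n)


def rotation_centers(ipd: float = IPD_MM) -> tuple[Vec, Vec]:
    """Left and right eye rotation centers, symmetric about the origin on the x-axis."""
    return (-ipd / 2, 0.0, 0.0), (ipd / 2, 0.0, 0.0)


def no_parallax_point(center: Vec, fixation: Vec, offset: float = NPP_OFFSET_MM) -> Vec:
    """No-parallax point of an eye rotated to look at `fixation` (simplified model)."""
    gaze = normalize(sub(fixation, center))
    return add(center, scale(gaze, offset))


def stereo_cameras(fixation: Vec, gaze_contingent: bool = True) -> tuple[Vec, Vec]:
    """Virtual camera positions for the left and right eye."""
    left_c, right_c = rotation_centers()
    if gaze_contingent:
        # Each camera follows its eye's no-parallax point as the eyes verge on the fixation.
        return no_parallax_point(left_c, fixation), no_parallax_point(right_c, fixation)
    # Gaze-independent baseline: cameras stay where they sit for straight-ahead gaze.
    ahead = scale(FORWARD, NPP_OFFSET_MM)
    return add(left_c, ahead), add(right_c, ahead)


def vergence(cams: tuple[Vec, Vec], point: Vec) -> float:
    """Angle (radians) between the rays from the two cameras to `point`."""
    u, v = sub(point, cams[0]), sub(point, cams[1])
    cos_t = dot(u, v) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, cos_t)))


def angular_disparity(cams: tuple[Vec, Vec], fixation: Vec, target: Vec) -> float:
    """Relative disparity of `target` w.r.t. `fixation`; positive when target is nearer (crossed)."""
    return vergence(cams, target) - vergence(cams, fixation)


if __name__ == "__main__":
    fixation, target = (0.0, 0.0, -500.0), (0.0, 0.0, -300.0)
    for gc in (False, True):
        cams = stereo_cameras(fixation, gaze_contingent=gc)
        d = math.degrees(angular_disparity(cams, fixation, target))
        print(f"gaze_contingent={gc}: disparity = {d:.4f} deg")
```

Comparing the two printed values shows how the rendered disparity changes when the camera origin follows the gaze instead of staying fixed; the size of the difference depends entirely on the assumed offset and viewing geometry.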

CITATION

B. Krajancich, P. Kellnhofer, G. Wetzstein, “Optimizing Depth Perception in Virtual and Augmented Reality through Gaze-contingent Stereo Rendering”, in ACM Trans. Graph., 39 (6), 2020.

BibTeX

@article{Krajancich:2020:gc_stereo,
  author = {Krajancich, Brooke and Kellnhofer, Petr and Wetzstein, Gordon},
  title = {Optimizing Depth Perception in Virtual and Augmented Reality through Gaze-contingent Stereo Rendering},
  journal = {ACM Trans. Graph.},
  volume = {39},
  number = {6},
  year = {2020}
}