Computational Cameras and Displays

Computational photography has become an increasingly active area of research within the computer vision community. Within the last few years, the amount of research has grown tremendously, with dozens of papers published per year in a variety of vision, optics, and graphics venues. A similar trend can be seen in the emerging field of computational displays: spurred by the widespread availability of precise optical and material fabrication technologies, the research community has begun to investigate the joint design of display optics and computational processing. Such displays are designed not only for human observers but also for computer vision applications, providing high-dimensional structured illumination that varies in space, time, angle, and the color spectrum. This workshop is designed to unite the computational camera and display communities by considering to what degree concepts from computational cameras can inform the design of emerging computational displays and vice versa, with both focused on applications in computer vision.

The CCD workshop series serves as an annual gathering place for researchers and practitioners who design, build, and use computational cameras, displays, and projector-camera systems for a wide variety of uses. The workshop solicits papers, posters, and demo submissions on all topics relating to projector-camera systems.

Previous CCD Workshops:

CCD2016, CCD2015, CCD2014, CCD2013, CCD2012

Important Dates

Paper registration: April 3

Paper submission deadline: April 5

Paper acceptance notification: May 5

Camera ready date: May 15

Poster/Demo submission deadline: June 30

Workshop date: July 21

Venue

The CCD Workshop is part of the CVPR 2017 workshops.

Please see the CVPR webpage for details on the venue, accommodations, and other logistics.

Latest News

February 8: Website online.

May 11: Three exciting keynote speakers announced!

June 23: The program is available.

Participate

Paper Submissions

Paper submissions are handled through the workshop’s CMT website: https://cmt3.research.microsoft.com/CCD2017/

The paper submission deadline is Wednesday, April 5th. Submissions can be up to 8 pages in length (excluding references) and must be prepared using the CVPR-CCD Author Kit. Supplementary material can also be submitted if appropriate. Videos should be in a common format, e.g., MPEG-1, MPEG-4, XviD, or DivX. The submission and review process is double blind; avoid providing any information that may identify the authors. Papers accepted to the workshop will appear in both the CVPR proceedings and on IEEE Xplore.

Poster/Demo Submissions

CCD’s poster & demo session provides an excellent opportunity for attendees to present recently published works or late-breaking results. Note that unlike paper submissions, posters & demos do not have to be original works.

To submit a poster or demo, email us directly at ccd-workshop-2017@lists.stanford.edu with the subject “CCD Poster” by June 30th. Submissions should include a title, authors, affiliations, and a short description of the proposed poster or demo. We also encourage submitting supporting material (e.g. published papers, videos).

Program

| Time | Event |
| --- | --- |
| 8:30 – 8:45 | Welcome and Opening Remarks |
| 8:45 – 9:45 | Keynote 1: A Computer Graphics Perspective on Forward and Inverse Light Transport |
| 9:45 – 10:15 | Poster Spotlights |
| 10:15 – 10:30 | Morning Break |
| 10:30 – 11:30 | Session 1: Intel RealSense Stereoscopic Depth Cameras; Compressive Light Field Reconstructions using Deep Learning; Invited Talk: One-shot Hyperspectral Imaging using Faced Reflectors |
| 11:30 – 13:00 | Lunch (on your own) |
| 13:00 – 14:00 | Keynote 2: Computational Display for Virtual and Augmented Reality |
| 14:00 – 14:45 | Session 2: Generating 5D Light Fields in Scattering Media for Representing 3D Images; The Stereoscopic Zoom |
| 14:45 – 16:00 | Afternoon Break + Poster Session |
| 16:00 – 16:45 | Session 3: Invited Talk: Title TBD; Invited Talk: Inverse-scattering Bridging Micron to Kilometer Scales |
| 16:45 – 17:00 | Closing Remarks |

Keynote talks

Matthias Hullin, Professor and Head of Digital Material Appearance Group, University of Bonn

Title: A Computer Graphics Perspective on Forward and Inverse Light Transport

Abstract: A recurring theme in my work is the development of devices and computational methods to measure, simulate, analyse and invert the propagation of light on macroscopic scales. In this talk, I will present a selection of recent research that is linked by the desire to replace specialized and heavyweight optical devices with consumer-grade hardware and computational methods. In particular, I will draw a vision of how computer graphics methodology (forward simulation of light transport, or rendering) could become a useful tool for solving inverse problems in imaging and various remote sensing applications.

Speaker Bio: Matthias Hullin is a professor of Digital Material Appearance at the University of Bonn, a post he took after research stages at the Max Planck Center for Visual Computing and Communication and the University of British Columbia. He obtained a doctorate in computer science from Saarland University (2010) with a dissertation that was awarded the Otto Hahn Medal of the Max Planck Society, and a Diplom in physics from the University of Kaiserslautern (2007). His research is focused on the interface between physics, computer graphics and computer vision.

David Luebke, VP Graphics Research, NVIDIA

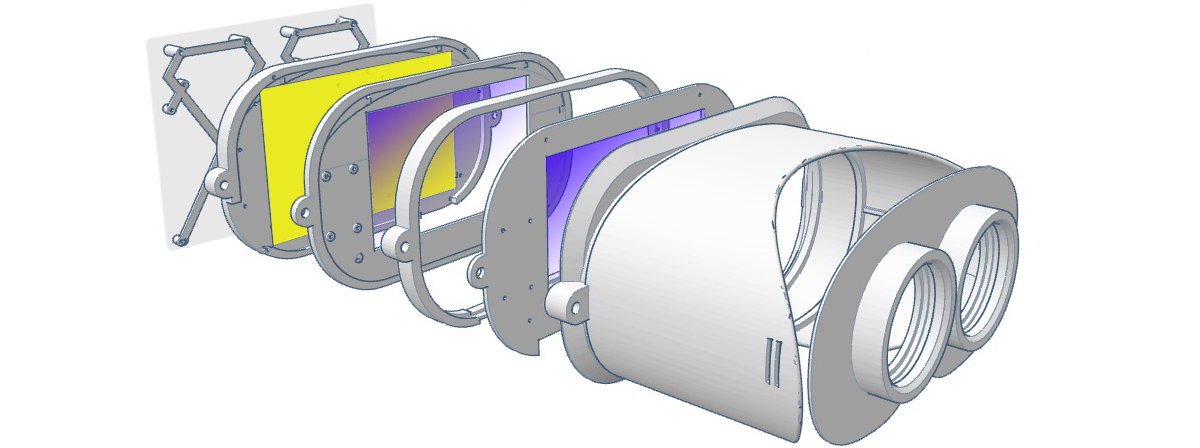

Title: Computational Display for Virtual and Augmented Reality

Abstract: Successful wearable displays for virtual & augmented reality face tremendous challenges, including:

NEAR-EYE DISPLAY: how to put a display as close to the eye as a pair of eyeglasses, where we cannot bring it into focus?

FIELD OF VIEW: how to fill the user’s entire vision with displayed content?

RESOLUTION: how to fill that wide field of view with enough pixels, and how to render all of those pixels? A “brute force” display would require something like 10,000×8,000 pixels per eye!

BULK: displays should be vanishingly unobtrusive, as light and forgettable as a pair of sunglasses, but the laws of optics dictate that most VR displays today are bulky boxes bigger than ski goggles.

FOCUS CUES: today’s VR displays provide binocular display but only a fixed optical depth, thus missing the monocular depth cues from defocus blur and introducing vergence-accommodation conflict.

EYEBOX: wearable displays must accommodate a wide range of facial shapes, ideally without being tightly strapped or custom-fitted to the wearer’s head, but many optical designs form a very small exit pupil or exhibit significant distortion across the eyebox.

Overcoming these challenges requires understanding and innovation in vision science, optics, display technology, and computer graphics. At NVIDIA Research we have been drawing on all these fields to address (or sidestep!) these challenges, with the goal of moving closer to the “ultimate display”: a diffraction-limited, wide field-of-view, see-through display as light and forgettable as a pair of sunglasses. I will describe several computational display VR/AR prototypes in which we have co-designed the optics, display, and rendering algorithm with the human visual system to achieve new tradeoffs.

Speaker Bio: David Luebke runs graphics research at NVIDIA Research, which he helped found in 2006 after eight years on the faculty of the University of Virginia. His principal research interests are virtual and augmented reality, ray tracing, and real-time rendering.

Workshop chairs

Mohit Gupta, University of Wisconsin-Madison

Matthew O’Toole, Stanford University

Aswin C. Sankaranarayanan, Carnegie Mellon University

Committee members

Ashok Veeraraghavan, Rice University

Ayan Chakrabarti, TTIC

Belen Masia, Universidad de Zaragoza

Brandon Smith, University of Wisconsin-Madison

Douglas Lanman, Oculus Research

Imari Sato, National Institute of Informatics

Ioannis Gkioulekas, Carnegie Mellon University

Jason Holloway, Columbia University

Kaushik Mitra, IIT Madras

Sanjeev Koppal, University of Florida

Kristin Dana, Rutgers University

Oliver Cossairt, Northwestern University

Roarke Horstmeyer, California Institute of Technology

Srikumar Ramalingam, University of Utah

Suren Jayasuriya, Carnegie Mellon University

Tomas Pajdla, Czech Technical University in Prague

Yasuhiro Mukaigawa, NAIST

Yuichi Taguchi, MERL